Last week I launched the Lumberjack with my first-ever post. I explained that over the last 18 months I built a rather successful AI education startup on a single assumption: that an AI revolution, led by ChatGPT, was imminent because AI makes everyone instantly more productive. Therefore, upskilling in AI was going to be the most important thing tomorrow.

I also explained how this assumption was recently falsified, which made me realize that I no longer believe upskilling is the key to the riches AI promises.

So I resigned, handed the reins to my cofounder, surrendered my shares and moved on.

I received a lot of comments, along with anecdotal evidence of people extracting immense value from LLMs, despite the lack of big-picture proof in the statistics I presented.

I also got called out as “someone jumping on the bubble burst bandwagon”, so I decided to shout it a bit louder:

LLMs are incredibly valuable tools, yet there is a discrepancy: personal experience says so, but very little measurable evidence exists to prove it.

My first intuition said it was a skill problem.

We can’t see it because some people have the skills and some do not.

I was wrong. You can read my last post to see why.

AI is a technological phenomenon that greatly impacts our society. A weird concept lives at the intersection of social and tech issues, and I think it is the key to unlocking the second wave of AI adoption:

Seeing value from AI is not a matter of skill. It’s a matter of agency.

The Human Colossus

Tim Urban, author of the Wait But Why blog, wrote my favorite piece of writing ever in 2017. In that series, he explained how we created the Human Colossus.

“The avalanche of books allowed knowledge to transcend borders, as the world’s regional knowledge towers finally merged into one species-wide knowledge tower that stretched into the stratosphere.

The better we could communicate on a mass scale, the more our species began to function like a single organism, with humanity’s collective knowledge tower as its brain and each individual human brain like a nerve or a muscle fiber in its body. With the era of mass communication upon us, the collective human organism—the Human Colossus—rose into existence.”

There is a single cohesive element that glues the Human Colossus together. It’s language. Yuval Noah Harari argued that it’s the operating system of human civilization. More importantly:

Everything that makes up our society is nothing but cultural artifacts: human rights, laws, customs, myths, and the written and unwritten rules of societies. They are created and maintained through language. Thus, language is the operating system of our intelligence.

It’s so important that, as Jurgen Gravestein recently wrote in his Substack, “People treat language fluency as a proxy for intelligence. Simply put, we judge how smart people are based on the way they talk.”

But language is not intelligence. Treating it as a proxy leads us humans to be biased against people with speech impediments, underestimating their intellectual capacity, while overestimating systems like GPT-4o because they talk eloquently.

The Human Colossus, however, is not alone.

It created another thing: the Computer Colossus.

In the early 90s, we taught millions of isolated machine brains how to communicate with one another. They formed a worldwide computer network, and a new giant was born—the Computer Colossus.

So we, the human hive mind, the Human Colossus, figured out how to connect the neurons in your brain with the neurons in mine: through language. Then we used transistors to build one big brain, the Computer Colossus, which is practically the internet. Since LLMs were trained on its contents, ChatGPT is, in Ted Chiang’s phrase, a blurry JPEG of the web.

However, unlike before, the Human and the Computer Colossus can now talk to each other, because they run on the same OS:

Language.

This OS is evolving so rapidly that it’s becoming hard to tell what’s real and what’s not, with GPT-4 passing the Turing Test with flying colors. And it’s not just text, but other modalities too. Gravestein called this “hyperreality.” Within the last two years, we went beyond the Uncanny Valley.

Pictures like the ones below are not “almost-human-but-not-really”. Instead, they are so human they turn our perception the other way around:

We are now suffering from inattentional blindness. Only a few of you will have noticed that Fake Airbnb Guy has way too many fingers.

Humans are intelligent, but our hardware runs LanguageOS a lot slower than our computers do.

We generate about 5 tokens per second, while GPT-4o generates almost 100 tokens per second. So yes, LLMs cannot deal with outliers (I explained this in my last post), count the r’s in “strawberry,” or stop making shit up, but they’re blazingly fast.
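To make that speed gap concrete, here is a quick back-of-the-envelope sketch in Python. It takes the rough rates above at face value (about 5 tokens per second for a human, about 100 for GPT-4o) and assumes both produce text nonstop, which nobody actually does; the 8-million-token workload is borrowed from my own ChatGPT total, which I mention further down.

```python
# Rough generation rates quoted in the text (tokens per second).
# These are ballpark figures, not measurements.
HUMAN_TOKENS_PER_SEC = 5
GPT4O_TOKENS_PER_SEC = 100

# How many times faster the model produces text than a human.
speedup = GPT4O_TOKENS_PER_SEC / HUMAN_TOKENS_PER_SEC

# Illustrative workload: ~8 million tokens, assuming nonstop output
# with no thinking, editing, or breaks on either side.
workload_tokens = 8_000_000
human_hours = workload_tokens / HUMAN_TOKENS_PER_SEC / 3600
model_hours = workload_tokens / GPT4O_TOKENS_PER_SEC / 3600

print(f"speedup: {speedup:.0f}x")
print(f"human: {human_hours:.0f} h, model: {model_hours:.1f} h")
```

A 20x ratio sounds abstract; roughly 444 hours of nonstop human output versus about 22 hours for the model is easier to feel.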

Hm, so it’s not very intelligent, but it’s fast. What is it good for then?

Using LLMs is a private experience

People have been finding their own answers to this question for the last 18 months. I won’t analyze the macro picture further; I did that last time. Now I’ll focus on the individual.

Ethan Mollick writes about this a lot. Emily Pitts Donahoe explains that she had this same conversation with Leon Furze.

“Leon Furze says that he sometimes dictates the first drafts of entire articles by recording voice memos on his phone while he’s out for a run,” says Emily.

Then she adds: “This would never work for me, and not just because I’m a fairly poor runner. But it clearly works for Leon! That’s the thing that matters.”

As the conversation goes on, she concludes that “because the most meaningful or important work of writing occurs at different stages of the process for different people, it’s very difficult to make hard-and-fast rules about where AI supports or hinders learning, even within one assignment.”

Finding value in AI is an inherently individual experience.

You can’t really teach this.

There’s nothing to teach.

You just need to fuck around until you find out.

Emily realized this when she said:

“I think we also have to encourage experimentation and play.”

This deep, personal experience of using AI is echoed by Nicholas Carlini. I highly recommend reading his list of all the things he uses AI for. I’m also inclined to start writing a list like that of my own. Over the last 18 months, I’ve generated over 8 million tokens’ worth of text with ChatGPT alone, so I’m sure I can find loads of valuable use cases there to share.

Carlini’s critics claim his use cases would be “easily done by an undergrad”. But he has a response to the naysayers:

I don't have that magical undergrad who will drop everything and answer any question I have. But I do have the language model. And so sure; language models are not yet good enough that they can solve the interesting parts of my job as a programmer. And current models can only solve the easy tasks.

This is what Ethan Mollick meant when he said that “It is individuals who are benefiting from AI, as systems and organizations are much slower to adapt and change to new technologies. But individuals really are benefitting.”

Replace ChatGPT with a dozen undergrads, and most people who are not getting value out of ChatGPT would still have the same problem.

If I gave you a dozen undergrads, you would have no idea what to do with them to make your own life better. We’re so used to not having agency like that that most of us are completely tone-deaf to the opportunity. Except for the rebels, who like to play “Fuck Around, Find Out.”

Good on them, because AI is currently living in its FAFO era.

FAFO unlocks latent expertise

Those who play this game unlock latent expertise, Mollick argues. Putting AI to work will bring the second wave of AI adoption and real growth. This happened before, during the Industrial Revolution, and it will happen again. Crazy new inventions spread not just because they were invented, but because people put them to use, refining them and applying them to very specific problems.

“Right now, nobody - from consultants to typical software vendors - has universal answers about how to use AI to unlock new opportunities in any particular industry,” Mollick says, which makes sense if you look at the missing ROI numbers.

The AI revolution is indeed a bit late, because we need to FAFO first.

But once someone with domain expertise embraces LLMs, they can use them to share their expertise in ways previously unknown.

Mollick’s example is job descriptions:

For example, LLMs can write solid job descriptions, but they often sound very generic. Is there the possibility that the AI could do something more? Dan Shapiro figured out how. He is a serial entrepreneur and co-founder of Glowforge, which makes cool laser carving tools. Dan is an expert at building culture in organizations and he credits his job descriptions as one of his secret weapons for attracting talent (plus he is hiring). But handcrafting these “job descriptions as love letters” is difficult for people who haven’t done it before. So, he built a prompt (one of many Glowforge uses in their organization) to help people do it. Dan agreed to share the prompt, which is many pages long - you can find it here.

I am not an expert in job descriptions, and certainly haven’t done the trial-and-error work that Dan has done about this topic, but I was able to draw on his expertise thanks to his prompt. I pasted in a description of the job of Technical Director for our new Generative AI Lab (more on that in a bit) at the end of his prompt, and Claude turned it from standard job description to something that is much more evocative, while maintaining all the needed details and information. Expertise, shared.

Since I’m an econ junkie, I’d call this the commoditization of skills: the transaction cost of transferring a skill from one individual to another, with nearly identical output quality, has dropped to virtually zero.

Breaking the Skill Code

The problem is that this kind of automated thinking creates what Jonathan Zittrain calls “intellectual debt.” Shapiro built his job-description prompt on the knowledge he has: his expertise. The prompt unlocked that expertise for many.

But those many people have no idea why it works; they just take the responses at face value. In some cases this kind of intellectual debt makes sense, but not always, and we have no system for deciding which is which.

“If an A.I. produces new pizza recipes, it may make sense to shut up and enjoy the pizza [perhaps not]; by contrast, when we begin using A.I. to make health predictions and recommendations, we’ll want to be fully informed,” argues Zittrain.

But automated thinking is a one-way street. Matt Beane writes in The Skill Code that the very fabric of how humans transfer skills to one another is now in danger. I really loved David Berreby’s summary of this topic, so I recommend you read it. Here’s the one key idea I’ll mention here:

When an expert can get better assistance from a robot than she could from a human apprentice, she has a lot of incentive to go with the machine. It's faster, more reliable — and in professions like surgery, actually safer (for every apprentice doctor in an operating room, Beane notes, "patients spent 25 percent longer under anesthesia"). But giving apprentice work to machines leaves the human learners no chance to get their hands dirty. The senior surgeon guides the robot, and the nurses and residents "sit there in the dark and fall asleep," as one nurse told Beane.

This is what’s driving real change: not the technology itself, but how people interact with it and the lasting changes it brings. All the effects, together. As Guy Wilson explains:

“People, technologies, and other factors create change when they interact. The details of those interactions are of interest, they are important. This is about much more than whether a technology works, but also about how people use it, react to it, the ways it changes them and their environment, and the way people and the environment affect the technology.”

At this point, it seems like our Human Colossus has developed some new cells that are interacting with the Computer Colossus in novel ways. It’s not visible to the naked eye yet, only under a microscope.

But the Human Colossus is now trying to establish new communication forms with the Computer Colossus.

This not only empowers the Computer Colossus, it is also irreversible: whatever we give away to computers, we are unlikely to get back.

In short: Humans are losing agency to Computers.

Degrees of Agency

Again, this has happened before: with the printing press, the assembly line, and the transistor. Only now we’re spoiled, dopamine-chasing monkeys with iPhones, so we got so hyped that we expected it to happen a LOT quicker. We were expecting 300 million jobs to be overturned in a year.

But it turns out AI cannot take agency from us. We have to give it away willingly. We want to, but we have no clue what, or how much, we want to give away. We’re delighted at the opportunity but terrified that we might fuck it up.

Because one key thing has never happened before, and it makes everything a lot more terrifying:

Humans have never had to think about giving agency to anything other than other humans.

A printer, your iPhone, Zapier: none of them has real agency. They just execute the rules you set. You!

To understand this problem, let’s explore the concept of agency.

Martin Hewson described three types of agency, agency being the ability to autonomously carry out actions toward a goal, maximizing rewards and minimizing risk:

- Individual: one acting on their own behalf

- Proxy: one acting on someone else’s behalf

- Collective: people acting together for a common cause

All conscious beings have agency. At least, that’s the point the Universal Declaration of Human Rights makes when it opens with Article 1:

All human beings are born free and equal in dignity and rights. They are endowed with reason and conscience and should act towards one another in a spirit of brotherhood.

But as van Lier et al. (2024) proposed, you can evaluate agency based on the dynamic interplay between an agent’s accessible history, adaptive repertoire, and environment. This approach does not require the agent to be alive or conscious, making it applicable to artificial systems.

Da Costa et al. (2024) went even further, claiming that a neuroscientific concept from Karl Friston can be applied to AI agents too, since both the human brain and AI agents aim to minimize the difference between predicted and preferred outcomes.

In their paper “Synergizing Human-AI Agency,” Zheng et al. (2023) note that “viewing agency as a spectrum recognizes that individuals may have varying degrees of autonomy, influence, and power in different contexts or situations.”

This means the Human Colossus and the Computer Colossus can share agency. Zheng et al. call this Mixed Agency: “the coexistence and interaction of human and AI agencies, where both contribute distinct expertise to enhance creativity and productivity. This synergy requires careful management to avoid agency costs, such as loss of human agency or authenticity crises.”

At an individual level, this is what Mollick means when he says “invite AI to the table”.

Mixed Agency between Human and AI

We have no idea how to create Mixed Agency. Humans have always been mostly alone. I say “mostly” because the closest thing we have is the agency of dogs, and that is nowhere near the capacity of a frontier model.

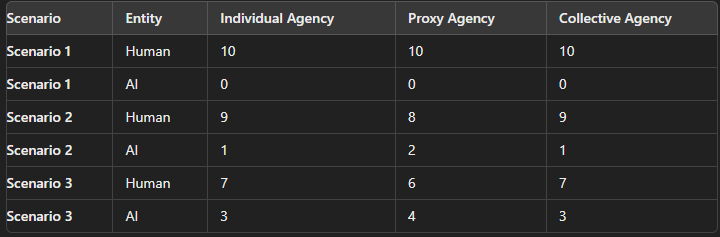

Let me give you three scenarios. They’re fictional but relevant. After feeding GPT-4o most of the papers I referenced in this post, I asked it to help me grade the levels of agency on a scale of 1 to 10.

Scenario 1: Human-Only Environment

In this scenario, all tasks, decisions, and creative processes are handled by humans alone. Here, humans have full control over individual, proxy, and collective agency. They are responsible for generating ideas, making decisions, and executing tasks. The reliance on human skills and judgment is complete, with no assistance from AI tools.

Who does what?

Humans brainstorm ideas, make decisions, and collaborate to achieve goals.

Only humans have agency.

Scenario 2: Human + LLM

In this scenario, humans collaborate with LLMs. “Invite AI to the table.” LLMs assist by providing information, generating content, and offering suggestions based on vast datasets. While humans retain control over final decisions, they leverage LLMs to enhance productivity and creativity. Proxy agency is slightly shared, as LLMs can act on behalf of humans by suggesting actions or generating preliminary content.

Who does what?

Humans remain the decision-makers but use LLMs to draft documents, explore creative ideas, or gather information.

LLMs provide a supportive role, acting as an information and content generation resource.

The agency begins to shift as humans allow LLMs to perform specific tasks on their behalf.

Scenario 3: Human + AI Agents

In this scenario, humans work alongside advanced AI agents that possess decision-making capabilities. These AI agents use LLMs, but they can also autonomously handle tasks such as data analysis, operational decisions, and some aspects of project management. Collective agency is significantly shared with AI agents, as they actively contribute to achieving common goals. Humans still oversee and guide the process but increasingly rely on AI to manage both complex and routine tasks.

Who does what?

Humans oversee and make strategic decisions, focusing on high-level goals and ethical considerations.

AI agents manage routine operations, perform data analysis, and make autonomous decisions within predefined boundaries.

The agency is more evenly distributed, with AI agents taking on a larger share of responsibilities.

Comparing the three scenarios, agency shifts away from humans and toward AI. The Computer Colossus is chipping away agency from the Human Colossus, and we love it for that.

Every use case will have a different mix of AI and human agency. This is an optimization problem: how do you find the right level of complexity for an agentic workflow and define the Mixed Agency attributes for both human and AI? Andrew Ng is particularly excited about this.

In economic terms, and without doing deeper research, I would dare to say that:

Shareholder value is becoming a function of agency distribution between humans and AI

This basically means that, to use AI well, most businesses will need to think about what exactly they want to let go of.

Decisions made NOT by humans, and being okay with that. Not just simple, deterministic ones that have an actual correct answer, but non-deterministic and creative ones too.

We can’t see productivity metrics and stock markets change because companies are not asking these questions yet.

Once they start asking them, they will start finding answers.

It’s happening at an individual level, but not at an organizational level.

Yet.

Which is why we are in the FAFO era of AI.

But the Human Colossus and the Computer Colossus are already talking. One neuron at a time. One use case at a time. One agentic workflow at a time.