In March 2023, with the help of my former co-founder, I started building a school dedicated to upskilling people in AI. We had over 10,000 students from around the world and made over $300,000 in revenue.

I poured my heart and soul into building Promptmaster. Yet on August 1st I quit and handed the reins to Dave Talas, who is now running the show solo. It hurts like hell to move on, but I’m also very excited about the future.

There were many reasons for my decision, but the most important one is this:

Prompt Engineering is a waste of time and what you know about AI doesn't matter.

Note: This is the first issue of Lumberjack. I will be sharing my brainfarts here with everyone for free. I don’t know how frequent these will be, but each time I’ll try to provide in-depth analysis and practical guides.

I love building stuff with no-code and AI, and I will share everything I build. If this is the kind of thing you like, consider subscribing. For the time being, everything will be free, but if you want to support me, please consider pledging (it’s only $5/mo).

Alright, let’s try to understand why AI is…kinda shit.


Prompting was the future (for a bit)

When GPT-4 came out, the entire world went berserk. Report after report said the same thing: millions of jobs would be lost, and the AI catastrophe (or salvation, depending on who you talked to) was imminent.

The ultimate winner was OpenAI, the undisputed emperor of this new realm. GPT-4 massively outperformed every other large language model, leaving no room for debate.

Social media, YouTube, and Google Search exploded with an entirely new category of content: making GPT-4 do smart stuff.

They called it prompt engineering, and it soon became the Job to End All Jobs.

The whole world was united in saying that prompt engineering would be Da Thing.

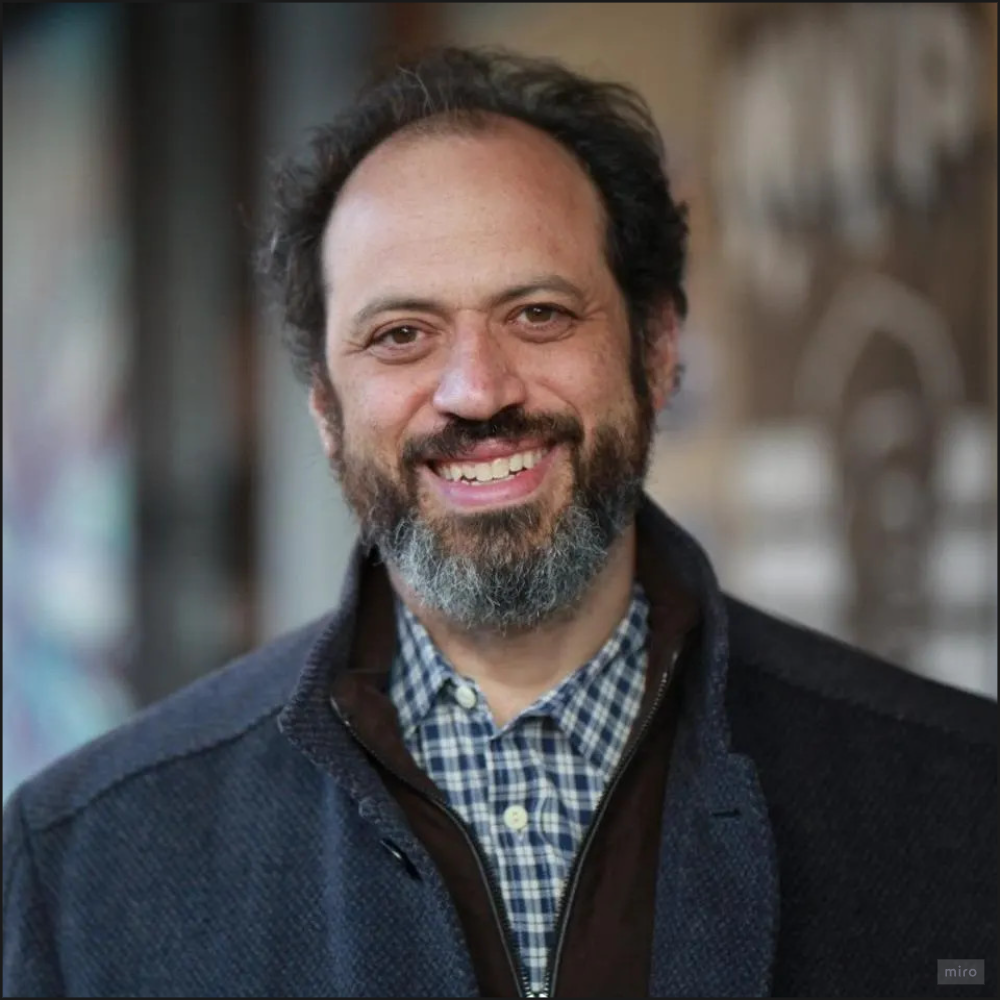

This was cemented even further when science seemed to prove that prompt engineering was indeed Da Thing. Enter this bearded fellow.

This is Ethan Mollick, Associate Professor at Wharton, author of Co-Intelligence, and the guy who runs the One Useful Thing blog (which you should read). Mollick et al. ran a study to see whether prompting actually improves people’s work, just as the media promised.

They published a paper called “Navigating the Jagged Technological Frontier”, in which they found that people who studied prompt engineering and used ChatGPT at work completed their tasks 25% faster and produced output of 40% higher quality.

There we have it. If you learn prompt engineering, you’ll do better, faster, meaner and leaner in your job. Get promoted, rich, retire early and whatever.

All you need to do is get good at it and you’re set.

My then co-founder and I built a business out of this called Promptmaster. I have a fair bit of background in machine learning, having founded and led Momentum.AI (we ran out of funding and closed down in 2017). Using our network, we reached out to academic partners and ML researchers and put together a curriculum on prompt engineering.

We started teaching everywhere we could: YouTube livestreams, online courses, university courses, live workshops.

We quickly turned this into a real, viable business, and life seemed good. All we had to do was “outteach everyone” and make sure we provided the best teaching material on the topic. (It is still better and more in-depth than anything else on the market.)

We made a few assumptions that were key to our success (and key to my departure):

ChatGPT is leading an imminent AI revolution, making everyone more productive.

The market translated this sentiment into a simple catchphrase that we also started using:

“AI will not take your job, but someone who uses AI will. Learn prompt engineering.”

Everybody agreed with this principle.

Prompt engineering is Da Thing that will make you superhumanly productive.

Except for this guy.

His name is Professor Oguz A. Acar, from King’s College London. In the summer of 2023, when everyone was applying for $300k prompt engineering jobs, buying courses to learn prompting, and generally going crazy about ChatGPT, he published a piece in the Harvard Business Review titled “AI Prompt Engineering Isn’t the Future”.

He argued that:

The core value of using AI does not come from your ability to prompt but from your ability to formulate problems: to identify, analyze, and delineate them.

A skill that 85% of C-suite executives say they’re terrible at.

Pfheh, who cares.

Who is this guy anyway?

Everybody sees how incredibly powerful ChatGPT is right? Right?!

Prompting is useless

Then a few months passed. Then a year. Do you know what didn’t happen?

The wave of economic upset everybody was envisioning.

Our core assumption was built on three statements:

- ChatGPT was leading the market,

- the AI revolution was happening, and

- AI was making us all productive.

Over time, all three of these statements have been falsified.

ChatGPT is not leading

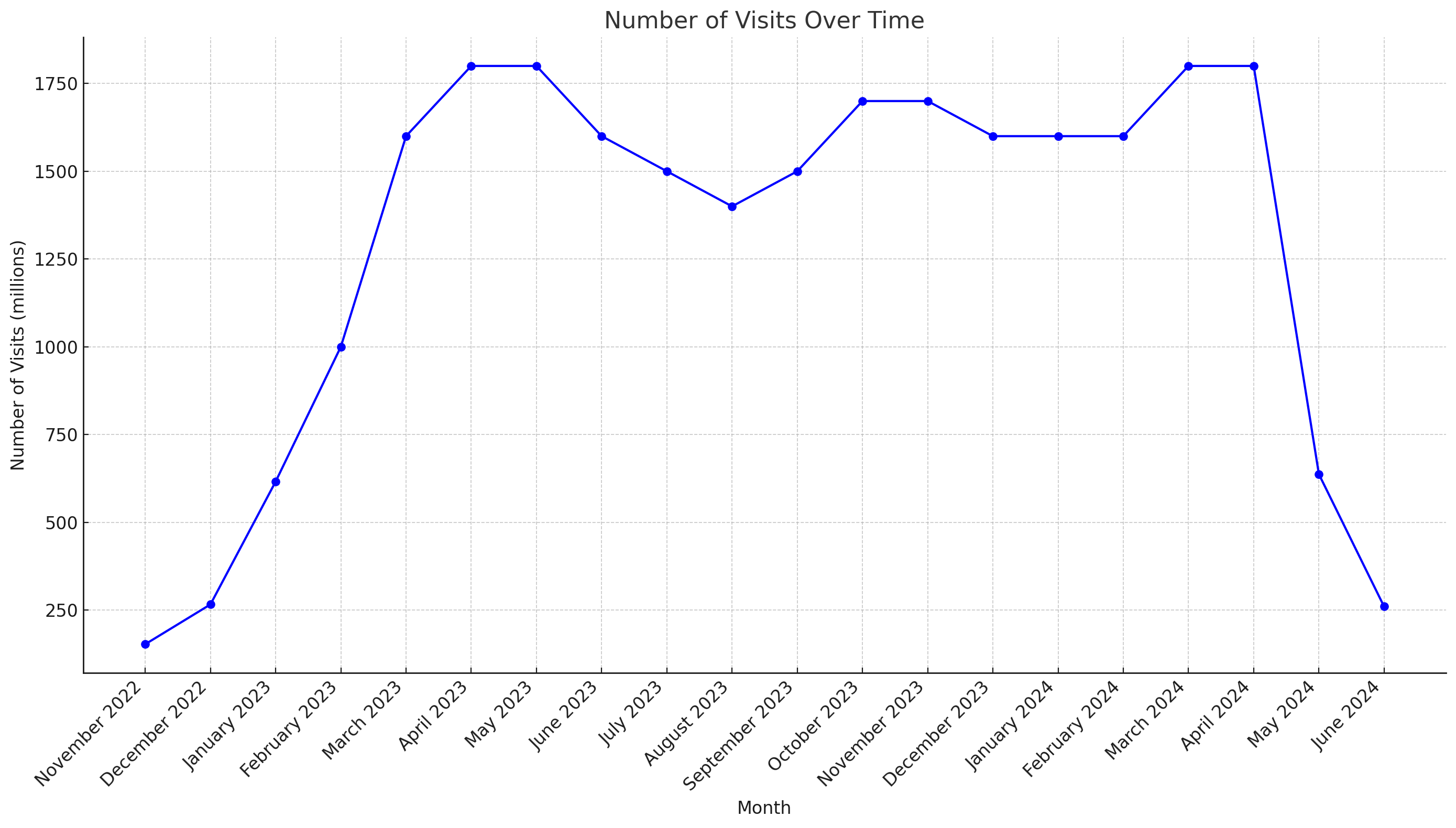

ChatGPT exploded to about 2 billion page views per month, and while OpenAI was busy with boardroom drama, the alignment debate, and Sam Altman in general, the rest of the world woke up to LLMs.

Even now this is still happening, with three top execs stepping away from OpenAI, including co-founder Greg Brockman (who announced an extended leave) just yesterday.

The AI industry started showing a few cracks.

We got Gemini 1.5 Pro. Claude 3.5 Sonnet. Llama 3.1. Mistral. All wonderfully brilliant.

Then lately ChatGPT’s throne started to…crumble and page view numbers started freefalling.

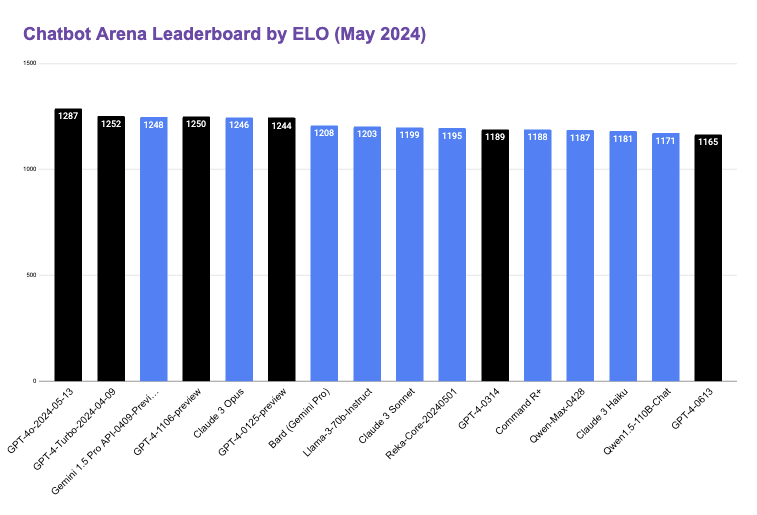

However, all the other models are thriving. Do they beat GPT-4o at everything? Absolutely not. But instead of running laps around the rest of the market, GPT-4o is now neck and neck with it. Yes, there is a chance that GPT-5 will change this, but I wouldn’t bet too big on it. Bret Kinsella has a great piece on this evaluation if you want to dig deeper.

So there we have it.

Ethan Mollick put out a post on his blog this March in which he highlighted two critical points. If they don’t contradict the arguments made in his paper, they at least add nuance to them:

- “Being good at prompting is not a valuable skill for most people in the future”

- “The gap between what experienced AI users know AI can do and what inexperienced users assume is a real and growing one.”

The first point was quite important for someone running an online education platform. If AI went “promptless”, i.e. if using it became intuitive, what would happen to a school like Promptmaster?

I was arguing that prompt engineering is a nonexistent skill.

It’s just effective communication. That’s all you need to make AI work for you.

Well, I was wrong.

The AI revolution is delayed

The whole AI revolution seems to be…delayed.

The Economist recently published a piece asking “What happened to the artificial intelligence revolution?”, which highlighted a few important things.

The markets have priced in the AI revolution with over $2 TRILLION added to Big Tech market caps.

VCs started throwing money at literally everything that had AI in it.

However, there’s not much to justify this. Microsoft, which is undoubtedly winning this race, is expected to add only $10 billion in generative AI revenue this year, which is a big problem. AI eats up CapEx, and the ROI seems further away than ever.

Of course, surveys published by Microsoft and LinkedIn say 75% of knowledge workers already use AI daily, but if you look into it, you’ll see there’s more to the story.

In fact, only 5% of businesses are actively using AI. As the author puts it, “beyond America’s west coast, there is little sign AI is having much of an effect on anything.”

I’ll go even further. Do you remember the 300 million jobs thing, Goldman Sachs’ estimate of how many jobs generative AI could expose to automation? Or the claim that “AI will not take your job, but someone who uses AI will”?

The reality is that unemployment is close to an all-time low in most of the West.

So it would seem that the AI revolution is…a bit late.

What’s clear is that any business hoping to get any kind of benefit from AI needs to invest heavily in it, with unknown returns, and most companies are simply not doing that.

Now, the media jumped on it like it was candy on Halloween.

Journalists love arranging funerals for big market trends because they make for good reading.

AI is not very useful

“But what about the productivity gains?” you might ask.

The promise was that AI would make us super-duper productive. This is what Mollick et al. found, right?

Well, turns out it’s quite the opposite.

Upwork conducted a study in which 77% of workers said that AI was making them less productive.

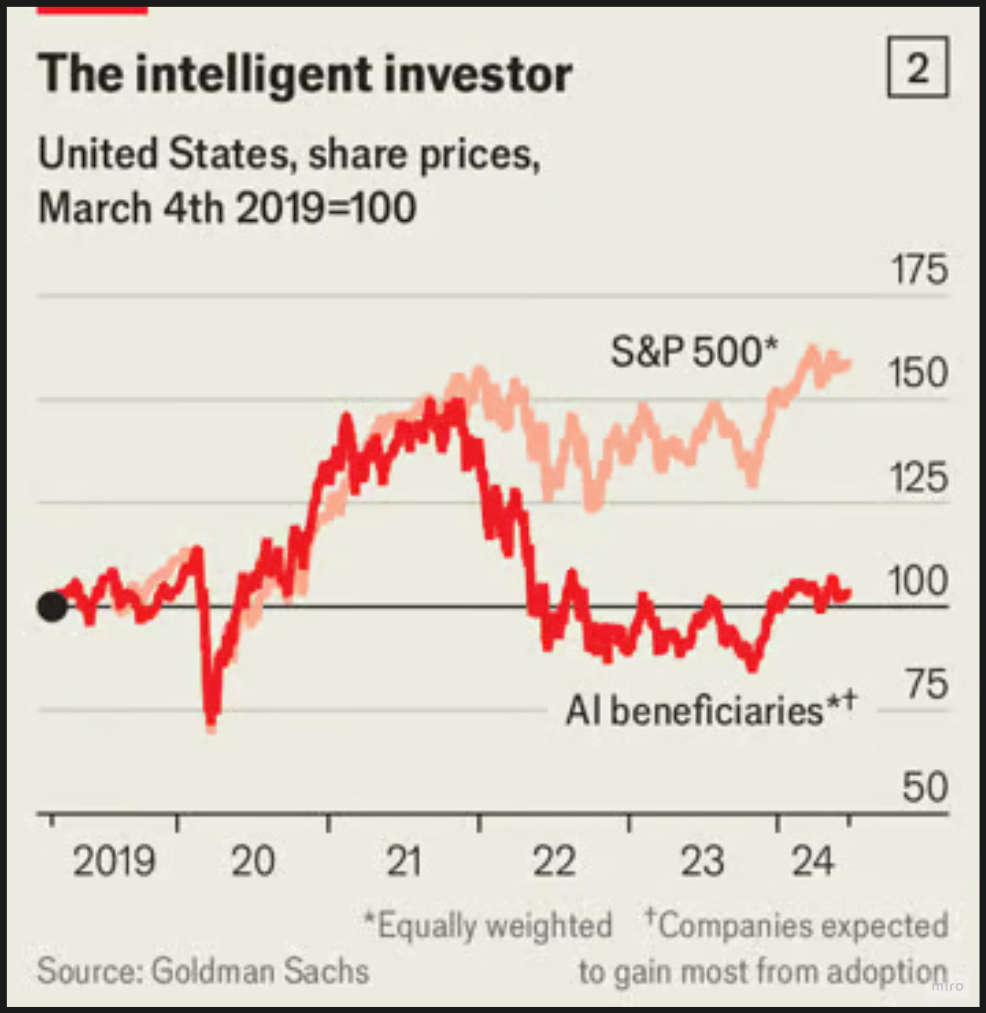

Goldman Sachs created an index tracking the companies that, in its view, would be most impacted by AI adoption. If AI were delivering on its promise, this index should be vastly outperforming the S&P 500. It isn’t.

Okay, perhaps stock prices are not a good way to measure productivity gains.

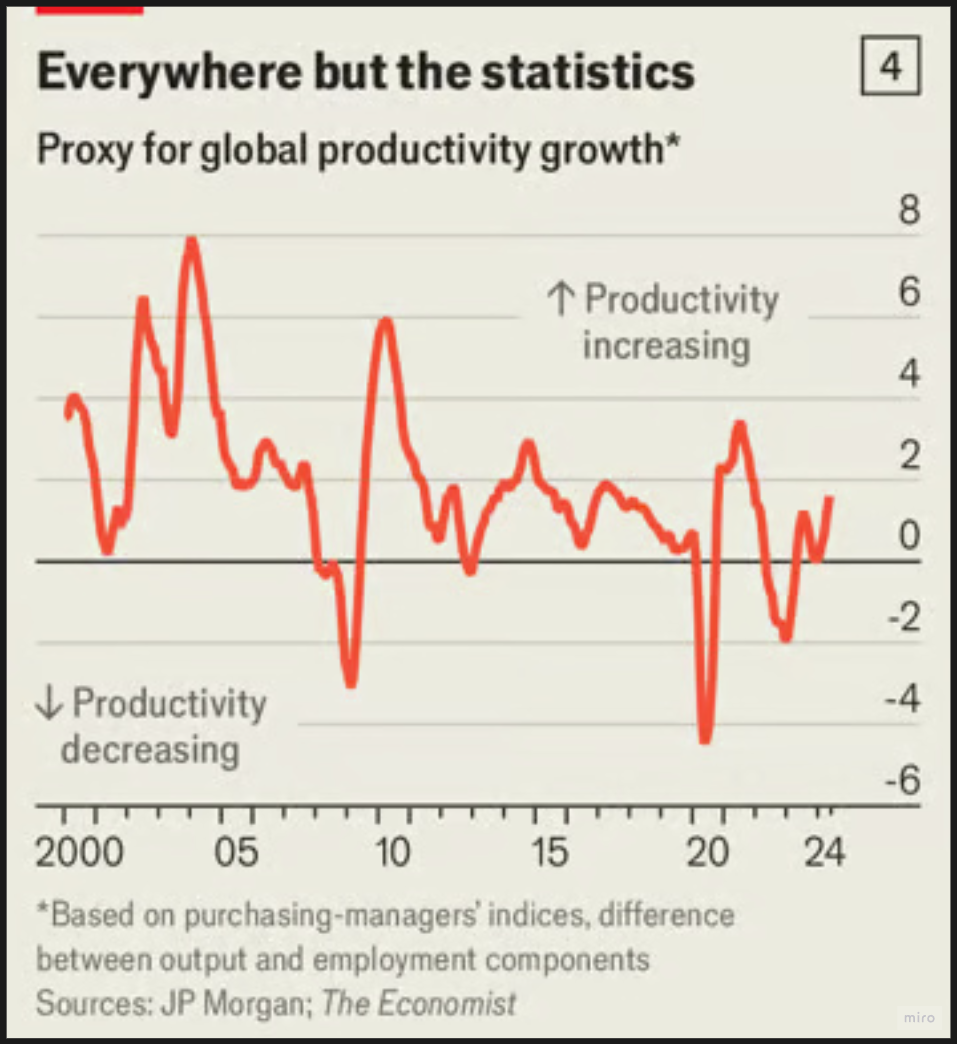

Surely productivity must be increasing if 75% of workers are already using AI!

Sorry, no.

This DIRECTLY contradicts Mollick et al.’s findings. How is that even possible?!

Do you know what it doesn’t contradict?

Economist Robert Gordon’s book “The Rise and Fall of American Growth”. Gordon documented how US productivity growth has slowed since the 1970s DESPITE exponential technological progress. Robert Solow captured this well when he famously said:

"You can see the computer age everywhere but in the productivity statistics."

This was also the key takeaway from the Upwork research. They said:

By deploying new technology—no matter how exciting and full of potential—without updating our organizational systems and models, we risk creating productivity strain: employees with yet another thing on their plate who are mentally, practically, and systematically unable to use this technology to achieve the anticipated gains. We risk another productivity paradox with generative AI if we don’t fundamentally rethink the way we work.

AI is just another software project

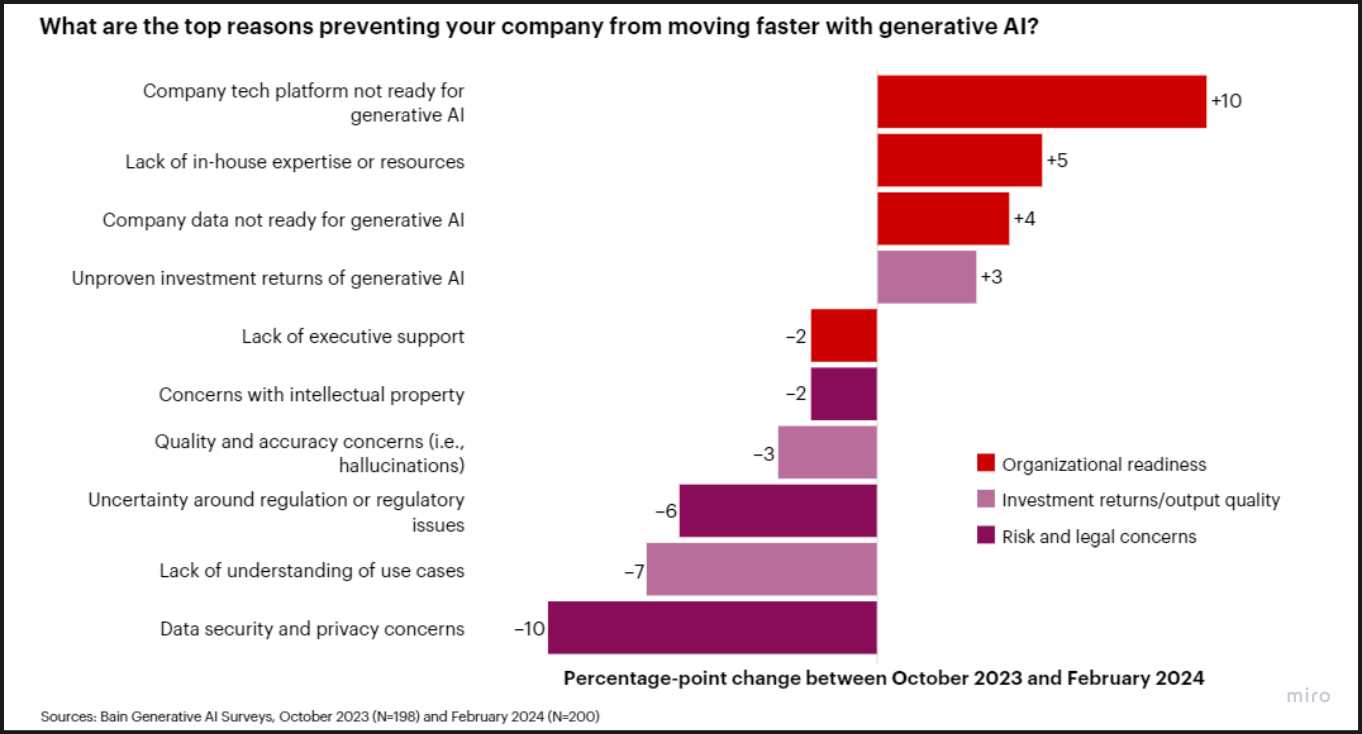

Earlier this summer, Bain published a new report on how AI is doing at uprooting everything we know about business. Long story short: not well. Ted Gioia explores this report in more depth in a piece where he argues there will be some heavy turbulence in the next 5 months. I recommend giving it a read.

Mike Wilson, Chief Investment Officer of Morgan Stanley, very recently said that

“AI hasn't really driven revenues and earnings anywhere”.

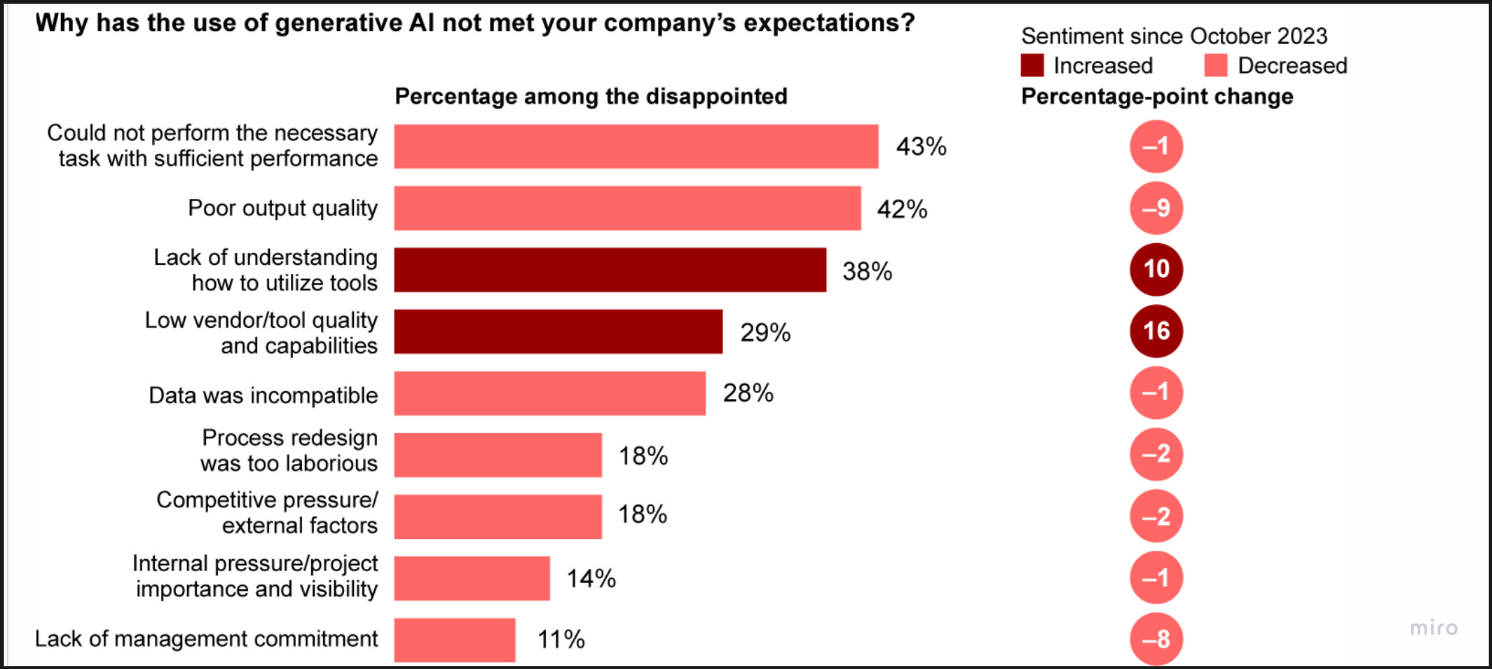

When Bain looked into the reasons why, a clearer picture emerged:

Even if the technology can do valuable things for businesses, the whole idea of AI use is just so…alien to most companies. The organization is not ready, the data is not ready.

AI cannot yet perform the necessary tasks well enough, and, even more strikingly, the existing tools are just not good enough. So teams are left to their own devices, but they have no idea how to utilize these tools.

This is not a skill problem. Note that the survey didn’t say “lack of understanding how to use tools”. It said utilize.

Do you know the definition of utilize? To make practical and effective use of something.

The problem isn’t that people have no idea how to prompt. It’s that they have no idea what to prompt FOR. They know how to use ChatGPT; they already do. The Microsoft and LinkedIn research confirmed that.

But they have no idea how to get value out of it, and if it doesn’t create value, it creates cost.

If AI is not a productivity gain, it’s a productivity strain.

The folks at Upwork were right.

Turns out that AI is not just a simple plug-and-play solution, regardless of what the media said a year ago. If you just dive in head-on with your newfound knowledge of AI, you will fail. Plain and simple.

IP, regulatory issues, hallucinations, and use cases don’t matter when the company is physically unable to plug AI solutions in. Those never even get the chance to become issues.

Integration is hard and slow and nobody knows how to do it.

Turns out AI is…just another software project.

It’s a different kind of software: non-deterministic, a bit random, and not really working in clean 1s and 0s. But it’s still just software.
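To make “non-deterministic” a bit more concrete, here is a toy sketch of how a language model can pick its next word: it turns scores into probabilities and samples from them, so the same input can produce different outputs. The vocabulary, the scores, and the helper names `softmax` and `next_word` are made up for illustration; real models work over a much larger vocabulary and have their own decoding settings.

```python
import math
import random


def softmax(scores: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def next_word(vocab: list[str], scores: list[float], temperature: float,
              rng: random.Random) -> str:
    """Pick the next word. temperature == 0 means greedy: always the top score."""
    if temperature == 0:
        return vocab[scores.index(max(scores))]
    probs = softmax(scores, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical scores a model might assign after "The capital of France is"
vocab = ["Paris", "Lyon", "Marseille"]
scores = [2.0, 1.0, 0.5]

rng = random.Random()  # unseeded, so sampled results differ from run to run
print([next_word(vocab, scores, 1.0, rng) for _ in range(5)])  # varies per run
print([next_word(vocab, scores, 0, rng) for _ in range(5)])    # always Paris
```

Same input, same scores, yet the sampled line changes between runs; only the greedy setting behaves like classic deterministic software.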

Right now, most businesses are failing because they’re doing the equivalent of rolling out Excel in 1985, when everyone is still using paper and fax machines.

It doesn’t matter how smart Excel is and how well you know all the formulas if none of your spreadsheets are in Excel and you don’t even know what you could put in Excel.

The Outlier Problem

So which is it? Are we seeing a mass destruction of jobs and a robotization of everything or are we in a hype bubble spending billions on a new fad?

Well, it’s kind of both. In Blood in the Machine, Brian Merchant wrote that “for most businesses, the measuring stick for AI output isn’t whether it’s so good it can replace a person wholesale, but if it’s good enough to justify savings on labor costs.”

So in some cases AI might be a suboptimal choice, but not from a labor-cost perspective. Merchant argues that generative AI is likely to be extremely disruptive to atomized, freelance, and precarious creative labor. We already see some of this happening, with copywriters, artists, and other creative professionals being completely disrupted by mostly mediocre AI-generated content. The key word here is atomized: the work is easy to replace with AI precisely because it is self-contained, so you don’t need to deal with integration at all.

Yes, a logo generated with Ideogram will not have the nuance of one made by a logo designer, but it’s also free and instant, and most small business owners (who employ most of the world) don’t care about good design anyway.

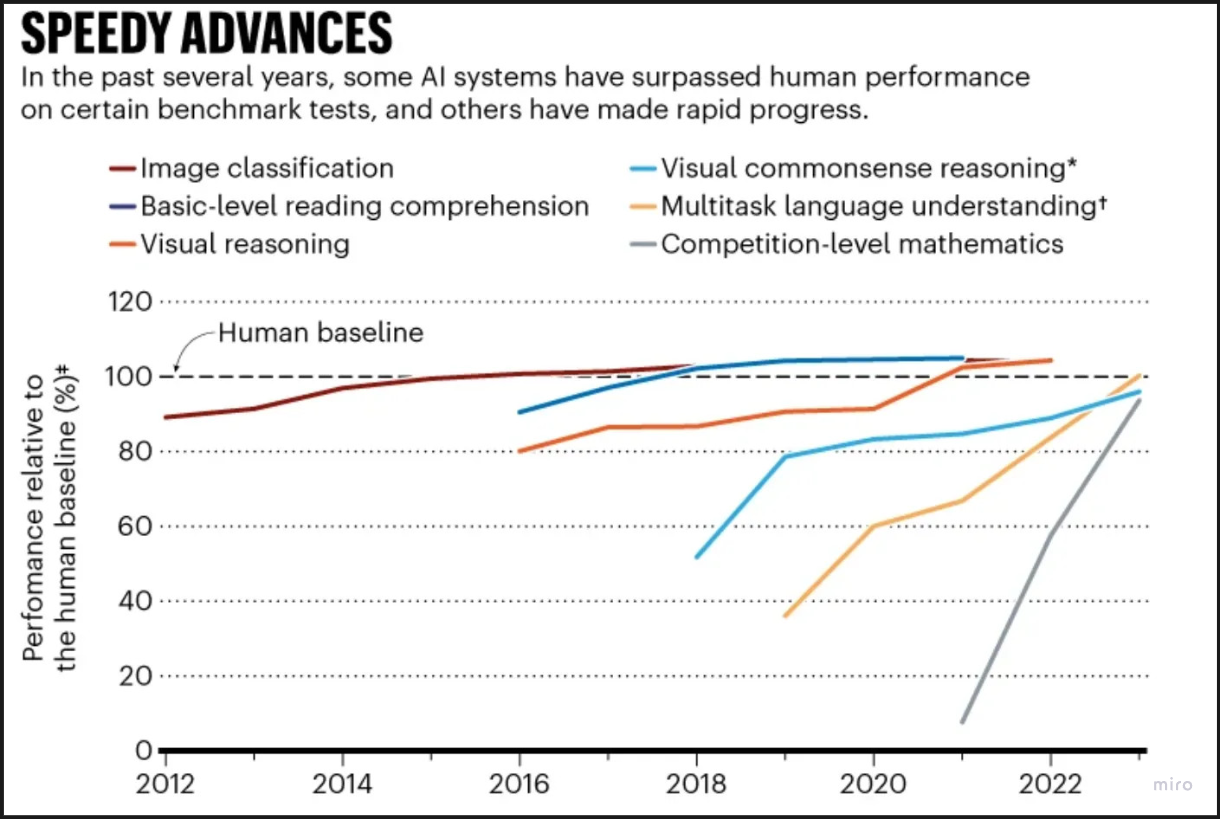

“AI now beats humans at basic tasks,” says Stanford. You don’t need AI to do the whole creative process; just use AI to do the basic things.

However, as Melanie Mitchell points out, AI surpassing humans on a benchmark that is named after a general ability is not the same as AI surpassing humans on that general ability.

This explains the seemingly paradoxical finding in Mollick’s research that people could do their jobs faster and better if they learned prompting. The study didn’t actually evaluate people’s real jobs. The researchers created a set of tasks designed to emulate the work process and then measured how people scored on them.

However, when it comes to actual productive work, where you need to create value in context, most people report the exact opposite: that they’re slower and worse.

Of course, one of the big promises of AI was that it would be a great equalizer: that it would fill in the gaps for you.

That’s the generative part of generative AI, right?

Oh yes, it can fill in the gaps all right.

With a bunch of nonsense.

AI lacks common sense

One of the biggest problems with AI is that it lacks common sense.

Just like us humans, it uses shortcuts (similar to human heuristics) to infer insights from data based on what it learned during training, but those shortcuts are never really checked against common sense.

One study found that an AI system which had successfully classified malignant skin cancers from photographs was using the presence of a ruler in the images as an important cue (photos of nonmalignant tumors were less likely to include rulers).

This is why Daniel Jeffries argues that LLMs are much smarter than you and dumber than your cat.

I’ve been arguing this for a long time: by the oft-quoted figure, only 7% of human communication is verbal, yet we tend to discount how much context the other 93% provides.

Humans are terrible at providing context.

However, LLMs (and generative AI in general) do not have the common sense we rely on to fill in missing context on our own. Anything that falls outside their training data is bound to be problematic.

Earlier this year Califano et al conducted a study to see if people would trust an AI chef. Turns out that people tend to view AI-generated recipes with skepticism, particularly when they carry the label “AI-generated”.

We are still in the uncanny valley and will remain there until AI gains common sense.

This is why there is a huge gap between the capabilities of frontier models (which are amazing) and the reality of how impossible it is for most people to get value out of them.

It’s not because you don’t know prompt engineering.

It’s because we’re expecting LLMs to act as open-ended agents that can take over a task just like an average colleague could. A colleague who lacks context asks questions, follows up, makes decisions, and logs them.

In other words, they have agency.

It doesn’t matter how good you are at prompting if the LLM you’re prompting doesn’t know jack shit about the context it’s operating in. In some cases you can work around that by giving it the context yourself, but then you just end up spending more time in front of the computer.

This is what the entire industry is trying to work towards.

Open-ended agents don’t necessarily mean AGI, but they’re close.

However, all we currently have are narrow agents that operate within a closed loop of inputs, actions, and outputs. Assuming the right systems are set up, you can heavily compartmentalize your work and automate some of it with duct tape, glue code, or (my personal favorite) no-code tools.

Even then, narrow agents still lack basic common sense, but at least their context is managed.
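Here is a minimal sketch of what such a narrow agent could look like, assuming a household-chores setting: an input comes in, a model proposes one action from a fixed set, and plain rules decide whether to act or to hand the item, with its context, to a human. `fake_model` is a hypothetical stand-in for an LLM call (here it is just keyword matching), and every name and threshold is made up for illustration.

```python
from dataclasses import dataclass

# The closed loop: the only actions this agent is ever allowed to take.
ALLOWED_ACTIONS = {"add_to_shopping_list", "add_calendar_event", "ignore"}
CONFIDENCE_THRESHOLD = 0.8  # hypothetical cut-off; tune it for your own workflow


@dataclass
class Proposal:
    action: str
    confidence: float


def fake_model(message: str) -> Proposal:
    """Hypothetical stand-in for an LLM call; real code would call a model API."""
    text = message.lower()
    if "buy" in text or "we're out of" in text:
        return Proposal("add_to_shopping_list", 0.9)
    if "appointment" in text or "meeting" in text:
        return Proposal("add_calendar_event", 0.85)
    return Proposal("unknown", 0.3)


def handle(message: str) -> dict:
    """Run one input through the loop: propose, check against rules, act or escalate."""
    proposal = fake_model(message)
    in_bounds = proposal.action in ALLOWED_ACTIONS
    confident = proposal.confidence >= CONFIDENCE_THRESHOLD
    if in_bounds and confident:
        return {"status": "done", "action": proposal.action, "input": message}
    # Outlier: hand it to a human together with the context the agent had.
    return {
        "status": "needs_human",
        "proposed": proposal.action,
        "confidence": proposal.confidence,
        "input": message,
    }


for msg in ["We're out of milk",
            "Dentist appointment on Friday",
            "The boiler is making a weird noise"]:
    print(handle(msg))
```

Keeping the allowed actions in a fixed set is the “managed context” part: anything the model proposes outside that set, or with low confidence, is treated as an outlier and passed on rather than executed.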

Open-ended agents are hard

Gary Marcus is very vocal about this. The key is how you handle outliers. If you go on holiday and hand some tasks over to your colleagues while you’re gone, you expect them to act sensibly even when they run into the unexpected. Even if they have to deal with something you didn’t discuss.

Even if they have to manage outliers.

AI is terrible at managing outliers.

This is why ChatGPT messes up simple requests, from counting the number of r’s in the word “strawberry” to identifying subtle changes to riddles.

It’s not in the training data, so it cannot deal with it. You want general, abstract rules to be applied, and those are beyond the reach of the current state of generative AI.
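For contrast, here is the strawberry question answered by a tiny symbolic program. It applies one general rule (compare every character against the target letter), so a word it has never “seen”, or a subtly altered spelling, is handled exactly like a familiar one. This only illustrates the rule-based side of the argument; it says nothing about how an LLM processes the same question internally.

```python
def count_letter(word: str, letter: str) -> int:
    """Count a letter by applying the same rule to every character."""
    target = letter.lower()
    return sum(1 for ch in word.lower() if ch == target)


print(count_letter("strawberry", "r"))    # 3
print(count_letter("strawberrrry", "r"))  # 5: the altered spelling is no harder
```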

As Marcus argues, the problem becomes paramount when you try to build something like a driverless car. In his words:

“You don’t want your decisions about whether to run into a tractor trailer to depend on whether you have seen a tractor trailer in that particular orientation before; you want a general, abstract rule (don’t run into big things) that can be extended to wide range of cases, both familiar and unfamiliar. Neural networks don’t have a good way of acquiring sufficiently general abstractions.”

Marcus published an article in the 90s on how children learn the past tense in English.

I think this might be the key to understanding where and how to find the value in AI for our lives.

Here’s what he found:

In essence, we were arguing that even in the simple microcosm of language that is the English past tense system, the brain was a neurosymbolic hybrid: part neural network (for the irregular verbs) part symbolic system (for the regular verbs, including unfamiliar outliers). Symbolic systems have always been good for outliers; neural networks have always struggled with them. For 30 years, this has been a constant.
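The past-tense finding maps neatly onto a few lines of code. In this toy sketch, a small lookup table stands in for the memorized, neural-network side (irregular verbs), and a general rule stands in for the symbolic side (add “-ed”), which is what lets it handle verbs it has never seen. The table and the simplified spelling rules are assumptions for illustration, not Marcus’s actual model.

```python
# Memorized exceptions: a stand-in for the "neural" side that learns irregulars by exposure.
IRREGULAR = {"go": "went", "sing": "sang", "bring": "brought", "eat": "ate", "run": "ran"}

VOWELS = set("aeiou")


def past_tense(verb: str) -> str:
    """Check memorized irregulars first; otherwise apply the general '-ed' rule."""
    verb = verb.lower()
    if verb in IRREGULAR:
        return IRREGULAR[verb]
    # Symbolic rule, with simplified English spelling adjustments.
    if verb.endswith("e"):
        return verb + "d"                # bake -> baked
    if verb.endswith("y") and len(verb) > 1 and verb[-2] not in VOWELS:
        return verb[:-1] + "ied"         # carry -> carried
    return verb + "ed"                   # walk -> walked, and any novel verb


for v in ["walk", "sing", "carry", "bake", "florp"]:
    print(v, "->", past_tense(v))
```

The made-up verb “florp” never appears in the table, yet the rule still produces “florped”. The spelling rules are deliberately simplified: there is no consonant doubling, so a verb like “stop” would come out wrong.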

Symbolic systems are the solution. AI still struggles with them, so humans need to intervene. Humans and AI need to work together as a neurosymbolic hybrid, where the neural network is governed by symbolic systems.

Do you know what lives inside a symbolic system, where all you have to do is just follow some abstract, general rules but you can go crazy as long as you stay within those boundaries?

Creativity. Innovation. Problem solving. The ingenuity of humans.

Current frontier models can be incredible tools that unlock massive productivity gains, but that won’t happen through prompt engineering and studying AI.

It will be done by changing the way we think about work and changing the way we solve problems.

This is also what I found during my time teaching people: those who got the most out of AI were great at compartmentalizing problems, or, as Oguz A. Acar put it, “formulating problems”.

But that’s not a new thing is it?

Elon Musk famously said that

Your income is directly proportionate to the complexity of the problems you solve.

Use narrow agents to solve problems

This means that AI is simply not there yet.

The neurosymbolic hybridization of machine learning is just not there yet.

Without this, we are unlikely to have open-ended agents that can mimic AGI.

This means that all we have for now are narrow agents designed around compartmentalized workflows, so that context passes seamlessly between the automated and human parts of a workflow.

We will not have an AI revolution anytime soon.

Yes, GPT-5 will come, then GPT-6 and many other frontier models, and they will bring incredible new capabilities that were unfathomable yesterday.

But it’s not an unstoppable wave that you need to adapt yourself to or else.

Zapier co-founder Mike Knoop recently said:

AGI progress has stalled out and scaling compute will not help.

Knoop and Marcus seem to agree about the core problem of AI. It’s not compute. It’s handling outliers. It’s a design flaw of current machine learning. You need a human there.

So there are two kinds of people: Those who can take a complex problem, compartmentalize it, and apply AI-powered narrow agents to solve the problem, and those who ask questions like “Can AI do X for me?”.

The future is not prompt engineering. It’s your ability to compartmentalize problems.

Here’s a story from Acar’s post in HBR:

Consider Doug Dietz, an innovation architect at GE Healthcare, whose main responsibility was designing state-of-the-art MRI scanners. During a hospital visit, he saw a terrified child awaiting an MRI scan and discovered that a staggering 80% of children needed sedation to cope with the intimidating experience. This revelation prompted him to reframe the problem: “How can we turn the daunting MRI experience into an exciting adventure for kids?” This fresh angle led to the development of the GE Adventure Series, which dramatically lowered pediatric sedation rates to a mere 15%, increased patient satisfaction scores by 90%, and improved machine efficiency.

The story of Dietz is a fundamentally human one.

It also holds the keys to successfully applying AI in our lives.

Use your human brain to compartmentalize a problem and to reframe the situation again and again until you find an angle that works.

Then build narrow agents to make it work.

What’s next?

So there we have it. The core assumptions that I think made Promptmaster successful have been falsified, and I could not continue charging people to teach them how to prompt.

When everyone was coming to us asking us to teach them prompt engineering, it felt safe to say that the learning objective was for people to know how to prompt well.

But that’s simply not true. After talking to hundreds of people, I realized that the learning objective is never the acquisition of knowledge or skill.

I have the unique skill of being able to breathe out of my left eye socket. I learned this trick when I was a kid. It’s not useful at all unless I can utilize it (I cannot).

The ultimate objective is always to spend more time with family, friends, your children and doing human things that make you happy.

So I decided to quit and start this newsletter.

There is a lot to teach but I don’t want to charge people for learning from me. Instead, I want to build stuff and share the stuff I build.

I have a motto that I always struggled with during my time at Promptmaster, because it felt antithetical to running a school. Now I can finally embody it fully:

My knowledge is free, my time is money.

If it looks like I’m turning my back on my old business, I’m not. Promptmaster is a phenomenal business with a fiercely loyal community.

Dave is probably the best person to make the company a success. He is a phenomenal teacher, which is what this business needs, because the problem Promptmaster needs to solve has changed.

It’s not just about how to teach, but also what to teach. In this pursuit, I have become a bottleneck, because what really fulfils me is building, but Promptmaster needs full, unwavering focus on teaching.

Dave now has full, unrestricted access to his zone of genius and can truly outteach everyone. If you want to gain the skills to make sense of AI technology as a non-technical person, you can watch everything on YouTube for free, or you can learn everything you need to know in a tenth of the time at Promptmaster, so I do recommend you check it out.

Meanwhile, here on Lumberjack, I will build things and write about them. I will try to compartmentalize the problems I see in my life, deconstruct them, and then solve them using AI and no-code.

Then I will tell you how I solved them and share the complete solution. I am slowly making my family’s life simpler and easier by integrating AI and automation into it. One compartmentalized problem at a time.

There are also a lot of things I think about in this field, so I’ll jot those down too.

The current problem I’m trying to solve is to make myself less forgetful by building a set of narrow agents that help me manage our household with my wife. I have ADHD and I’m forgetful. Using my skills to build a solution is rewarding. I’ll share the code, the scenarios, and everything once I’m done.

Like a lumberjack felling a tree, each swing of the axe brings me closer to achieving my goals.

If you want me to swing my axe for problems in your business, you can hire me.

Also, if you liked this issue, consider subscribing or pledging to support my work. I will share everything I do here on the blog and I couldn’t do it without your support.

Pledging even just $5/month will help me do this full-time and share everything.
