TL;DR

Your n8n, Zapier, and Make workflows fail because of a fundamental context management problem. These tools force you to manually manage state, schema validation, and error handling across dozens of nodes—turning simple 5-step ideas into brittle spaghetti monsters. The issue isn't your skills or the tools' bugs; it's their architectural foundation. This article explains why workflow automation is inherently fragile and what reliable automation actually requires.

Your n8n workflows break randomly. Your Zapier automations fail silently for days. You've burned entire weekends debugging only to find the same issues resurface.

The problem isn't your skills, the tool's bugs, or your integrations. It's the architectural foundation these tools are built on.

Current workflow automation tools have a context management problem, and no amount of error handling will fix it. This post explains the root cause non-technical founders and operations people need to understand.

You won't become a developer reading this, but you'll understand why your automations are brittle and what reliable automation architecture actually looks like.


The Silent Collapse of No-Code

It's the middle of the night. You were just about to go to bed when your phone lights up with a Slack message. Your AE is panicking because a $2m enterprise deal has been sitting in the "Qualified" stage for a week, and the client just reached out, clearly confused by the radio silence.

It should have auto-progressed to "Proposal Sent" when she uploaded the signed NDA. You built an n8n workflow to handle that.

She manually moved it forward an hour ago, but now she's asking the question that makes your stomach drop: "How many other deals are stuck like this?"

You roll out of bed, open your laptop, and check the logs.

The workflow ran. Successfully. Twenty-three times in the past three days, in fact. Green checkmarks all the way down. But somewhere between Salesforce and your deal progression logic, critical data just... vanished. The NDA upload triggered the workflow, but the stage update never happened. And because everything reported success, there were no error alerts. Nothing.

This isn't the first time. Three weeks ago, it was lead routing silently dropping high-intent leads into a black hole. You're playing whack-a-mole with invisible moles.

No-code forums are full of stories like this. One user put it like this:

"My breaking point was when a Make scenario silently failed for three days because of an API rate limit change. I only discovered it when clients started complaining. No proper alerting, no rollback capabilities, nothing. Just silent failure and data loss."

Another described it as

"playing detective with garbage logs and error messages that make no sense. I've burned entire days hunting down bugs that were just simple config issues."

The pattern is always the same. The workflow appears to work. The logs show success. But somehow, somewhere in that chain of fifteen nodes, something gets lost or corrupted, and you don't find out until it's already caused damage.

You're not crazy. Your workflows are breaking randomly. And it's not your fault.

Why are n8n workflows so brittle?

By now, you probably think you know why your workflows fail. API changes. Schema mismatches. Rate limiting. Someone mapped the wrong field.

But they're symptoms, not the disease.

Think about your last debugging session. You checked the API connections (all green). You verified the field mappings (all correct). You tested each node in isolation (all worked). But when you ran the full workflow, it still failed... sometimes.

That's because the root cause isn't in any single node. It's in how these tools handle context—the data that flows from one step to another.

When a lead fills out your form, that's your starting context: name, email, company.

- Node 1 (Enrich): Adds company_size and industry.

- Node 2 (Score): Adds lead_score.

- Node 3 (Route): Adds owner_id.

By the time that lead has passed through a dozen more nodes like these and reaches your CRM, the context may have grown from 3 fields to 30. In tools like n8n, Zapier, and Make, this context flows through your workflow like a game of telephone.

Remember that? One person whispers a message to the next, and by the time it reaches the last person, "I like apple pie" has become "Buy a laptop."

That's what's happening to your data.
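To make the telephone game concrete, here's a toy Python sketch of the Enrich, Score, Route chain above. Everything in it is hypothetical: the node functions, the field names, and the scenario where the enrichment API quietly renames company to company_name. The point is that every node "succeeds" while the lead still ends up with the wrong owner.

```python
# Toy model of context flowing through three workflow nodes.
# Node names, fields, and the "renamed field" scenario are hypothetical.

def enrich(ctx: dict) -> dict:
    # Simulates an enrichment API whose new version renamed "company"
    # to "company_name": the old key disappears, the new one is added.
    out = {k: v for k, v in ctx.items() if k != "company"}
    out["company_name"] = ctx["company"]
    out["company_size"] = 5000
    out["industry"] = "fintech"
    return out

def score(ctx: dict) -> dict:
    return {**ctx, "lead_score": 90 if ctx["company_size"] > 1000 else 40}

def route(ctx: dict) -> dict:
    # This node still reads the old key. .get() returns None instead of
    # raising, so the lead silently falls through to the default owner.
    enterprise_accounts = {"Acme Corp": "owner_enterprise"}
    owner = enterprise_accounts.get(ctx.get("company"), "owner_default")
    return {**ctx, "owner_id": owner}

lead = {"name": "Dana", "email": "dana@acme.com", "company": "Acme Corp"}
result = route(score(enrich(lead)))
print(result["owner_id"])  # owner_default, and no node raised an error
```

A high-scoring enterprise lead lands with the default owner, and the execution log shows three green checkmarks.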

According to Superblocks' guide to workflow orchestration, proper context management requires explicit dependency tracking, state management, and error handling—features that visual workflow builders often leave to the user.

The irony is that these tools can handle context, but they make you do all the work.

- Want to prevent corrupted data? You add validation nodes.

- Want to handle schema changes? You add transformation nodes.

- Want to catch errors before they cascade? You add error-handling branches.

- Want to stop two workflows from overwriting each other? You add conditional checks and wait nodes.
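Here's roughly what the first item on that list boils down to, written as a minimal Python sketch rather than a visual node. The schema and the ContextValidationError name are made up for illustration; the idea is simply to check the context against what downstream steps expect and fail loudly instead of passing bad data along.

```python
# Hypothetical schema for the lead context at this point in the workflow.
REQUIRED_FIELDS = {"email": str, "company": str, "lead_score": int}

class ContextValidationError(Exception):
    pass

def validate(ctx: dict, schema: dict = REQUIRED_FIELDS) -> dict:
    """Return ctx unchanged if it matches the schema, otherwise raise."""
    problems = []
    for field, expected_type in schema.items():
        if field not in ctx:
            problems.append(f"missing field: {field}")
        elif not isinstance(ctx[field], expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(ctx[field]).__name__}"
            )
    if problems:
        # Raising turns a silent green checkmark into a visible, alertable failure.
        raise ContextValidationError("; ".join(problems))
    return ctx

validate({"email": "dana@acme.com", "company": "Acme Corp", "lead_score": 90})  # passes
try:
    validate({"email": "dana@acme.com", "company_name": "Acme Corp", "lead_score": "90"})
except ContextValidationError as err:
    print(err)  # missing field: company; lead_score: expected int, got str
```

Now multiply that by every hop where the data shape can change, and you can see where the extra nodes come from.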

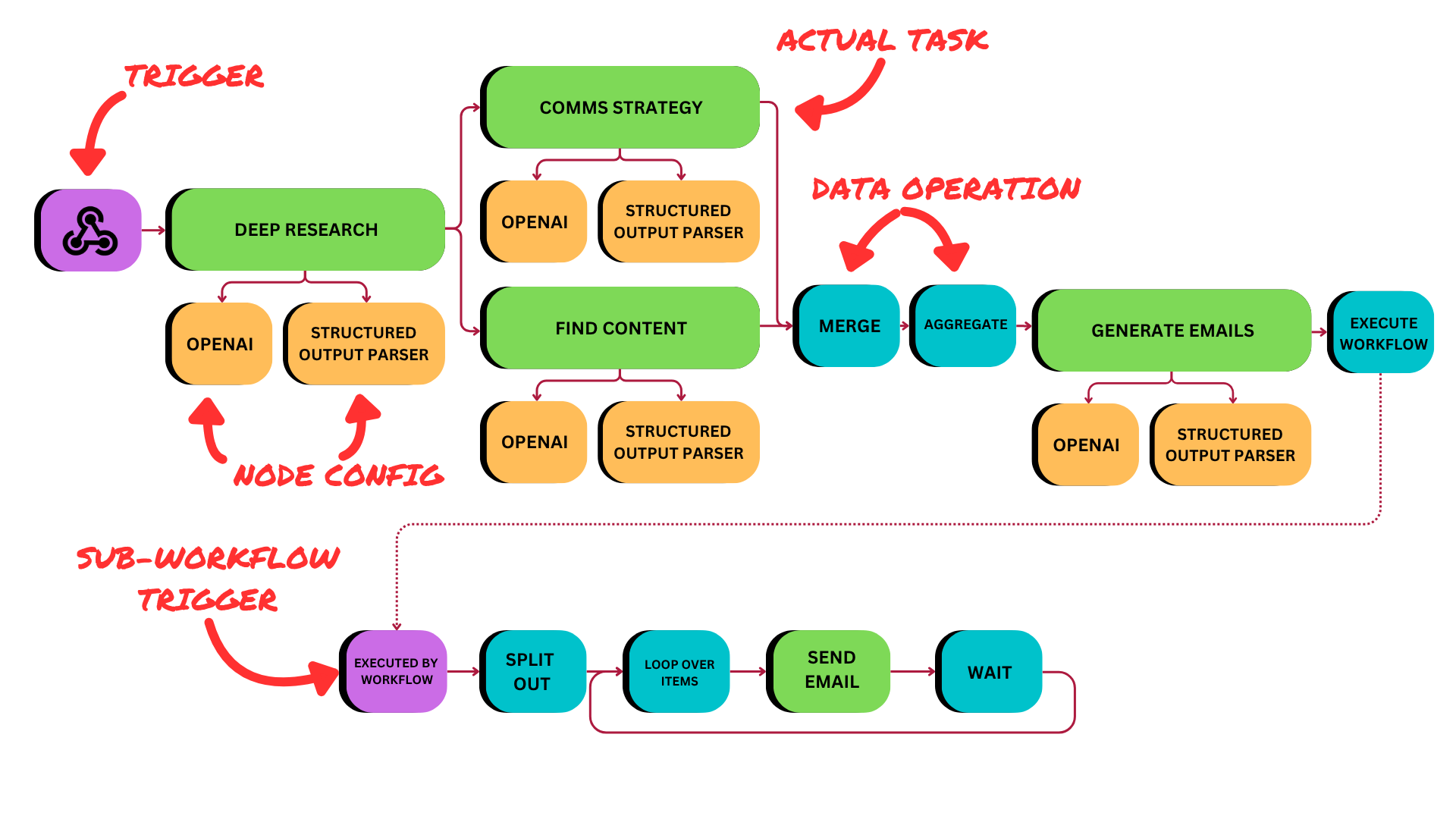

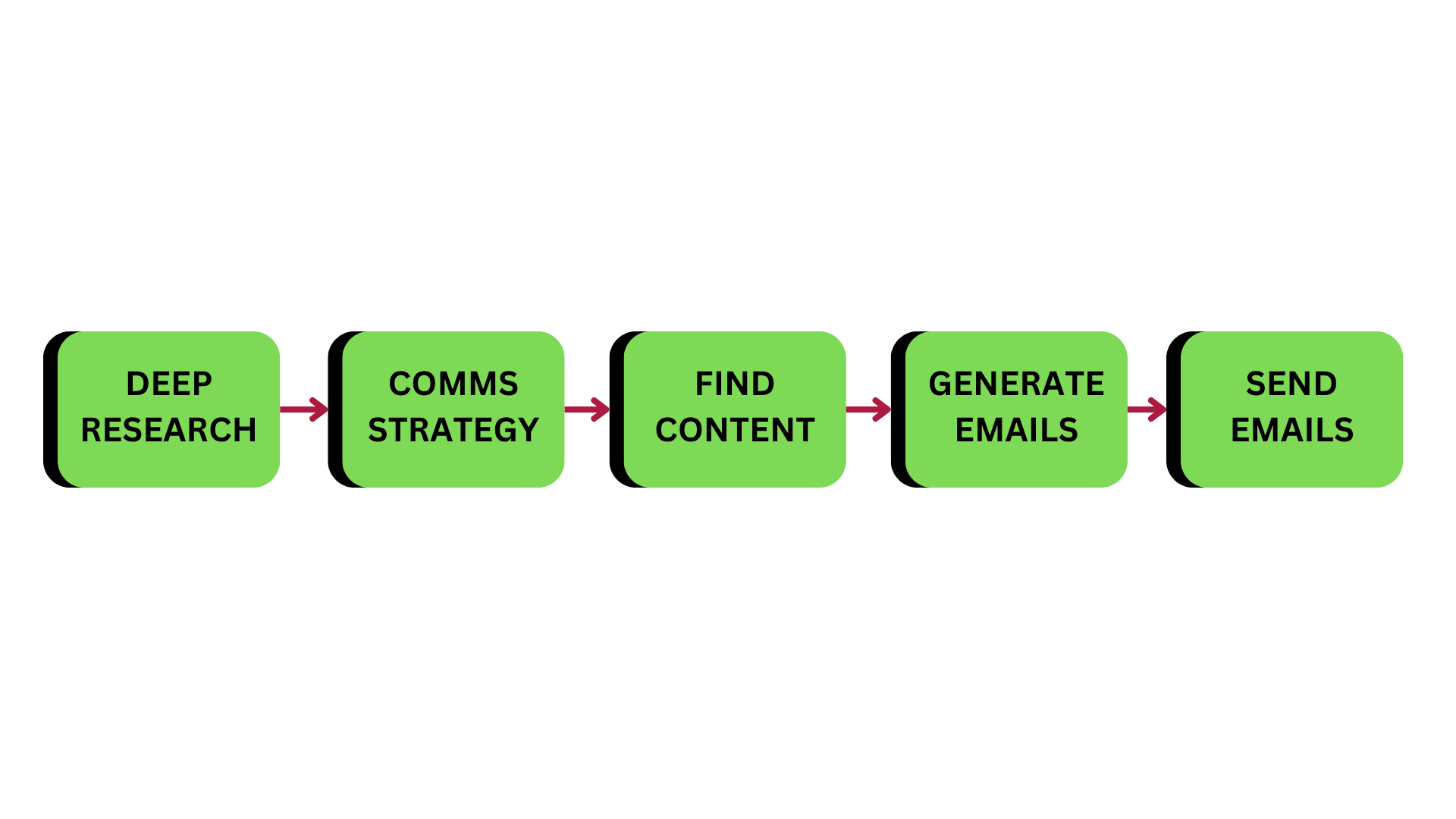

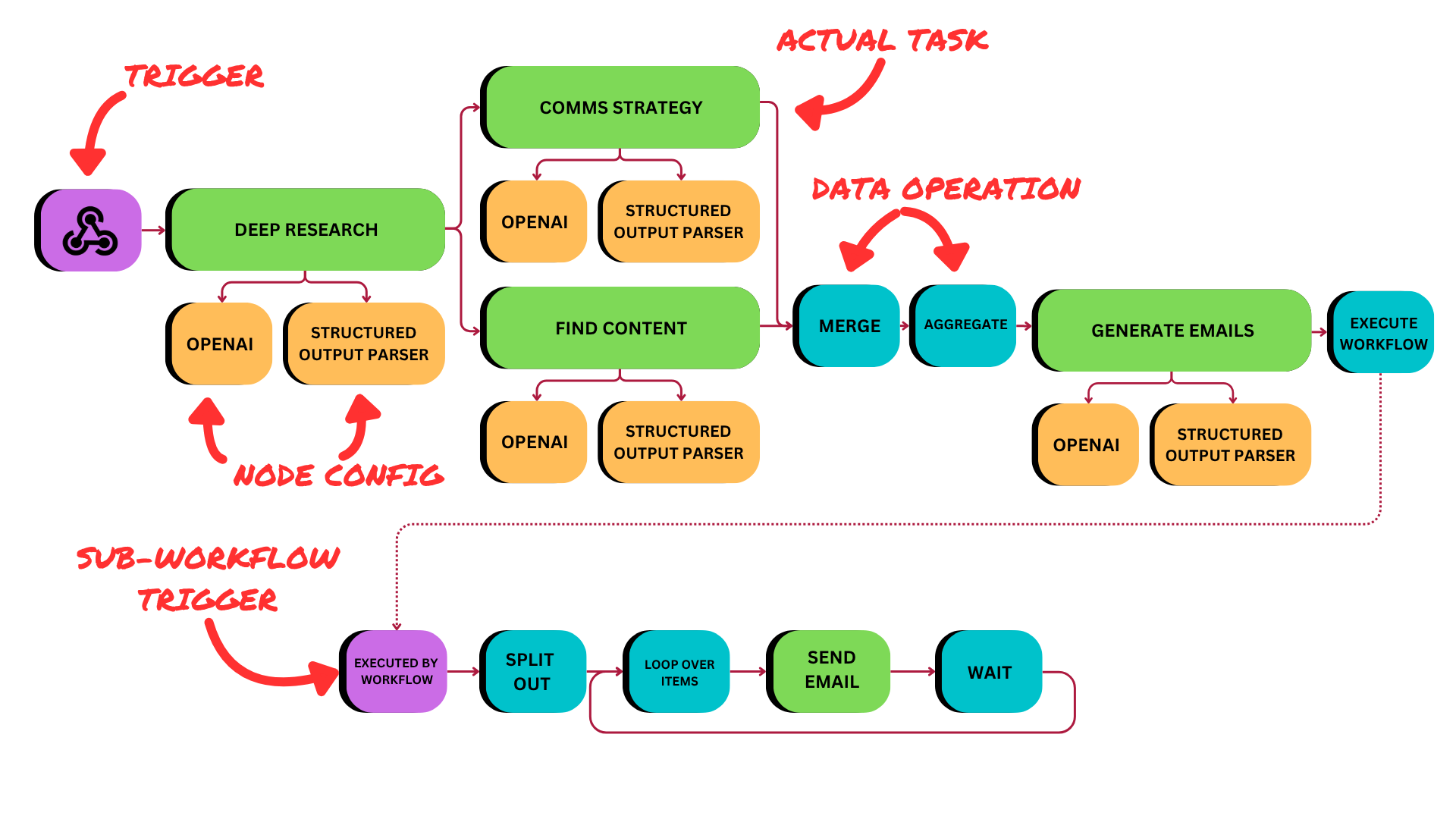

This is why your 5-step idea ends up as a sprawling monster of a workflow.

All those extra steps are just plumbing. You are manually managing the context. Then you try to scale it, running five workflows in parallel. They all touch the same database entries, overwriting each other and creating a mess that now rules your Tuesday night.
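That overwriting has a name: the lost-update problem. Below is a minimal Python sketch using a hypothetical in-memory "CRM" (the Store class and the deal-42 record are invented for illustration). Two workflows read the same deal, one moves the stage, the other adds a tag, and with blind writes the stage change simply vanishes, much like that $2m deal. The second half shows one common fix, optimistic locking with a version number, which is exactly the kind of conditional-check plumbing you'd otherwise build by hand.

```python
class VersionConflict(Exception):
    pass

class Store:
    """Hypothetical in-memory CRM, for illustration only."""

    def __init__(self):
        self.records = {"deal-42": {"stage": "Qualified", "tags": [], "version": 1}}

    def read(self, key):
        rec = self.records[key]
        return {**rec, "tags": list(rec["tags"])}  # each workflow gets its own copy

    def write_blind(self, key, rec):
        # Last writer wins: whatever another workflow wrote in between is lost.
        self.records[key] = rec

    def write_if_unchanged(self, key, rec, expected_version):
        # Optimistic locking: refuse the write if someone else wrote since our read.
        if self.records[key]["version"] != expected_version:
            raise VersionConflict(key)
        self.records[key] = {**rec, "version": expected_version + 1}

# Two workflows (stage progression and tagging) touch the same deal at once.
blind_store = Store()
a = blind_store.read("deal-42")
b = blind_store.read("deal-42")
a["stage"] = "Proposal Sent"
b["tags"].append("nda-signed")
blind_store.write_blind("deal-42", a)
blind_store.write_blind("deal-42", b)  # b still carries the old stage
lost_stage = blind_store.records["deal-42"]["stage"]
print(lost_stage)  # Qualified: workflow A's update silently vanished

# Same race, but the stale write is rejected and retried on fresh data.
safe_store = Store()
a = safe_store.read("deal-42")
b = safe_store.read("deal-42")
a["stage"] = "Proposal Sent"
b["tags"].append("nda-signed")
safe_store.write_if_unchanged("deal-42", a, a["version"])
try:
    safe_store.write_if_unchanged("deal-42", b, b["version"])
except VersionConflict:
    b = safe_store.read("deal-42")  # re-read, re-apply the change, write again
    b["tags"].append("nda-signed")
    safe_store.write_if_unchanged("deal-42", b, b["version"])
print(safe_store.records["deal-42"])
# {'stage': 'Proposal Sent', 'tags': ['nda-signed'], 'version': 3}
```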


This isn't a user error. It's a design flaw. Zapier, Make, and n8n all grew out of a world where automation meant something simple:

Trigger → Action → Done

They were never designed for complex, let alone stateful, workflows that touch shared databases or call LLMs.

They treat context as an afterthought, so you end up manually managing:

- State: Keeping track of what happened.

- Schema: Keeping data shapes aligned.

- Concurrency: Preventing race conditions.

- Validation: Catching garbage before it spreads.

That's not "no-code." That's ops-engineer cosplay.

The problem with your n8n workflow is not that you built the wrong plumbing.

It's that you had to build the plumbing in the first place.

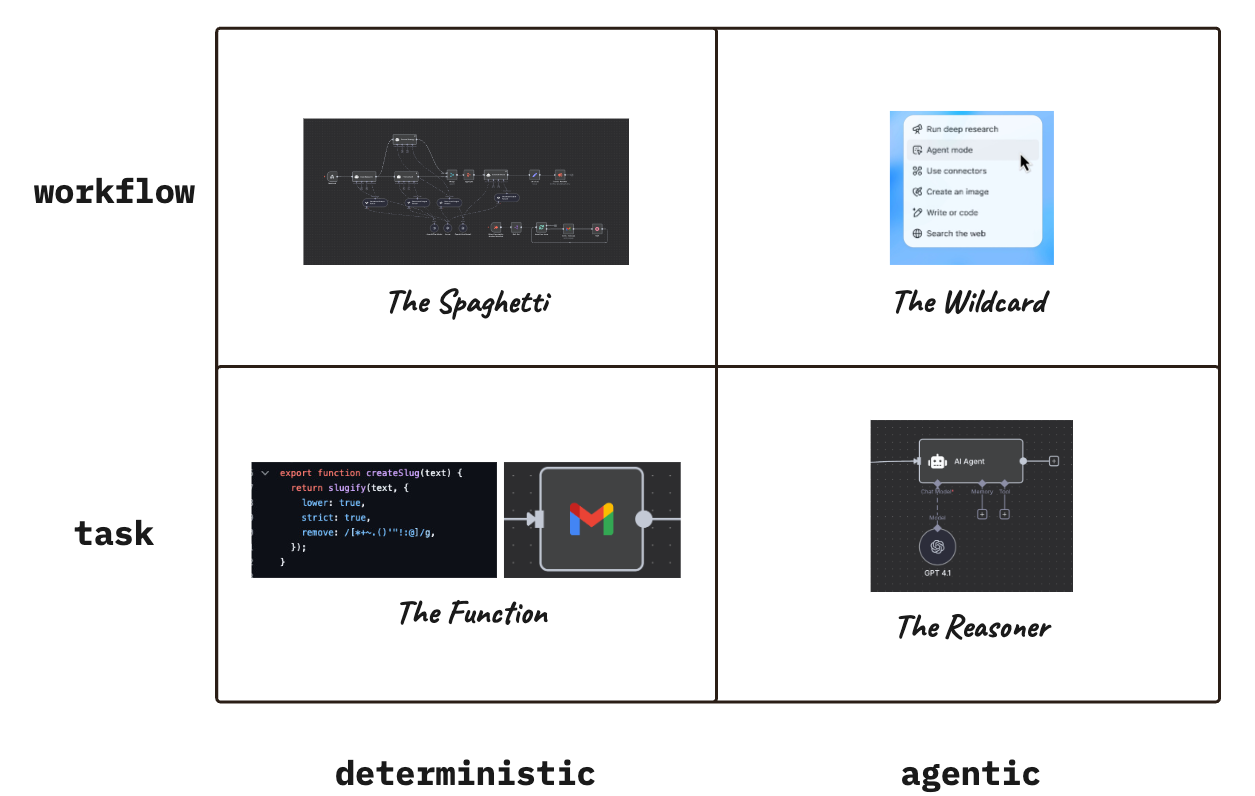

The Two Axes of Automation

So, how do we escape this "ops-engineer cosplay" and build something reliable?

First, we need to understand the field. Most debates about automation are actually about two separate, crucial axes:

- Scope: Are you automating a single task or many tasks?

- Behavior: Is that automation deterministic (always the same) or agentic (can 'think' and adapt)?

Put them together and you get a 2x2 matrix that explains most of the automation tools and problems you're dealing with today.

The Function

Deterministic execution of a single task. If this, then that.

- What it is: A simple function that always does the exact same thing with the same inputs.

- Examples: A serverless function, a Python script to format text, an n8n "Gmail" node that sends an email predictably.

- The Verdict: Incredibly reliable, testable, cheap, and fast. But it has zero flexibility. If the input data is messy ("garbage in"), you get garbage out.

- Plumbing: Entirely manual. If you skip a piece of plumbing, that part simply never runs.
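For a sense of how small a "Function" is, here's a hypothetical normalize_email helper in Python. It does one thing, does it identically every time, and has no idea whether its input makes sense.

```python
def normalize_email(raw: str) -> str:
    """Deterministic single task: trim whitespace and lowercase."""
    return raw.strip().lower()

print(normalize_email("  Dana@Acme.COM "))  # dana@acme.com
# Garbage in, garbage out: it happily "normalizes" a value that isn't an email.
print(normalize_email(" N/A "))  # n/a
```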

The Reasoner

A single task handed to an agentic node (i.e., an AI Agent node running on LangChain) with some tool access. Chat completion models and reasoning models behave differently here, mostly in how many turns they take trying to complete the task.

- What it is: A single LLM step that interprets ambiguous context and produces a structured result.

- Examples: A node that says, "Summarize this call for the AE" or "Extract the intent and next step from this email thread."

- The Verdict: Great for handling ambiguity and messy human language. But its output is non-deterministic (it can vary), and it needs strong guardrails to prevent "drifting" off-task.

- Plumbing: More flexible once the prompt reaches the agent. But deciding which data, context, or prompt gets sent to the agent still requires manual plumbing.
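What do "strong guardrails" look like in practice? One minimal approach, sketched in Python below: never let the Reasoner's raw text flow downstream. Parse it, check it against a fixed set of allowed values, and reject anything else. The JSON shape, the intent list, and parse_reasoner_output are all assumptions for illustration; no actual LLM is called here.

```python
import json

# Hypothetical set of intents the downstream workflow knows how to handle.
ALLOWED_INTENTS = {"buy", "renew", "churn_risk", "support"}

def parse_reasoner_output(raw: str) -> dict:
    """Parse and check a Reasoner's raw text output; raise ValueError if it drifts."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc.msg}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    intent = data.get("intent")
    if not isinstance(intent, str) or intent not in ALLOWED_INTENTS:
        raise ValueError(f"unexpected intent: {intent!r}")
    next_step = data.get("next_step")
    if not isinstance(next_step, str) or not next_step.strip():
        raise ValueError("next_step must be a non-empty string")
    return {"intent": intent, "next_step": next_step.strip()}

print(parse_reasoner_output('{"intent": "renew", "next_step": "Send renewal quote"}'))
# {'intent': 'renew', 'next_step': 'Send renewal quote'}

for raw in ['Sure! Here is the JSON you asked for.', '{"intent": "upsell?", "next_step": "x"}']:
    try:
        parse_reasoner_output(raw)
    except ValueError as err:
        print("rejected:", err)
```

A rejected output should trigger a retry or an alert, not a quiet fallback; otherwise you've just rebuilt the silent failure one layer down.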


The Spaghetti

Multiple nodes stitched together into a deterministic workflow that runs more complex processes automatically.

- What it is: A graph of predictable steps with an explicit, hard-coded control flow. You can bolt on AI Agent nodes and LLM calls.

- Examples: This is your classic n8n, Make, or Zapier workflow without any AI. It's also used for heavy-duty data pipelines (e.g., Apache Airflow).

- The Verdict: Reliable for stable, high-volume processes. But as you know, as complexity grows, you get a "plumbing explosion" trying to manually manage state, errors, and retries.

- Plumbing: Entirely manual and brittle, and you don't get the guardrails of code-first orchestration systems. You can add AI as a bolted-on feature, but the workflow itself remains brittle.
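Here's a minimal Python sketch of the state-and-retries plumbing a Spaghetti workflow makes you hand-build: each step is retried with exponential backoff, failures are re-raised instead of reported as success, and completed steps are checkpointed so a re-run resumes rather than repeating work. The run_workflow helper, the step names, and the in-memory checkpoints dict are hypothetical; a real system would keep checkpoints in durable storage.

```python
import time

def run_workflow(steps, ctx, checkpoints, max_attempts=3, base_delay=0.01):
    """Run (name, fn) steps in order with retries and per-step checkpoints."""
    for name, step in steps:
        if name in checkpoints:
            ctx = checkpoints[name]  # finished on a previous run: reuse its output
            continue
        for attempt in range(1, max_attempts + 1):
            try:
                ctx = step(ctx)
                break
            except Exception:
                if attempt == max_attempts:
                    raise  # surface the failure instead of reporting success
                time.sleep(base_delay * 2 ** (attempt - 1))  # exponential backoff
        checkpoints[name] = ctx
    return ctx

calls = {"score": 0}

def enrich(ctx):
    return {**ctx, "company_size": 5000}

def flaky_score(ctx):
    calls["score"] += 1
    if calls["score"] < 3:
        raise ConnectionError("rate limited")  # simulated transient error
    return {**ctx, "lead_score": 90}

checkpoints = {}
steps = [("enrich", enrich), ("score", flaky_score)]
result = run_workflow(steps, {"email": "dana@acme.com"}, checkpoints)
print(result, calls["score"])
# {'email': 'dana@acme.com', 'company_size': 5000, 'lead_score': 90} 3

again = run_workflow(steps, {"email": "dana@acme.com"}, checkpoints)
print(again == result, calls["score"])  # True 3: nothing re-ran
```

This sketch retries every exception for brevity; in practice you'd only retry errors you know are transient, like rate limits or timeouts.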

According to Trantor's 2025 workflow orchestration report, the industry is shifting toward context-aware, adaptive workflows that eliminate rigid "if-then" structures.

The Wildcard

- What it is: A single, autonomous system that decides its own sequence, tools, and strategy across multiple steps. The workflow doesn't exist; the agent builds it for itself.

- Examples: Manus or ChatGPT Agent Mode, where you give it a high-level goal like "Research, plan, draft, and publish a blog post."

- The Verdict: Amazingly flexible and easy for non-technical users to start. But it's dangerously unreliable, prone to loops, can lead to massive cost blow-ups, and is almost impossible to audit.

- Plumbing: As long as it has access to the right tools (via MCP, for example), it will figure things out and you can forget the plumbing. However, you have zero control over whether it actually does what you need.
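If you do let a Wildcard loose, the bare minimum is a hard ceiling on steps and spend plus a crude loop detector. The Python sketch below assumes a hypothetical choose_action callback standing in for the LLM's "what next?" decision and a made-up flat cost per step; it doesn't make the agent reliable, it just stops it from running forever.

```python
class BudgetExceeded(Exception):
    pass

def run_agent(goal, choose_action, max_steps=10, max_cost_usd=1.00, cost_per_step=0.05):
    """Drive a hypothetical agent until it says "done" or hits a guardrail."""
    history, spent = [], 0.0
    for _ in range(max_steps):
        action = choose_action(goal, history)
        if action == "done":
            return history
        if history[-2:] == [action, action]:
            raise BudgetExceeded(f"loop detected: {action!r} repeated 3 times")
        spent += cost_per_step
        if spent > max_cost_usd:
            raise BudgetExceeded(f"cost cap hit at ${spent:.2f}")
        history.append(action)
    raise BudgetExceeded(f"step cap of {max_steps} reached")

def stuck_agent(goal, history):
    return "search_web"  # keeps doing the same thing forever

try:
    run_agent("Research, plan, draft, and publish a blog post", stuck_agent)
except BudgetExceeded as err:
    print(err)  # loop detected: 'search_web' repeated 3 times

plan = iter(["research", "outline", "draft", "publish", "done"])
finished = run_agent("Research, plan, draft, and publish a blog post", lambda g, h: next(plan))
print(finished)  # ['research', 'outline', 'draft', 'publish']
```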


Why workflows break

So why did that $2m deal get stuck? Not because you "misconfigured a node".

Workflows like that one fail because these tools push you into building Spaghetti (Many-Deterministic) and force you to manage all the state and plumbing yourself.

Then, they ask you to bolt on Reasoners (the "AI Agent" node) without any real isolation.

This means you inherit both sets of problems:

- The State Problem (spaghetti): The "game of telephone" where data gets corrupted as it's passed through 20 nodes.

- The Alignment Problem (reasoner): The "fuzzy" AI node that works 95% of the time but silently fails on an edge case with no warning.

You're left debugging a system that is both brittle and unpredictable. Naturally, autonomous agents start to look very attractive at this point, but they are so unpredictable that some AI researchers argue they shouldn't be deployed at all.

...so what now?

Well, the AI industry is hell-bent on convincing everyone that their super-duper autonomous agents will work somehow. Ever since I launched this blog, I've been arguing that it's unlikely because AI is kinda shit for that.

Instead, I proposed the idea of narrow, specialized agents that do one thing well and nothing else. But deploying them requires us to completely rethink how we approach our own work, which opens an even bigger can of worms.

So instead, let's just run a thousand random pilots, buy prompt engineering workshops from some local experts, buy a Copilot license, put out a press release claiming we're now "AI-first" and collect the bonus at year-end.

Then, by the time the market realizes it's a shitshow, we won't care anyway: we will have convinced ourselves that we've done something, and the entire fiasco will be buried under the cozy and debilitating layers of business as usual.

I don't think that GPT-6-7 (!) or whatever will solve this. I also don't think Manus or any other agent will solve this.

Not unless we admit something: if context management is the real bottleneck in AI automation, then we're fucked.

Because we have no clue how to make it work either.

Frequently Asked Questions

Why do my n8n workflows fail randomly?

n8n workflows fail due to context management issues. As data flows through multiple nodes, it can get corrupted, lost, or transformed incorrectly—like a game of telephone. The tools force you to manually manage state, schema validation, and error handling, which leads to brittle automations that break unpredictably.

What's the difference between n8n, Zapier, and Make?

All three are "many-deterministic" workflow builders that suffer from the same architectural limitation: they make you manually manage context across nodes. n8n is open-source and self-hostable, Zapier is the most user-friendly SaaS option, and Make offers the most advanced logic capabilities—but all require extensive "plumbing" for complex workflows.

Should I use AI agents instead of workflow automation?

Fully autonomous AI agents (like ChatGPT Agent Mode or Manus) are unpredictable, prone to infinite loops, and can cause massive cost overruns. A better approach is using "Reasoner" nodes (single AI steps with specific tasks) within deterministic workflows, giving you flexibility without losing control.

How do I prevent silent failures in workflow automation?

Add explicit validation nodes between critical steps, implement comprehensive error handling with alerts (Slack, email, SMS), use monitoring tools to track execution history, and build "checkpoint" nodes that verify data integrity before proceeding. Also, use idempotent operations (safe to run multiple times) to prevent duplicate actions.
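Idempotency is the least intuitive item on that list, so here's a minimal Python sketch. It derives a stable key from the action and its inputs and skips the side effect if that key was already processed, so a retry or a duplicate trigger can't send the same email twice. The sent_keys set, the outbox list, and send_email_once are hypothetical stand-ins for a durable table and a real email call.

```python
import hashlib
import json

sent_keys = set()  # stand-in for a durable "processed keys" table
outbox = []        # stand-in for the real side effect (e.g. an email API)

def idempotency_key(action: str, payload: dict) -> str:
    # sort_keys makes the key independent of dict insertion order.
    blob = json.dumps({"action": action, "payload": payload}, sort_keys=True)
    return hashlib.sha256(blob.encode()).hexdigest()

def send_email_once(to: str, template: str) -> bool:
    key = idempotency_key("send_email", {"to": to, "template": template})
    if key in sent_keys:
        return False  # already done: the duplicate run is harmless
    outbox.append((to, template))
    sent_keys.add(key)
    return True

first = send_email_once("dana@acme.com", "proposal")
second = send_email_once("dana@acme.com", "proposal")
print(first, second, len(outbox))  # True False 1
```

In a real system, checking and recording the key should happen atomically (for example via a unique constraint in your database); otherwise a crash between the send and the record can still produce a duplicate.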

What's the alternative to visual workflow builders?

Code-first orchestration systems like Apache Airflow, Dagster, or Temporal offer built-in state management, dependency tracking, and error recovery. They require programming knowledge but shrink the "plumbing explosion" problem by handling much of the state tracking, retries, and dependency ordering for you. For non-technical users, specialized narrow AI agents designed for single tasks may be the future.

Last updated: February 11, 2026