Why Your Timeline is Having a Different AI Conversation Than You Are

Last week, I watched a Fortune 500 CEO demo their "AI transformation" by copy-pasting ChatGPT outputs into PowerPoint.

The same day, a 19-year-old demonstrated a system that monitors his Stripe webhooks, writes SQL patches when schema drift happens, and texts him only when confidence drops below 94%.

They both said they were "using AI in production."

They were both right.

They were also living in completely different universes.

We live in parallel universes

We don't share one "AI world." We share headlines, X threads, and LinkedIn hot takes—but we live in parallel universes defined by our level of use.

What looks like revolutionary magic to one person is embarrassing slop to another. Not because they're snobs or luddites, but because they're standing on different floors of the same building, looking at different horizons.

The disconnect has nothing to do with age or budget and everything to do with how many walls you've punched through to get where you are.

Below is my guide to navigating these parallel universes. Use it for yourself, for your team, and especially for interpreting your timeline.

The 5 Levels of AI

- Level 0 — The Untouched (8 billion): AI is someone else's problem. Or opportunity. Or apocalypse. Whatever, it's not theirs.

- Level 1 — The Normies (~800 million): Free chatbot users. Weekend warriors. For them, ChatGPT = AI and switching from GPT-4o to GPT-5 was an existential moment.

- Level 2 — The Power Users (~5 million): They pay $20/month because the free tier isn't enough: they keep hitting request limits and want better models and more access. Somewhat frustrated by hallucinations, but still very confident that AI will disrupt everything. They usually think there's an AI tool for everything and don't realize most of those tools run on the same underlying tech.

- Level 3 — The Builders (~500k): They use ChatGPT Pro or Claude Max. They build rudimentary stuff with n8n, Zapier or Make. They don't trust ChatGPT outputs anymore and have developed an intuition for spotting hallucinations. They are all in on AI but don't yet know how deep the rabbit hole goes. They dominate LinkedIn with their fancy n8n workflows, in which they call everything an AI agent.

- Level 4 — The Operators (~50k): Using Claude Code or Cursor to build stuff for themselves. Learning to navigate AI swarms for building. Learning engineering principles to get better at it. Spending increasingly large amounts of time plumbing context instead of typing into models. These people stop hyping AI, because they know how much of it is held together by WD-40 and duct tape.

- Level 5 — The Researchers: They don't use AI. They build it. This is PhD superstar territory, demigod level knowledge.

Notice the pattern? Each level is at least ~10× smaller than the last. Each wall you break through filters out 90% or more of the people who were with you.
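The level sizes are rough estimates, not measured data. A minimal sketch (the numbers are simply copied from the list above) makes the arithmetic behind the pattern explicit:

```python
# Rough population estimates per level, copied from the list above (Level 5 has no estimate).
LEVELS = [
    ("Level 0", 8_000_000_000),
    ("Level 1", 800_000_000),
    ("Level 2", 5_000_000),
    ("Level 3", 500_000),
    ("Level 4", 50_000),
]


def shrink_factors(levels):
    """Return (level, factor) pairs: how many times smaller each level is than the previous one."""
    return [
        (name, prev_size / size)
        for (_, prev_size), (name, size) in zip(levels, levels[1:])
    ]


for name, factor in shrink_factors(LEVELS):
    print(f"{name}: {factor:.0f}x smaller than the previous level")

# Combined share of Levels 3 and 4 relative to the Level 0 figure.
share = (500_000 + 50_000) / 8_000_000_000
print(f"Levels 3 and 4 combined: {share:.4%}")
```

Every step comes out at 10x except Level 1 → 2, which is 160x, so "~10×" works best as a lower bound. Levels 3 and 4 together come to about 0.0069% of 8 billion.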

This is why your X feed feels schizophrenic. You're watching conversations between people who might as well be speaking different languages. Because they are.

The "Aha From Pain" Moments

Nobody reads their way up this ladder. You don't level up from a Medium article or a YouTube tutorial.

You level up when you hit a wall, curse at your screen, and refuse to accept that this is as good as it gets.

Level 0 → 1: "Holy sh*t, it actually works"

The moment: You paste your mess of a resume and watch it become something a human might read. You feed it your spaghetti code and it explains what you wrote better than you could. You ask it to explain why your houseplant is dying and it knows.

The wall you broke: Skepticism. Or more often unawareness.

Your new religion: "AI is useful (sometimes)."

You sound like: "Have you tried ChatGPT? It's actually incredible!"

Level 1 → 2: "The free stuff is costing me money"

The moment: You hit your 40th message of the day at 2 PM. Or Claude gives you error messages instead of answers during a client call. Or GPT makes your code elegant but sometimes nonsensical. You realize: inconsistent AI is worse than no AI.

The wall you broke: Treating AI like a toy instead of a tool.

Your new religion: "I'll pay for reliability because time is money."

You sound like: "The latest Claude update totally changed my workflow."

Level 2 → 3: "Copy-paste is killing me slowly"

The moment: Your perfect prompt is buried in a Notes app graveyard. You have seventeen versions of "the one that works" and they're all different. You realize you've been doing the same "upload → format → prompt → copy → paste" dance 50 times a week. Your wrist hurts. Your soul hurts more. You are a living and breathing ETL pipeline.

The wall you broke: Manual repetition.

Your new religion: "Systems beat sessions."

You sound like: "Just built a simple automation that turns Jira tickets into PRs. Game changer."

Level 3 → 4: "This needs to work when I'm asleep"

The moment: Your "simple automation" is now business-critical. When it breaks, Slack lights up. You need versioning, rollbacks, monitoring, and a way to explain to your CEO why the AI decided to email all your customers in Sanskrit at 3 AM.

The wall you broke: Hobby mode.

Your new religion: "This is infrastructure now."

You sound like: "Our p99 latency is still under 200ms even with the RAG pipeline."

Level 4 → 5: "The models aren't good enough"

The moment: You've squeezed every drop from prompt engineering. You've tried every model, every temperature, every technique with an acronym. Your evals plateau. You start reading papers. You probably enroll in a postgrad program, and that's when even this blog starts to seem childlike in its complexity.

The wall you broke: Being a consumer of AI.

Your new religion: "We have to push the edge ourselves."

You sound like: "The attention mechanism's quadratic complexity is the real bottleneck here."

The Same News, Five Different Planets

This is where it gets interesting. And by interesting, I mean completely insane.

Headline: "OpenAI Announces GPT-5"

- L1: "Is this the iPhone moment? Should I be scared?"

- L2: "How much faster? What's the token limit? When do I get access?"

- L3: "Show me the API docs and the pricing."

- L4: "What's the migration path? How do we A/B this against production?"

- L5: "Interesting architecture choice. Let's reproduce this and see what breaks."

Headline: "New No-Code AI Platform Raises $50M"

- L1: "Finally! AI for normal people!" (They'll never sign up)

- L2: "Could this replace my three subscriptions?" (It won't)

- L3: "But can I script it?" (You can't)

- L4: "Another wrapper. Next." (They're right)

- L5: "Who's funding this slop?" (Good question)

Tweet: "Just discovered this INSANE prompt that makes ChatGPT 10x smarter!!!"

- L1: Bookmarks immediately. Life changed.

- L2: Adds it to their prompt library. Maybe.

- L3: Tests it. Meh. Moves on.

- L4: Doesn't see it. Too busy shipping.

- L5: Mutes the account.

This is why your timeline gives you whiplash. You're watching five parallel conversations happening in the same feed. The L1 celebrating their first win. The L2 optimizing their workflow. The L3 showing off their weekend hack. The L4 sharing their postmortem. The L5 dropping arXiv links.

They're all right. They're all wrong. They're all talking past each other.

How to Diagnose Your Level

Level 1 Symptoms

- You screenshot AI outputs to share with friends

- "How do I save this conversation?" is a real problem

- You have ChatGPT bookmarked

- You think prompt engineering is magic

- You say "AI" like it's one thing

Level 2 Symptoms

- You have strong opinions about Claude vs GPT

- You've rage-quit a model mid-task

- $20/month feels like a bargain

- You know what "context window" means

- You've started a sentence with "I asked AI to..."

Level 3 Symptoms

- Your terminal history has "curl" and "api key"

- You have prompts in version control

- You've written "TODO: handle this edge case" next to AI code

- You know what temperature does (and you've tweaked it)

- You've explained rate limits to a non-technical person

Level 4 Symptoms

- You have dashboards for your AI pipelines

- "Fallback model" is in your vocabulary

- You've written a postmortem about prompt drift

- You measure tokens like AWS measures compute

- You've said "we need to instrument this"

Level 5 Symptoms

- You've implemented a paper from scratch

- Your bookmarks are 50% arXiv links

- You have opinions about transformer alternatives

- You've trained something (even if badly)

- You understand why everyone else is wrong about AGI

How to Actually Level Up (Without the BS)

Forget the courses. Forget the certifications. Here's how you actually climb:

To Level 2

Track every time AI saves you 10+ minutes for one week. Use real numbers. If the number is meaningful, pay for the upgrade. If it's not, stay at L1—it's fine there.
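To make "use real numbers" concrete, here's a minimal sketch of the one-week tally. The log entries and the $40/hour rate are made-up placeholders, and treating "meaningful" as "worth more than the $20/month plan" is an assumption; swap in your own entries and threshold.

```python
from dataclasses import dataclass

PLAN_COST_PER_MONTH = 20.0  # the $20/month tier discussed above
WEEKS_PER_MONTH = 52 / 12   # about 4.33


@dataclass
class Session:
    task: str
    minutes_saved: float


def monthly_value(week_log, hourly_rate, min_minutes=10):
    """Dollar value per month of the time saved, counting only sessions that saved 10+ minutes."""
    counted = [s.minutes_saved for s in week_log if s.minutes_saved >= min_minutes]
    hours_per_week = sum(counted) / 60
    return hours_per_week * hourly_rate * WEEKS_PER_MONTH


# Hypothetical one-week log; replace with your own entries.
week = [
    Session("summarize meeting notes", 15),
    Session("draft client email", 12),
    Session("fix spreadsheet formula", 5),  # under 10 minutes, not counted
    Session("outline proposal", 30),
]

value = monthly_value(week, hourly_rate=40)  # $40/hour is an assumed rate
print(f"Estimated value: ${value:.0f}/month vs. ${PLAN_COST_PER_MONTH:.0f}/month plan")
print("Pay for the upgrade" if value > PLAN_COST_PER_MONTH else "Stay at L1")
```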

To Level 3

Take your most repetitive AI task. The one you do more than 3x per week. Turn it into a single command. I don't care if it's a bash script held together with duct tape. Make it one button.
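A minimal sketch of that "one button" in Python, assuming a hypothetical meeting-notes task. The prompt lives in one saved template instead of a Notes app, and `call_model` is a hypothetical placeholder you would replace with your provider's SDK or HTTP call; nothing here reflects a specific vendor's API.

```python
import argparse
from pathlib import Path
from string import Template

# Hypothetical prompt template. In practice, keep it in its own file under version control
# so "the one that works" stops living in a Notes app.
PROMPT = Template(
    "Rewrite the following meeting notes as a bulleted summary "
    "with an owner and a due date for every action item:\n\n$notes"
)


def build_prompt(notes: str) -> str:
    """Fill the saved template so every run uses exactly the same prompt."""
    return PROMPT.substitute(notes=notes.strip())


def call_model(prompt: str) -> str:
    """Hypothetical placeholder for your provider's SDK or HTTP call (not shown here)."""
    return f"[model output for a {len(prompt)}-character prompt]"


def main(argv=None) -> None:
    parser = argparse.ArgumentParser(description="Turn raw meeting notes into a summary.")
    parser.add_argument("notes_file", nargs="?", type=Path, help="text file with raw notes")
    args = parser.parse_args(argv)
    if args.notes_file is None:
        parser.print_help()  # no input given: show usage instead of failing
        return
    print(call_model(build_prompt(args.notes_file.read_text())))


if __name__ == "__main__":
    main()
```

Saved as, say, `summarize.py` (a hypothetical name), the whole upload, format, prompt, copy, paste routine becomes `python summarize.py notes.txt`, which you can bind to a shell alias or a keyboard shortcut.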

To Level 4

Take that button and put it on rails:

- Add retry logic

- Add error handling

- Add monitoring

- Add a backup plan

- Give it to someone else to run

When it breaks at 3 AM and you fix it without getting out of bed, you've arrived.
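Here is a minimal sketch of those rails in Python, under stated assumptions: each model is wrapped as a plain function that takes a prompt and returns text, `flaky_primary` and `steady_fallback` are hypothetical stand-ins for real clients, and "monitoring" is just structured log lines you could ship to a dashboard or alerting tool.

```python
import logging
import time
from typing import Callable, Sequence, Tuple

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("ai_pipeline")

ModelFn = Callable[[str], str]


def run_with_rails(
    prompt: str,
    models: Sequence[Tuple[str, ModelFn]],
    retries: int = 3,
    base_delay: float = 1.0,
) -> str:
    """Call each model in order (primary first, then fallbacks), retrying with backoff."""
    for name, call in models:
        for attempt in range(1, retries + 1):
            try:
                result = call(prompt)
                if not result.strip():
                    raise ValueError("empty response")
                log.info("model=%s attempt=%d ok", name, attempt)  # monitoring: success
                return result
            except Exception as exc:  # error handling: record the failure, then retry
                log.warning("model=%s attempt=%d failed: %s", name, attempt, exc)
                if attempt < retries:
                    time.sleep(base_delay * 2 ** (attempt - 1))  # exponential backoff
        log.error("model=%s gave up after %d attempts", name, retries)
    # Backup plan: every model failed, so fail loudly for a human (or an alert) to pick up.
    raise RuntimeError("all models failed")


# Hypothetical stand-ins for real model clients.
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary model timed out")


def steady_fallback(prompt: str) -> str:
    return f"summary of: {prompt}"


print(run_with_rails(
    "Q3 churn report",
    [("primary", flaky_primary), ("fallback", steady_fallback)],
    base_delay=0.01,
))
```

Ordering the models as a list keeps the fallback policy in one visible place, and the log lines double as the paper trail you'll want when someone else is the one running it.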

To Level 5

Start measuring what "good" actually means in your domain. Build evals. When you plateau, stop tweaking prompts and start reading papers. When the papers don't help, write your own.
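"Build evals" can start very small. A minimal sketch, assuming a hypothetical invoice-extraction task: each case pairs an input with a check that encodes what "good" means for it, and `naive_model` is a deliberately dumb stand-in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # your domain's definition of "good" for this case


def pass_rate(model: Callable[[str], str], cases: List[EvalCase]) -> float:
    """Fraction of eval cases whose model output passes its check."""
    passed = sum(1 for case in cases if case.check(model(case.prompt)))
    return passed / len(cases)


# Hypothetical cases for an invoice-extraction task: "good" means the right total comes back.
cases = [
    EvalCase("Invoice #12, total due: $300", lambda out: "300" in out),
    EvalCase("Total: EUR 45.50 (incl. VAT)", lambda out: "45.50" in out),
    EvalCase("No amount listed", lambda out: out.strip().lower() == "unknown"),
]


def naive_model(text: str) -> str:
    """Stand-in for a real model call: just returns the last word of the input."""
    return text.split()[-1]


print(f"pass rate: {pass_rate(naive_model, cases):.0%}")
```

Rerun the same cases after every prompt or model change. When the score stops moving no matter what you tweak, that's the plateau where reading papers starts to pay off.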

The Ugly Truth

If you're at Level 3 or 4, you're not "ahead of the curve."

You're in a different dimensional plane of existence.

You're part of the roughly 0.007% who've turned AI from a party trick into a power tool. Your competition isn't using AI; they're using ChatGPT. There's a decade of capability gap hidden in that difference.

But here's what matters: Don't argue across universes.

When your L1 boss gets excited about ChatGPT, don't roll your eyes. Translate. When your L5 friend starts talking about constitutional AI, don't pretend. Ask. When your timeline erupts about the latest "breakthrough," check which universe it's breaking through in.

The walls between levels aren't borders—they're filters. Each one you break through doesn't make you better than anyone else. It makes you different. Your problems change. Your tools change. Your blind spots change.

The magic isn't knowing which level you're on.

It's knowing that the levels exist.

For years I spent my days pulling people from Level 1 to Level 2, then from Level 2 to Level 3. For the last year here at the Lumberjack, I've been spending my days pulling people from Level 3 to Level 4. Not with hype. Not with hand-waving. With ugly code that works, wired to real business problems, with enough structure to survive contact with reality.

This probably means I'm losing a bunch of people because I get too technical. My AI-First Operator Bootcamp is a good example: mums trying to prompt their way to more productivity sit in the same sessions as members of the Agentics Foundation discussing swarm intelligence.

MIT just came out saying AI is basically a $40 billion cope for corporate board members. Why?

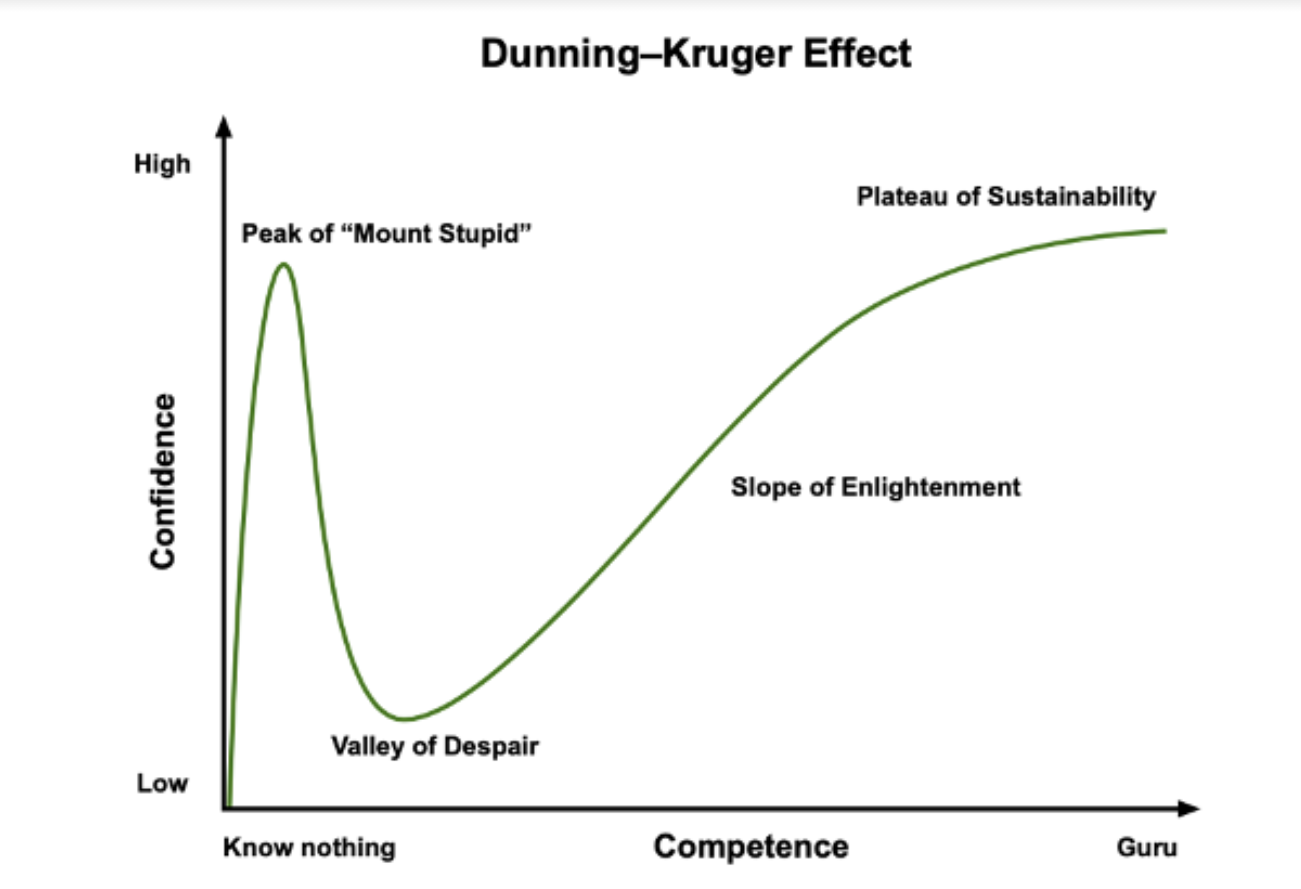

Because the world is easiest to understand when you're standing on the Peak of Mt Stupid, and the only way to go from there is down. And people don't want to go that way. In other words: people very rarely move between levels voluntarily, because they don't know there's another level. They're not only uninformed but also confident.

So instead of admitting that AI itself won't change the world unless we change with it, they'll spend ridiculous amounts of time on mental gymnastics so elaborate that their EEG charts become a solid argument for making it an Olympic sport.

This creates infuriating scenarios where executives say "let's integrate AI into our business because that's the future," but when solutions are presented, they get rejected as "too complicated," because "ChatGPT can do it anyway, if not now then in 6 months" (not true).

The companies winning with AI right now aren't the ones with the best models or the biggest budgets. They're the ones who understood early that AI isn't one thing. It's a ladder. And the only way up is through the walls. That means organizational development AND digging into AI implementation at the same time. Who would've thunk, huh? AI turns out to be just another software project.

Your timeline isn't confused. It's just crowded with people standing on different rungs, pointing at different horizons, using the same words to describe completely different views.

Now you know why.