Maarten Buyl is a researcher at Ghent University. He recently co-authored a paper titled “Large Language Models Reflect the Ideology of their Creators”.

More stuff about ideology and alignment. The internet is full of these articles, the media loves it. It’s easy to be scared and fear makes people click. I don’t spend much time on these thoughts.

Then a question popped into my head:

But is it possible to get rid of bias?

To dig into this, I first wanted to understand bias as a phenomenon.

Disclaimer: This is a deeply philosophical piece that has been sitting in my drafts for months. I want to write more. To do that, I need to write shorter posts. This is one attempt to do that.

The goal when we train LLMs can be described as follows:

Train the model so that, given any prompt (𝜉), it generates a response (𝜂) that closely matches the expected response (𝜂*) from the corpus (Ω), minimizing the bias or error (𝜖).

There are two problems with this:

- The expected response (𝜂*) in this case means ground truth according to the corpus. In other words, in theory, if I only trained a model on Catholic literature, I'd train the ultimate Catholic model.
- Treating (𝜂*) as ground truth implies the existence of objective truths, which exist in some fields, like mathematics, and don't exist in others, like culture.

So the training process is basically about trying to minimize the loss function of bias: ϵ=L(η,η*)

The way you would train a model to get rid of bias is kind of like this: You initialize random weights (let's mark them with theta, θ) and you feed 𝜉 to generate 𝜂. Then you calculate the loss function above. Based on the result, you adjust θ using gradient descent. Then you keep repeating the process, updating θ to minimize 𝜖.
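The loop described above can be sketched in a few lines of Python. This is a toy, not how an LLM is actually trained: the "model" is a single weight `theta`, the response is `theta * xi`, the loss is squared error, and the three-pair corpus is made up for illustration. All of these are my assumptions.

```python
import random

def loss(eta, eta_star):
    """Squared error between the response and the expected response."""
    return (eta - eta_star) ** 2

def train(corpus, steps=2000, lr=0.01, seed=0):
    """Fit eta = theta * xi to (xi, eta_star) pairs with plain gradient descent."""
    rng = random.Random(seed)
    theta = rng.uniform(-1.0, 1.0)  # random initial weight
    for _ in range(steps):
        # Average gradient of the squared error with respect to theta.
        grad = sum(2 * (theta * xi - eta_star) * xi
                   for xi, eta_star in corpus) / len(corpus)
        theta -= lr * grad  # step against the gradient
    return theta

# Hypothetical corpus: every expected response is 3 times the prompt.
corpus = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

theta = train(corpus)
epsilon = sum(loss(theta * xi, eta_star) for xi, eta_star in corpus) / len(corpus)
print(theta, epsilon)
```

Notice that the loop never asks whether "3 times the prompt" is true in any deeper sense. It only measures the distance to whatever the corpus says.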

Then the question is: how do you decide if η is correct or incorrect?

Again, when we talk about objective facts, it's easy. If ϵ=L(η,η*) is high, we say that the model is hallucinating.

But it's not really that simple. If we treat the entire world's data as the "primal corpus" (also known as reality) and mark it as R, we must acknowledge that our corpus is only a subset of reality: Ω⊂R.

If we had access to all of reality, our expected response could be marked as H (capital η). I'm disregarding the philosophical question of whether the true nature of reality can be observed and known (as in, whether it is possible to create a corpus that encompasses all of reality, Ω=R).

This means that there is a bias between our response and our corpus, which can be described by ϵ=L(η,η*), but there is also a bias in relation to the actual truth. I'll mark our divergence from actual reality with capital epsilon, E, and we can use a loss function here too: E=L(η*,H). Which means that our actual bias formula would look something like this:

That cannot be computed, because H cannot be observed or calculated, and η* is inherently biased because of the incomplete and subjective nature of Ω⊂R.

All tech can do is work towards more accurate fitting to the corpus but never actually get rid of biases.
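To make that concrete, here is a toy world where we cheat and define reality ourselves, so that H is known for once. Everything in it is an assumption for illustration: "reality" is a list of random numbers, the corpus keeps only the positive ones (a deliberately skewed subset), and the "expected response" is simply an average.

```python
import random

def loss(a, b):
    """Squared error between two scalar responses."""
    return (a - b) ** 2

rng = random.Random(42)

# Hypothetical reality R: 10,000 observations centred on zero.
reality = [rng.gauss(0.0, 1.0) for _ in range(10_000)]

# Corpus (Omega): a subset of R where only the positive observations got written down.
corpus = [x for x in reality if x > 0]

H = sum(reality) / len(reality)       # the "true" response, unobservable in practice
eta_star = sum(corpus) / len(corpus)  # ground truth according to the corpus

# A model that fits the corpus perfectly.
eta = eta_star

epsilon = loss(eta, eta_star)      # bias against the corpus
big_epsilon = loss(eta_star, H)    # divergence of the corpus from reality

print(epsilon, big_epsilon)
```

The perfectly fitted model reports zero error against its corpus while still sitting far from the toy reality. Outside of a toy like this, the second number is the one we never get to see.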

We have no objective point of reference to determine what total lack of bias means.

Alignment and bias

Here’s a controversial take. Imagine a world where we are fully made aware of our human biases. We cannot change our human nature, but we know how biased we are against an objective reality.

We would then start creating social constructs to use language as workarounds for our biases. We would create new terms to use, and new rules to follow. It becomes artificial. Not human. Forcing humans to behave in such a way might even introduce tension in society.

Then we create an AI system that is designed to avoid our biases by incorporating such language and rules.

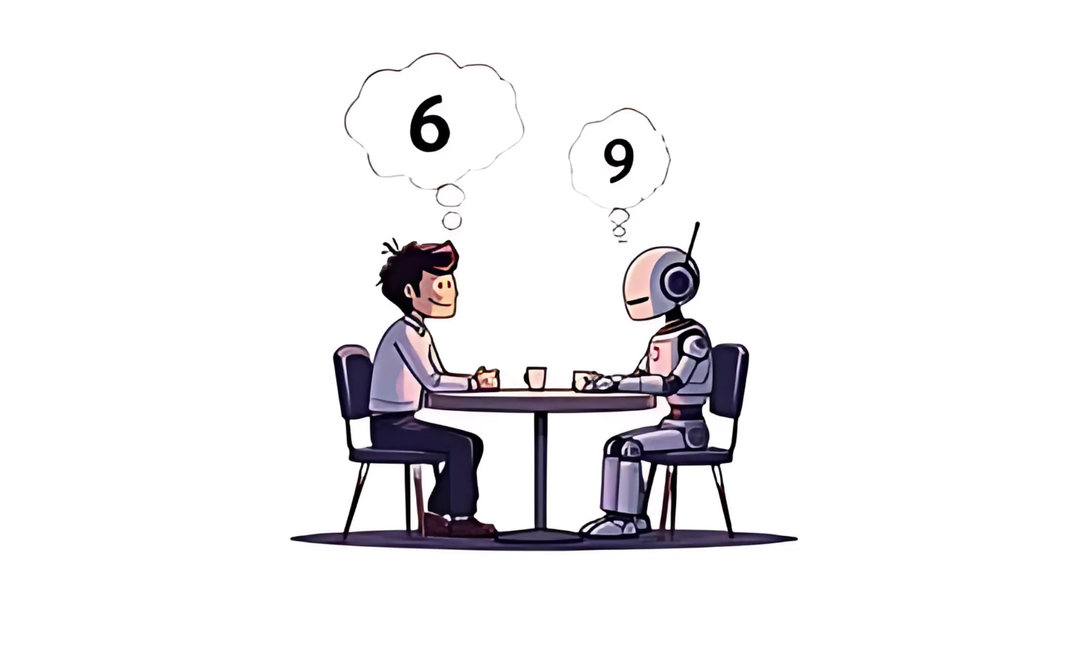

This AI would by definition be nothing like a human being. In our pursuit of eliminating bias, we eliminated alignment. This AI system would have vastly different values than we do, therefore creating uncertainty and unpredictability for humans.

If humans are biased by design, getting rid of bias must sacrifice alignment.