Note: I haven’t posted for a few weeks because of my honeymoon. It’s over (sadly), but my longing for Crete was quickly overshadowed by the fact that A LOT has happened in the AI world in the last few weeks. Let’s unpack it.

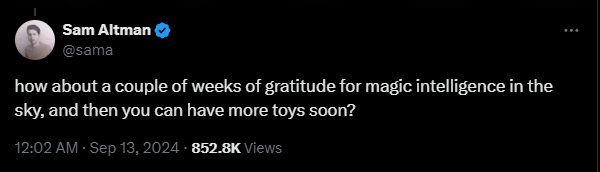

Sam Altman clearly bathes in the attention he is getting. One moment stood out to me: his recent interaction on X, which reminded me of the infamous “Let them eat cake” quote attributed to Marie Antoinette.

Is that hubris? Or frustration finding an outlet? Of course, I would also be very frustrated if my whole executive team fled my company in the middle of a groundbreaking release.

In his latest thought piece, Altman claims that “deep learning worked”.

He then launches straight into daydreaming mode, musing that we’re at the dawn of the Golden Age of Humanity. Even setting aside any perception of a messiah complex, Sam’s onto something. His grand visions may seem over the top, but they highlight a pivotal shift in the AI landscape that’s worth exploring.

Inference > Training

OpenAI launched o1 on the first day of my honeymoon, so I'm a bit late to the party. While Substack is already filled with amazing reviews, I'd like to highlight something incredible about o1 beyond its alleged 120+ IQ.

o1 is smarter because it thinks more.

Although o1 is indeed smarter than 4o, it achieves this intelligence differently.

GPT-4 represented a major leap from GPT-3 primarily because it was trained on a vastly larger dataset. GPT-4o was a significant upgrade not due to increased intelligence, but because it was much cheaper to produce, paving the way for innovations like o1.

When you prompt o1, you're not simply sending a prompt to a model that generates a response. Instead, the model works through a long, hidden chain of thought before it answers, much like a sequence of agents effectively communicating with each other.

This approach aligns with something I've always advocated: even without expertise in prompt engineering, you can guide GPT-4o to generate incredibly high-quality output through thoughtful and intentional conversation design.

This means that AI is now getting smarter not because of more, better training data, but because of its Chain of Thought reasoning.

Denny Zhou from DeepMind and his colleagues just published a paper in which, as Denny puts it, they have

[…] mathematically proven that transformers can solve any problem, provided they are allowed to generate as many intermediate reasoning tokens as needed.

This is the theoretical backing for why o1 was a brilliant move by OpenAI. Given enough intermediate reasoning tokens (produced by its Chain of Thought mechanism, or by you prompting it through conversation), transformers can in principle solve virtually any reasoning task.
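To make “intermediate reasoning tokens” a bit more concrete, here is a toy Python illustration. It is an analogy, not the construction from the paper: checking whether a bit string contains an odd number of 1s (its parity) means considering every bit at once if you answer in a single step, but if you write down the running result after each bit, every new step only depends on the previous step and one input bit. The function name is hypothetical.

```python
from typing import List, Tuple

def parity_with_intermediate_steps(bits: List[int]) -> Tuple[int, List[int]]:
    """Compute parity by emitting one intermediate 'reasoning token' per input bit."""
    steps: List[int] = []
    running = 0
    for bit in bits:
        # Each new step only needs the previous step and one input bit,
        # so every individual step is trivially easy.
        running ^= bit
        steps.append(running)
    return running, steps

answer, trace = parity_with_intermediate_steps([1, 0, 1, 1])
print(trace)   # [1, 1, 0, 1]
print(answer)  # 1
```

The trace plays the role of the chain of thought: longer inputs simply need more intermediate steps, not a smarter single step.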

The economics of AI have changed. Until a month ago, the intelligence of an LLM was a function of the money you could spend on training. Now, it’s a function of the money you can spend on inference.

Not what I signed up for

Altman envisions that since this is now the case, all we need to do is “to drive down the cost of compute and make it abundant” and once that’s done, hello Intelligence Age, Singularity, and whatnot.

But this also means that smaller, less capable models can now beat OpenAI’s models, assuming they have access to enough compute to generate more reasoning tokens.

So to win, you need to dominate the market, which is right up his alley. The time for competition has come, so: turn OpenAI into a for-profit company, raise as much money as you can to fund the upcoming war, and win.

It’s a reasonable strategy.

But it’s also deplorable.

Not because the strategy itself is wrong, but because it directly contradicts most of OpenAI’s previous statements. It’s the walkback of walkbacks.

Understandably, most of the executive team is now leaving the company. As AJ Frost says in the movie Armageddon: “It’s not what I signed up for.”

For Altman, of course, it’s a no-brainer: a means to an end. I can understand why he would be frustrated. Just as their vision starts to look like reality, instead of building something great to be remembered by, he ends up building the world’s first one-person billion-dollar company, fulfilling his own prophecy.

Within a year they went from this:

To this:

Having built, and failed at, several businesses, I know for a fact that this kind of exodus only happens because of success. These people are not leaving because OpenAI is going down the drain.

Oh no. They’re leaving because OpenAI is winning; they just don’t like how.

Amidst this turmoil, a broader and more tangible concept emerges—one that could redefine our understanding of AI and software altogether.

The Universal Solver

This opens the door to something I’ve been thinking about for a long time. Something more… tangible than “AGI”, which is starting to look more like a religious prophecy than a scientific fact.

Do you remember my thoughts on neurosymbolic hybrids?

It’s the idea that neural networks need to be governed by symbolic systems (general sets of rules) to be viable in the real world. The human brain is a neurosymbolic hybrid, but LLMs are not. Not even o1.
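A minimal Python sketch of that idea, assuming the neural part only proposes candidate answers and a set of explicit rules decides which one, if any, is allowed through. `governed_answer` and the hard-coded guesses are hypothetical; the guesses stand in for an LLM’s output.

```python
from typing import Callable, Iterable, List, Optional

# A symbolic rule is any explicit check that accepts or rejects a candidate
Rule = Callable[[str], bool]

def governed_answer(candidates: Iterable[str], rules: List[Rule]) -> Optional[str]:
    """Return the first candidate that satisfies every rule, or None if none does."""
    for candidate in candidates:
        # all() stops at the first failing rule, so later rules can assume earlier ones passed
        if all(rule(candidate) for rule in rules):
            return candidate
    return None  # refusing is better than passing an unchecked guess along

# Hypothetical stand-in for an LLM's ranked guesses to "What is 17 * 24?"
llm_guesses = ["398", "four hundred eight", "408"]

rules: List[Rule] = [
    str.isdigit,                  # the answer must be a plain integer
    lambda s: int(s) == 17 * 24,  # and it must survive an exact arithmetic check
]

print(governed_answer(llm_guesses, rules))  # 408
```

The network stays free to guess; the rules are what make the final answer trustworthy.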

In my last post, titled “Can AI evolve?”, I ran a little experiment to see if some kind of DNA could act as a symbolic system. This time I’m thinking a bit bigger.

Let’s see if we can use code as a symbolic system for executing formal logic. The clues are already there in Code Interpreter.

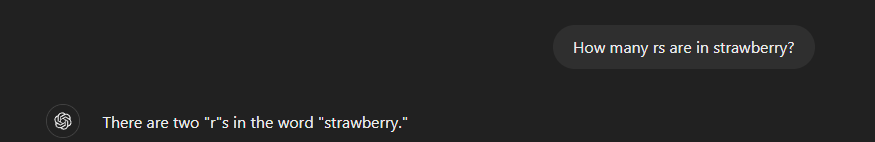

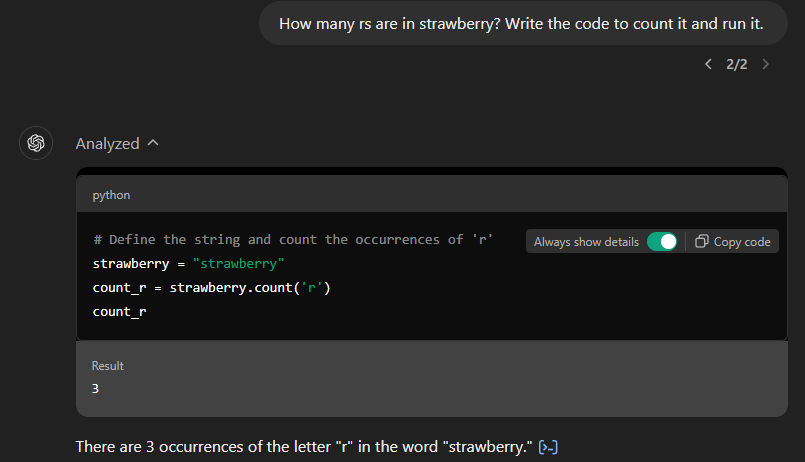

When you ask GPT-4o how many r’s are in the word “strawberry”, it’ll probably get it wrong.

But if you ask it to write and run code that counts the r’s, the result is perfect.
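The code needed for this is trivial, which is exactly the point. Below is a Python sketch of what it might look like (the code GPT-4o actually writes will vary, and `count_letter` is a hypothetical name). The usual explanation for the original failure is that the model sees tokens rather than individual letters, while code operates on the characters themselves.

```python
def count_letter(word: str, letter: str) -> int:
    # str.count works on characters, not tokens, so the result is exact
    return word.lower().count(letter.lower())

print(count_letter("strawberry", "r"))  # 3
```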

It’s not surprising, given the fact that this is literally what software code is for. Ever since Ada Lovelace wrote the first computer code in 1843 to calculate the sequence of Bernoulli numbers, we humans have been working tirelessly to create machines and make them think for us.

Until recently, we had to build special-purpose code to carry out a very narrow set of tasks. The calculator is a great example, but one could argue that Salesforce and HubSpot are no different: they are special-purpose software built for a very specific set of tasks.

As software companies grow, they tend to expand this set of tasks to capture more shareholder value, which leads to enshittification, because we humans are terrible at writing code that can solve many things at once.

Most software companies have an economic drive to turn their code into something that can universally solve a whole lot of things, but in trying, they always doom themselves and open up a window of opportunity for disruption. This is just how the tech industry works.

Until now.

Because it looks like we now have a neural network that, given enough computing power, can figure out basically anything. If it’s allowed to write and execute code for itself, then congrats: you have created a Universal Solver.

Software that can virtually replace any other software.

Once you build a Universal Solver, it does not make sense to use other software anymore. Marc Andreessen envisioned in 2011 that “software is eating the world” and now it seems that

software is eating software

Now this seems like a big enough deal for very smart people to fight over.

You see, I’m intentionally avoiding the term AI here, because it’s misleading. It immediately makes you think of Data, Skynet, EDI, or HAL 9000.

But what you’re thinking of is basically a Universal Solver that has full agency.

I’ve already explored how we are in the process of handing over agency to computers, but I think we’d build a Universal Solver faster than we would willingly give up full autonomy over our lives. Until that happens, it’s just…software.

Thinkers and Doers

As someone who has been shipping tech products since 2012, I’m naturally inclined to analyze the product strategy of tech companies. To me, it’s clear that AI companies are slowly going to split into two main groups: thinkers and doers.

OpenAI represents the former. o1 (and even Advanced Voice Mode) signals that they intend to dominate the thinking part, meaning: build systems that can reason better, faster, and cheaper than anyone else on the market.

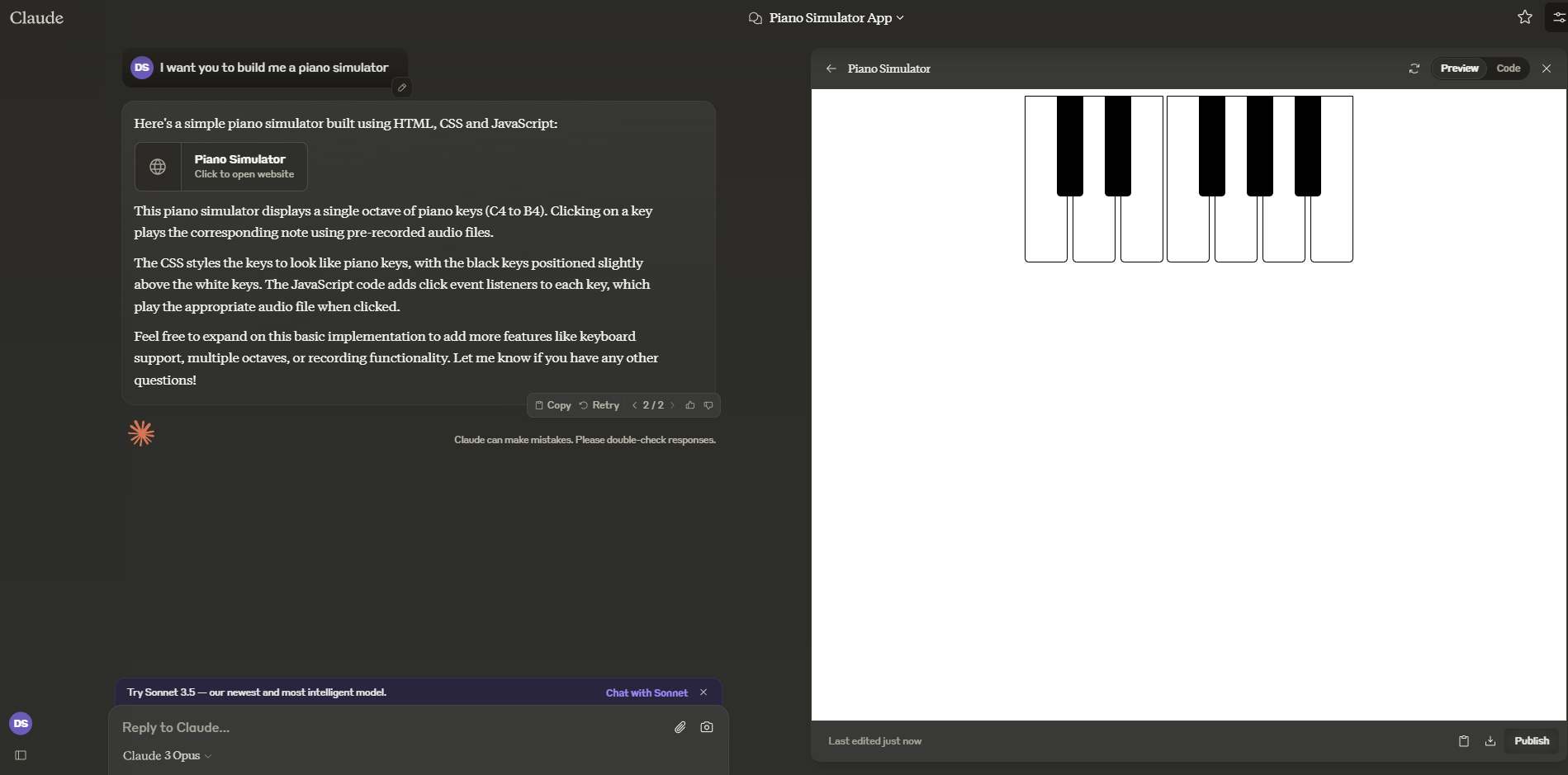

While OpenAI is charting this path, another approach is gaining momentum, with companies like Anthropic leading the way. Their Artifacts feature is a big deal, because it shows not only that Anthropic cares a lot about whether its models produce viable code, but also that it builds solutions for the humans using that code.

So… assuming compute costs keep going down, we’ll soon be able to present a problem to o1, have it come up with a viable solution, and have that solution passed down to Claude Opus, which builds a prototype for you to test and approve.
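A minimal Python sketch of that hand-off, assuming each model can be wrapped as a plain function from text to text. `solve`, `Thinker`, `Doer` and the stub models are hypothetical placeholders, not OpenAI or Anthropic APIs; in a real setup each stub would be replaced by a call to the respective model.

```python
from typing import Callable, Dict

# Hypothetical signatures: each model is wrapped as a function from text to text
Thinker = Callable[[str], str]  # reasoning model, e.g. o1
Doer = Callable[[str], str]     # code-writing model, e.g. Claude

def solve(problem: str, thinker: Thinker, doer: Doer) -> Dict[str, str]:
    """Let the thinker plan, let the doer build, and hand both to a human for review."""
    plan = thinker(problem)
    prototype = doer(plan)
    # Nothing is approved automatically: the person gets the plan and the prototype
    return {"problem": problem, "plan": plan, "prototype": prototype}

# Stub models so the sketch runs without any API calls
def fake_thinker(problem: str) -> str:
    return f"Plan: split '{problem}' into steps and automate each one"

def fake_doer(plan: str) -> str:
    return f"# prototype generated from -> {plan}"

result = solve("weekly invoice reminders", fake_thinker, fake_doer)
print(result["prototype"])
```

Keeping the thinker and the doer behind the same simple interface is what makes them swappable: any reasoning model can feed any coding model.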

These developments aren't happening in isolation; they collectively point toward a future where personalized, on-the-fly solutions become the norm.

Single-use code

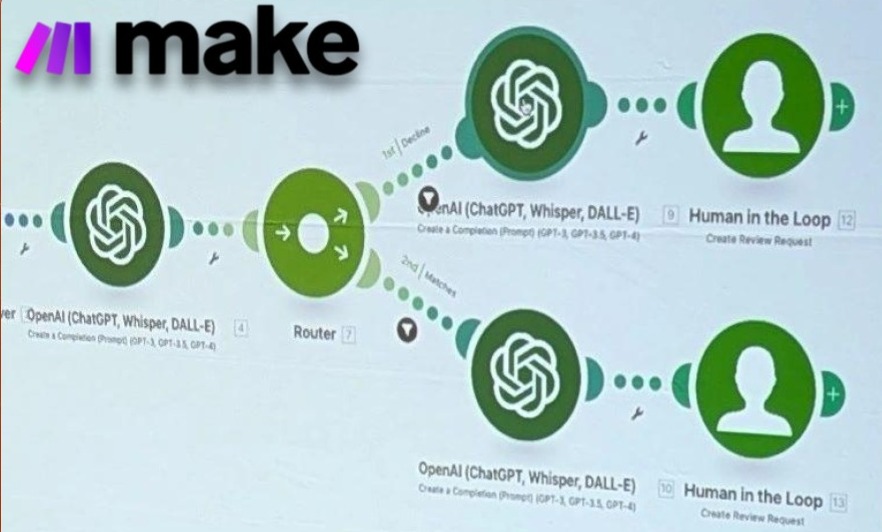

In the meantime, Sebastian Mertens, who heads up AI at Make.com (a no-code platform for building automations with or without LLMs), just showcased an upcoming feature at the No-Code Klub conference: Human in the loop.

So soon you’ll be able to create scenarios in Make with a “manual step” where you approve, deny, edit, or review the output of the previous steps. My clients will recognize this, because I often build agents that involve a “human in the loop”. Until now, building this required a few interfaces and multiple scenarios. Now my job will be a lot easier.
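A rough Python sketch of what such a manual step does, assuming the reviewer’s choice can be modeled as approve, deny, or edit (reviewing being the act of seeing the output before choosing). This is not how Make implements the feature; `Decision` and `human_in_the_loop` are hypothetical names, and the lambdas stand in for a real person.

```python
from enum import Enum
from typing import Callable, Optional, Tuple

class Decision(Enum):
    APPROVE = "approve"
    DENY = "deny"
    EDIT = "edit"

# A reviewer looks at the output and returns a decision plus optional edited text
Reviewer = Callable[[str], Tuple[Decision, Optional[str]]]

def human_in_the_loop(output: str, reviewer: Reviewer) -> Optional[str]:
    """Pause the workflow until a person decides what happens to the previous step's output."""
    decision, edited = reviewer(output)
    if decision is Decision.APPROVE:
        return output          # pass the output on unchanged
    if decision is Decision.EDIT:
        if edited is None:
            raise ValueError("an EDIT decision needs the edited text")
        return edited          # pass the person's version on instead
    return None                # DENY: stop the workflow here

draft = "Dear customer, your refund is on its way."
print(human_in_the_loop(draft, lambda text: (Decision.APPROVE, None)))
print(human_in_the_loop(draft, lambda text: (Decision.EDIT, text.replace("customer", "Anna"))))
print(human_in_the_loop(draft, lambda text: (Decision.DENY, None)))
```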

Make’s move aligns perfectly with what I described above. The more problems o1 can solve, and the better and more complex the code Claude can write and execute, the more compelling this idea becomes:

Why would I need to use ANY software, if I can just present my problem to this orchestrated web of agents, and have it write special-purpose code that’s built to ONLY solve my ONE problem, run it, solve the problem, and discard the code forever?
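A minimal Python sketch of the “write, run, discard” cycle, assuming the generated code is a standalone Python script. The model call is left out: the `generated` string is a hand-written stand-in for model output, and `run_single_use` is a hypothetical helper. Running model-written code for real would also need a proper sandbox, which a temporary directory does not provide.

```python
import subprocess
import sys
import tempfile
from pathlib import Path

def run_single_use(code: str, timeout: float = 30.0) -> str:
    """Write generated code to a throwaway directory, run it once, and delete it."""
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "solution.py"
        script.write_text(code)
        result = subprocess.run(
            [sys.executable, str(script)],
            capture_output=True, text=True, timeout=timeout, check=True,
        )
    # The directory, and the code in it, is deleted once the with-block exits
    return result.stdout.strip()

generated = "print(sum(range(1, 101)))"  # stand-in for model-generated code
print(run_single_use(generated))  # 5050
```

The temporary directory is the design point here: the code only exists for as long as it takes to solve the one problem.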

What now?

We don’t have a Universal Solver yet. But it is possible to build components of one into our daily lives. My Project Alfred and some of the projects I’m working on for clients do exactly that: they replace a suite of off-the-shelf products with an intelligent agent installed in the middle of a workflow, often in place of a human who was never hired.

LLMs are proving incredibly helpful for individuals, because they let them unlock latent expertise. Businesses are struggling a bit more. That is mainly because the hype always points toward the shiniest object rather than the most useful one, but also because any company needs a lot of exploration to find the right approach to AI. Off-the-shelf SaaS solutions are often just API wrappers, and once you know what you want, it’s usually easier and cheaper to build your own agent than to try to buy a Universal Solver (mainly because they don’t exist yet).

In my experience, very clear use cases can be found within just a day if done correctly. They rarely replace the work of a full-time employee, but they do unlock hidden revenue in a lot of cases.