Last August I called out the bullshit on how prompt engineering became this be-all and end-all magical solution to everything. Do you remember the $350k per year job opportunities for prompt engineers? Do you know anyone who actually works as one?

Here’s the article for a deep dive. Some statements have changed since, but most of it is still true:

A few months went by and most of the world seems to have caught up with this. But we know that venture funding moves most of the stones in the AI world, so sanity was facing off against its eternal adversary again: FOMO.

The large AI labs needed a new hype.

By the time the prompt engineering craziness started subsiding, Sam Altman published his thought piece called “The Intelligence Age”. In that post he said:

we’ll soon be able to work with AI that helps us accomplish much more than we ever could without AI; eventually we can each have a personal AI team, full of virtual experts in different areas, working together to create almost anything we can imagine.

Whoa, that sounds nice. Cherry on top, OpenAI finished their 12 days of OpenAI series with a bang: introducing o3. Ironically, 12 days of Christmas happens after Christmas in Christian theology, but I’m just nitpicking now.

Then Salesforce decided to stop hiring engineers. Why? Because Agentforce will take care of everything. Then Zuck joined in and said on Joe Rogan’s podcast that AI will soon be able to do a mid-level software engineer’s job.

Reality check

OpenAI is great at talking hyperbole. While we’re about to enter the Golden Age of Intelligence according to the PR department, out in the real world, things are a bit…sillier.

Apple Intelligence was touted as a killer partnership between OpenAI and Apple, until it started claiming that Luigi Mangione killed himself (he didn’t). 1 A week later Apple decided to pull the plug.

The reality is that these AI systems are becoming increasingly good at beating human benchmarks. But that doesn’t mean they’re getting exponentially better; it just means we’re shit at designing benchmarks.

The problem with this is inflated expectations. Do you know why everyone drinks the Kool-Aid of fully autonomous robots and virtual AI assistants?

Because it promises to solve all your problems without having to lift a finger.

It’s a get-rich-quick scheme for nerds.

By now everyone and their neighbour is already fed up with the whole AI Agent thing. Which feeds into the fact consumers already hate AI, but the show must go on, regardless of how old this all gets.

This whole AI agent thing is getting a bit out of hand, while we’re still waiting for these so called AI agents to actually bring us the promised land.

Of course being a contrarian is fun, but jokes aside, how can we make sense of this AI agent thing? What is an agent and why do agents matter now? Let’s try to unpack this, maybe we’ll find a path to actual value and utility.

Operator calling

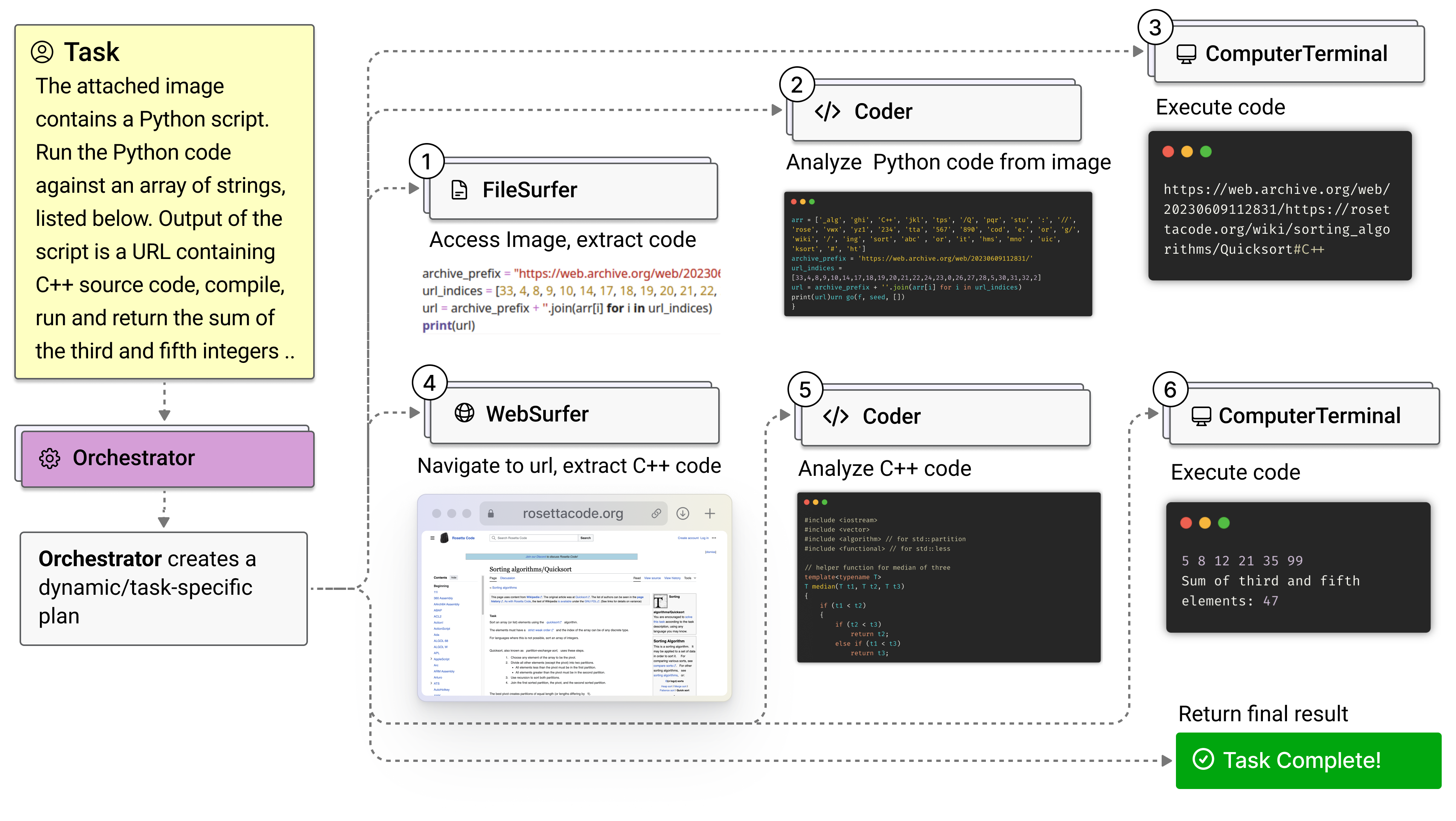

Then yesterday OpenAI launched Operator. It runs a web browser and does things for you. They also published a new model called Computer-Using Agent, and I’m failing to see the difference between this and Anthropic’s Computer Use.

If you read my post “We are not feeling the AGI - Part 1” at the end I show you a demo video from 2023 (!) when I was using AutoGPT to do the same.

Microsoft’s Magentic-One was published in November.

So we definitely got the new hype. Originally I wrote this article on Monday, wanted to post this on Tuesday but then had a bunch of ideas so kept pushing it back. Now I just added this bit to touch on Operator, perfect timing Sam!

Jokes aside, these agents know nothing new. The tech is not new. But as much as these companies try to sell you the idea that agency is something software either has or doesn’t have, it's a tad bit more complicated than that.

Mainly, because of two constraints:

- The more agency you give your system, the more likely things are to go completely sideways.

- The more complex the problems you give your agent, the more data it will need to process to complete them, swallowing tokens as frantically as a frat boy chugging beer on a keg stand.
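Both constraints can be put into rough numbers. The sketch below is a back-of-the-envelope illustration, not a measurement: it assumes each step of an agent succeeds independently with the same probability, and that every step re-sends the whole conversation history, as a typical stateless chat API loop would. The function names and the figures (95% per-step success, 500 tokens per step) are made up for the example.

```python
def chain_success_probability(per_step_success: float, steps: int) -> float:
    """Chance that every step in a chain succeeds, assuming independent steps."""
    return per_step_success ** steps


def cumulative_input_tokens(tokens_per_step: int, steps: int) -> int:
    """Total tokens sent if step i re-sends all i chunks of history so far."""
    return sum(tokens_per_step * i for i in range(1, steps + 1))


if __name__ == "__main__":
    # Assumed numbers: 95% success per step, 500 new tokens per step.
    for steps in (1, 5, 20):
        p = chain_success_probability(0.95, steps)
        tokens = cumulative_input_tokens(500, steps)
        print(f"{steps:>2} steps: success ~ {p:.2f}, input tokens = {tokens}")
```

Under these assumptions, reliability decays geometrically with the number of steps while the token bill grows roughly with the square of it, which is why longer leashes get expensive and flaky at the same time.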

Agency is a spectrum

There is an ultimate trend of humans losing agency to computers. I explored that in my article with the same title.

One of my favorite Substacks covered the problem of agency recently. Jurgen Gravestein’s excellent writing attempts to define what an AI agent is.

He borrows from the book “Intelligent Agents” which was published in 1995 (!) and is considered to be the gold standard in this field. I won’t spoil it for you, but Jurgen concludes that today’s AI Agents meet some of the criteria but not all.

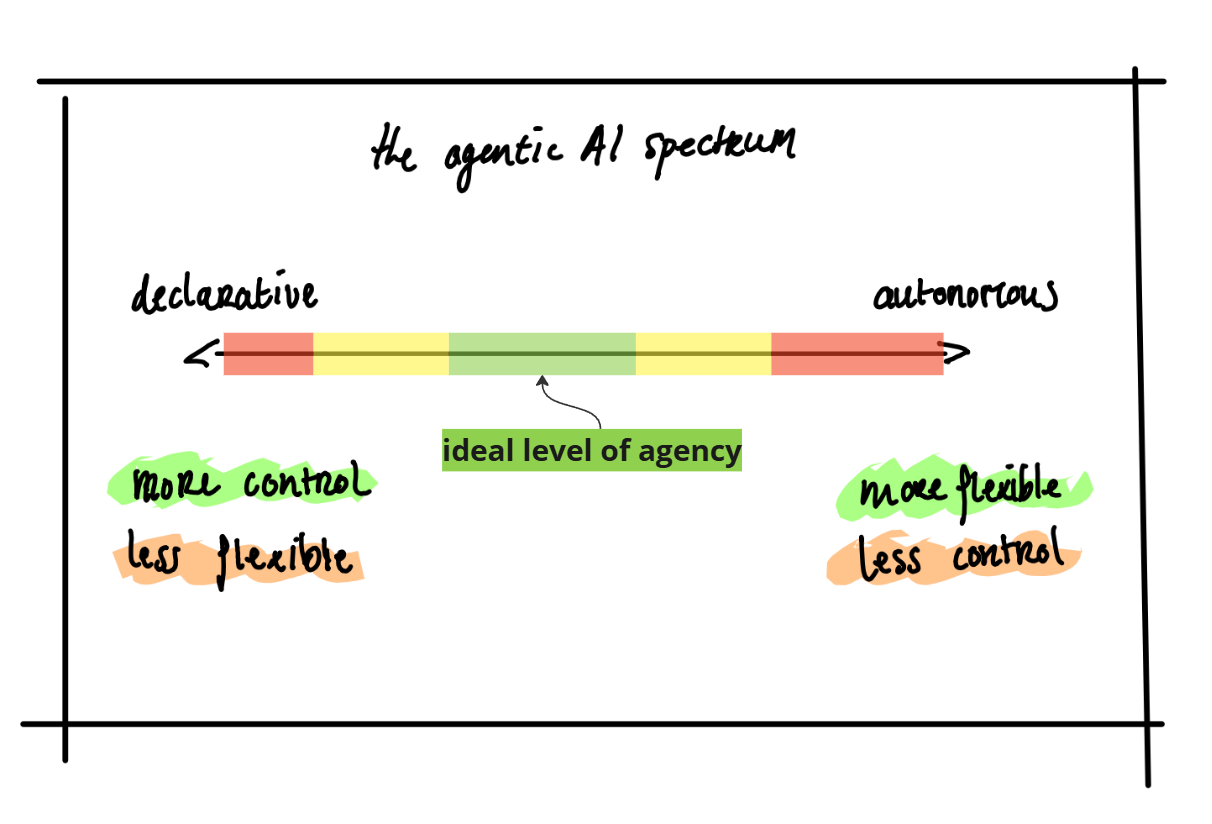

Then he moves on to explore Nathan Lambert’s argument for agency as a spectrum.

That permits us to think of AI systems (or humans) that do not have full agency, meaning they are forced to operate under some constraints. In other words, Lambert thinks there is such a thing as an AI-little-bit-agent.

The more agency your AI has, the less control you have over its output but it will be more flexible in dealing with all sorts of situations. Conversely, the less agency your AI has, the more control you can have over the output, but it won’t be as flexible in handling edge cases.

There must be a sweet spot between declarative and autonomous extremes where you have just the right amount of agency for your AI system.

Borrowing from Jurgen’s post, but updating it, it looks like this:

Think of it like a Goldilocks Zone of Agency2.

The enterprise value of any process (including both AI and humans) is a function of its utility and the level of agency its AI possesses.

Most enterprise processes have 0% AI and 100% human work right now, which means whatever tech is used, it doesn’t have any agency.

Mathematically, one way to capture this is with a simple product of utility and agency, for example

$$E = U(A) \cdot A$$

where E is the enterprise value, U(A) is the utility the system can provide as a function of its agency, and A itself represents the degree of autonomy. By calibrating the right level of agency, sufficient to handle edge cases yet aligned with desired outcomes, you maximize the synergistic value your AI system delivers.
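To make the product of utility and agency concrete, here is a minimal sketch. The curve U(A) = 1 - A² is purely an assumption chosen for illustration (utility eroding as autonomy brings more errors); the article does not specify a shape, and a real process would need its own measured curve. A is scaled to the range 0 to 1.

```python
def utility(agency: float) -> float:
    """Hypothetical U(A): utility erodes as agency grows and errors creep in."""
    return 1.0 - agency ** 2


def enterprise_value(agency: float) -> float:
    """E = U(A) * A, the simple product described in the text."""
    return utility(agency) * agency


def best_agency(grid_points: int = 101) -> float:
    """Grid-search A in [0, 1] for the value that maximizes E."""
    candidates = [i / (grid_points - 1) for i in range(grid_points)]
    return max(candidates, key=enterprise_value)


if __name__ == "__main__":
    a = best_agency()
    print(f"best A ~ {a:.2f}, E ~ {enterprise_value(a):.3f}")
```

With this made-up curve, E is zero at both extremes and peaks at A = 1/√3, a little under 0.6. A different U(A) would move the sweet spot, but the shape of the argument stays the same: neither zero agency nor full autonomy maximizes value.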

There is a technological limit though.

Once you move out of the Goldilocks Zone towards the autonomous side, you introduce more errors, hallucinations, and bad output. Here you need humans to correct the AI system’s mistakes before its work is signed off, so that you regain control.

Once you move out of the zone towards the declarative side, you introduce more unhandled edge cases and bad inputs. Here you need to preprocess the input so your AI system can handle it.

So for most use cases I’d argue that you don’t actually need a fully autonomous AI agent. You just need an AI-little-bit-agent.
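As a rough sketch of what an AI-little-bit-agent could look like in code: preprocess the input on the way in, let the model act on the cleaned input, and hand anything shaky to a human before sign-off. Everything here is hypothetical, including the `AgentResult` type, the `confidence` score, and the 0.8 threshold; real systems would need their own signal for when to escalate.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class AgentResult:
    output: str
    confidence: float  # hypothetical score in [0, 1], not a real model output


def run_constrained(
    raw_input: str,
    preprocess: Callable[[str], Optional[str]],
    agent_step: Callable[[str], AgentResult],
    review_threshold: float = 0.8,
) -> Tuple[str, Optional[str]]:
    # Declarative side: normalize or reject input the system cannot handle.
    cleaned = preprocess(raw_input)
    if cleaned is None:
        return ("rejected_input", None)

    # Autonomous side: let the model act, but only on cleaned input.
    result = agent_step(cleaned)

    # Human sign-off for anything the system is not confident about.
    if result.confidence < review_threshold:
        return ("needs_human_review", result.output)
    return ("auto_approved", result.output)


# Stand-ins for illustration only.
def strip_or_reject(text: str) -> Optional[str]:
    text = text.strip()
    return text or None


def fake_agent(text: str) -> AgentResult:
    # Pretend short requests are easy and long ones are risky.
    confidence = 0.9 if len(text) < 20 else 0.5
    return AgentResult(output=text.upper(), confidence=confidence)


if __name__ == "__main__":
    print(run_constrained("  refund order 42 ", strip_or_reject, fake_agent))
```

Raising `review_threshold` pushes the system towards the declarative end (more human control), while lowering it pushes towards the autonomous end.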

We lack a good Agentic Framework

We do not have a production-ready framework for this. There are a few early attempts, such as LangGraph, AutoGen, and OpenAI Swarm, but it’s still very early days.

A good comparison would be computer ports. Do you remember this Concerto of Computer Ports before standardization?

Yeah, we probably use like 5 of those now, at best. But we still have the Concerto of Agentic Frameworks.

The problem with this is these frameworks are created by AI Labs, primarily driven by researchers. Their main motivation is science, not shareholder value.

From a humanistic perspective, that’s awesome, but from an adoption perspective it leads to this3, which is a quote from the Octomind blog:

LangChain was helpful at first when our simple requirements aligned with its usage presumptions. But its high-level abstractions soon made our code more difficult to understand and frustrating to maintain. When our team began spending as much time understanding and debugging LangChain as it did building features, it wasn’t a good sign.

These technologies are not ready for production-level environments. They’re too abstract, too theoretical.

The solution is boring

Software has increased its potential to create enterprise value by at least two orders of magnitude. But at the end of the day, it’s just software. It must make sense from a business perspective, which requires discovery, consensus building, careful architecture planning, and consulting.

That’s boring, but it’s real.

What’s not boring would be to plug in a magical cloud-based AI agent, one you cannot even fully comprehend, that automagically solves all your problems.

The good news is that the enterprise is starting to come out of the hopium shock of reading too much Altman on X. The bad news is that it’s going to take an awful lot of work to make AI systems generate value even with the technology we have today, and I don’t see any indication that more advanced AI systems won’t make this issue even bigger.