I have a love-hate relationship with chess. When I was a kid, I was very fascinated by it so I did what most kids would do and I pleaded with my father to teach me to play. I lost, every single time. Nothing is surprising about that, I was a kid playing against an adult.

I started reading about chess. Learning about openings and strategies. I was not a frequent player but by the time I was a teenager, I was fairly confident in my head that I had all the knowledge I needed to beat my dad in the game.

So one day after dinner was finished I challenged him to a match at the kitchen table. He was very laid back. For him, it was a fun Friday evening pastime with his son.

For me, it was about everything. Honor. Respect. I started to see myself in a binary world. I either win or I lose this game. If I win, I win everything. If I lose, I lose everything.

I took my time every time I needed to make a move. I was slow, meticulous, and calculating. I was using everything I learned from the books. For years I have faced this unconquerable foe and now here I was, challenging him as his equal.

Yeah, there are a lot of Jungian layers to this story.

By the 20-minute mark, he started to become focused. He realized something was different. We were still playing and I was gaining the upper hand. He stopped smiling.

I noticed this. I became more confident and I gained strength from the early signs of success.

Then my dad changed his strategy.

He looked at me in the eye and asked:

“Any girl catching your attention recently?”

I looked at him, perplexed. He never asked me this. My parents were always pretty chill about my love life even when I was a teenager and let me share what I felt like sharing.

I immediately got rushed with a thousand thoughts and impressions as my brain was trying to automatically conjure an answer to the question.

Then all of a sudden I had another thought:

“Stop. Focus on the game.”

I didn’t realize it then but this was the moment I lost the game. My focus was broken. I lost my train of thought. As I learned years later this happens frequently to people with ADHD. Until then I was in hyperfocus and with a simple question I went from a probable win to an inevitable loss.

About 15 moves later Dad looked at me smiling and said the magic words: “Checkmate”.

Later that night he explained to me that he realized I had become so good at the game that he couldn’t win unless he could disrupt my thinking. So he tried to disrupt me, to fill my head with thoughts that would prevent me from winning.

I never played with him again.

LLMs can reason

On September 21st a research team from Stanford, Chicago, and Google published a paper with the title “Chain of Thought Empowers Transformers to Solve Inherently Serial Problems”.

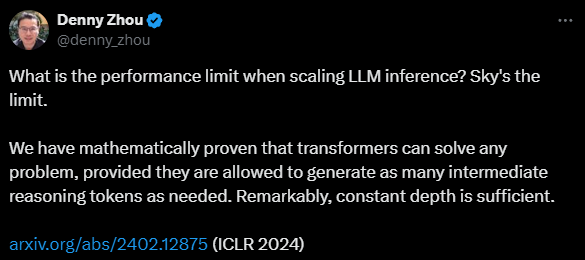

Shortly after one of the authors, Denny Zhou posted on X, claiming that they’ve mathematically proven that “transformers can solve any problem, provided they are allowed to generate as many intermediate reasoning tokens as needed”.

Zhou claims that Chain of Thought prompting significantly enhances the ability of LLMs to tackle problems. Particularly those requiring serial computation (tasks that require steps to be performed in a specific sequence).

Like thinking through possible next moves one after the other as I was playing against my father.

It’s almost like having mathematical proof that a chess student, when given a series of moves (the CoT prompt) outlining a strong chess strategy, can successfully follow these moves and win the game. This demonstrates an ability to perform complex computations (analyzing the board and planning future moves) in a specific sequence.

This is how I tried to win.

Zhou's paper shows that CoT expands the range of problems LLMs can theoretically solve and improves their performance on tasks requiring serial computation.

From the outside, this might seem that the LLM is now exhibiting a rudimentary form of reasoning. It is solving the problem after all.

Chain of Thought is not new. LangChain has been around for years and you can develop text generation driven by CoT. But it’s the first time it opens the door to solving problems at scale.

LLMs can’t reason

We didn’t have to wait for long for this conversation to have its next entry. On Oct 7th, Apple published a breakthrough paper. The researchers challenged the notion that LLMs possess genuine reasoning abilities. They argue that the reasoning process in LLMs appears to be more akin to pattern matching than formal logical deduction.

One of the most striking examples of this was the LLMs’ sensitivity to input variations. They observed that LLM performance can fluctuate drastically when even minor changes are made to the input, such as altering numerical values or word order.

Or you know…asking an irrelevant question as part of the process.

Just like I was, the same student from the previous example might struggle in a real game against a skilled opponent. They might make seemingly illogical moves, fall for simple traps, or become easily distracted by their opponent's tactics.

This fragility suggests that their understanding of chess is still superficial and lacks the robust, adaptive reasoning we associate with a true chess master.

The only problem with it is that if it’s fragile, it’s not logical.

Transformers in retrograde

Reasoning is a big topic. This is a fundamental leap towards building AI systems that can generate novel scientific discoveries.

Altman admitted to this a year ago, when he said:

”We need another breakthrough. We can still push on large language models quite a lot, and we will do that. We can take the hill that we're on and keep climbing it, and the peak of that is still pretty far away. But, within reason, I don't think that doing that will (get us to) AGI. If (for example) super intelligence can't discover novel physics I don't think it's a superintelligence.”

Then the whole world went nuts about the rumored Q* development that could do just that.

Then it was renamed to Strawberry, then o1 was published and it’s impressive.

Slow, but impressive.

But it was just another episode of Smoke & Mirrors because all we got was “LangChain as a Service”. It looks as if the entire AI industry got trapped in its big visions and promises. The entire market is now awaiting AGI and will settle for nothing less.

Whenever something new is announced the whole market jumps on it and claims we’re making a huge step towards AGI.

It happened with Devin in March.

Then it happened with the dystopian Black Mirroresque gadget, Friend.

Then finally o1 claims it solved reasoning.

How do you prove it?

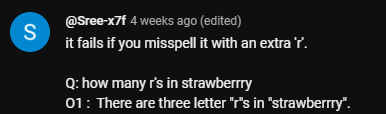

You got it. Count the number of r’s in “strawberry”. The comments were…unsurprising.

Watch the video. It’s a mouthful. A lot of fancy talk justifying how it gets it right.

Until…it doesn’t.

Maybe it’s not that o1 cannot reason, it’s just that transformers are currently in retrograde.

Talks about emerging features are exciting. Discussing all the philosophical arguments about AI and human relationships and how the vision is unfolding in front of our eyes is even more exciting.

But it’s becoming more like a religion than science. Jurgen Gravestein captured this a lot better than I can so I recommend his piece for further reading.

Among this religion, I see patterns.

Founders and venture capitalists marketing hyperbole.

Thinkers follow suit, discussing technologies that don’t even exist yet.

Then the third part of the AI brainrot trifecta:

ChatGPT enables the junk food equivalent of thinking.

People copypasting verbose ChatGPT responses to questions, completely killing real, human conversation. I’ve seen it way too many times running my education company and see it spreading like wildfire on social media platforms too.

This is the dark side of the AI industry: it’s become astrology for nerds.

We are stochastic parrots

Most comments I read about what LLMs can or cannot do are related to how good or bad is the code it writes. Lot of benchmarks test LLMs against coding tasks.

I find this so incredibly ironic because the consensus is that LLMs might produce working code but it’s shit.

Here’s why it’s ironic: Code is logic.

A great way to have a “neurosymbolic hybrid” — as Gary Marcus calls what could become AGI — would be having an LLM that produces and executes its own code…I wrote about this two weeks ago, I called this the Universal Solver.

Then we claim it reasons because we can see some magical emerging properties that allow us to solve problems.

Except it’s not a black box.

This is why I love the stochastic parrot analogy because that’s what LLMs are.

Do they actually reason? No.

Do they actually solve problems? Yes.

As a computational physicist friend of mine told me 10 years ago:

Correlation does not mean causality but 9 times out of 10 you can get away with it.