After my wedding day, both of us got sick. So I was stuck in bed for a week and, apart from watching 4 seasons of The Good Doctor, I ended up building a world simulator.

Let me introduce you to this little guy. It lives in a small little world called Enki so I guess that makes it an Enkian. Enkite. Enkling. Okay, I need to work on that.

In this post

- I will introduce you to a problem with some analysis: Can AI evolve?

- Then I will try to answer it.

- Then I will introduce you to Enki.

Neurosymbolic Hybrids

If you remember my first post, I made a statement there: AI is terrible at managing outliers and it has been terrible at it for 30 years.

Our brain uses symbolic systems (a general set of rules) to manage outliers, but neural networks cannot work with symbolic systems. I explained that humans and AI need to work together as a “neurosymbolic hybrid” where the neural network is governed by symbolic systems.

Without it, AI is going to have a really hard time achieving true agency.

As Gary Marcus explained, you don’t want an autonomous car that will only avoid crashing into trucks it has seen during training. You want your car to avoid crashing into big things in general.

Hm.

Wish we had a way to create these general sets of rules to follow, like:

if distance_to_object < threshold:
    if object_size >= small:
        evade()

What a sneaky little way of thinking. Maybe we could call it Python or something.

Jokes aside, symbolic systems are how we operate. We use computer code, mathematics, and physics to describe the general set of rules governing our lives.

Humans are already neurosymbolic hybrids.

We have this electric fat goo in our skull that acts as a neural network but it cannot just do whatever it wants. It can only work under constraints, like physics.

You could argue that when we dream, that’s where the brain LLM hallucinates, without constraints. After all, have you tried looking at your hands in your dreams?

But how do you get the brain to abide by the rules of physics? Easy, not doing so hurts. You have a body that can only operate under the constraints of physics. Most of those constraints are coded in your DNA.

Disclaimer: I’m making a lot of simplifications here that might make a lot of people angry. Anyway.

The Quartet of Life

So we have the Electric Fat Goo that is limited by the Body, that is limited by our DNA, that is limited by Physics. I’ll call this the Quartet of Life.

We are trying to replicate this. But neural networks don’t understand the set of rules that governs our world.

This is why Sora was such a big deal: it seems to be doing more than generating video frame by frame, and behaves as if it were running a physics engine. As Haque (2024) says, “Sora has the potential to be a world model”. That matters because a world model would mean we have a symbolic system for how the world works that can be translated into code.

However when you look at OpenAI’s website, they say:

These capabilities enable Sora to simulate some aspects of people, animals and environments from the physical world. These properties emerge without any explicit inductive biases for 3D, objects, etc.—they are purely phenomena of scale.

In other words: we have no idea why this is happening, but we’re very excited about this.

Why? Because if you have a world simulator, you can use the rules of the world to determine how its inhabitants could behave. Not through rules but through constraints.

You know, like “Don’t jump off a cliff because you’ll die.”

However, you’ll still have some creatures that will just yeet themselves off a cliff anyway. Bad news: yeeting means dying. Good news: it won’t happen again.

Nature’s solution to weeding out insufficient constraints is evolution. Winning strategies will survive, losing strategies will go extinct. That’s what our DNA is for.

So a hypothesis arises from this: if AI could evolve, maybe it could get smarter on its own. But can AI evolve?

Coding Evolution

I also played a lot of Spore when I was a kid, and I’m following the work of Léo Caussan on The Bibites with awe. But one of the biggest influences on my thinking was David R. Miller, who is responsible for probably the biggest mic drop moment in YouTube history. Three years ago, David published the last video on his channel, titled:

“I programmed some creatures. They evolved.”

…and he never published another video again.

In that video he spends an hour explaining how he basically created the Quartet of Life and then put the whole thing on GitHub.

Unfortunately his biosim4 never had a GUI and I’m a sucker for visuals. I also don’t know shit about C++, only some Python and JavaScript, and I even suck at those. However, this did not stop me from looking in the mirror and saying:

I’m going to build my own world simulator.

Introducing Enki

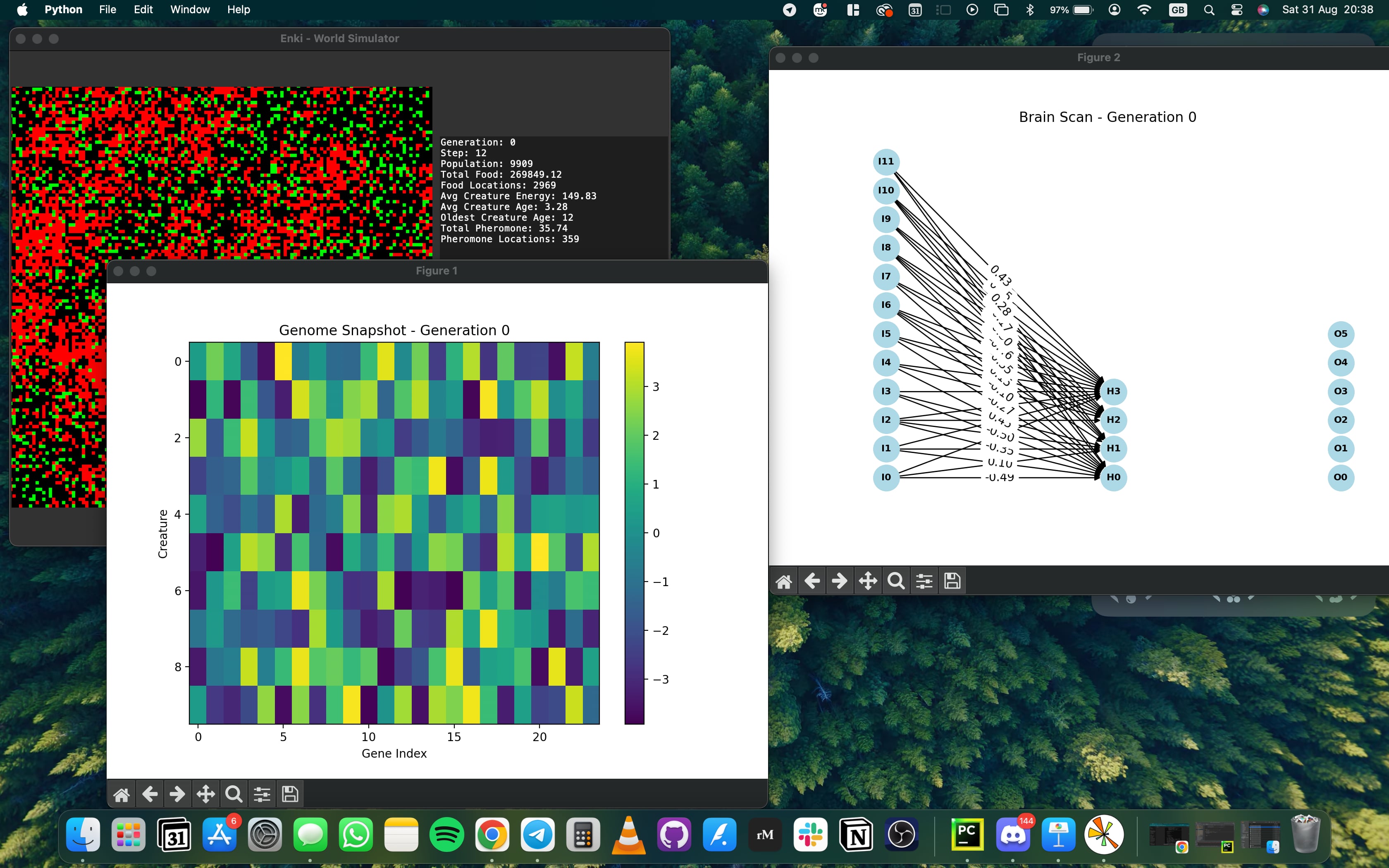

Disclaimer: It’s important to note that I did not write a single line of code. The only thing I did manually was change the neuron’s radius from 40px to 20px once. Cursor AI understands the entire codebase and writes the code for me. It takes a lot of debugging, but what you see below took me a bit less than 2 days.

Enki is heavily based on Miller’s work. It simulates a world with 1000 creatures in it. It measures time in years, where 1 year = 1 action by each creature. Very few parameters are hard-coded, such as the probability of food (a green pixel) appearing. These will become more dynamic in the future.

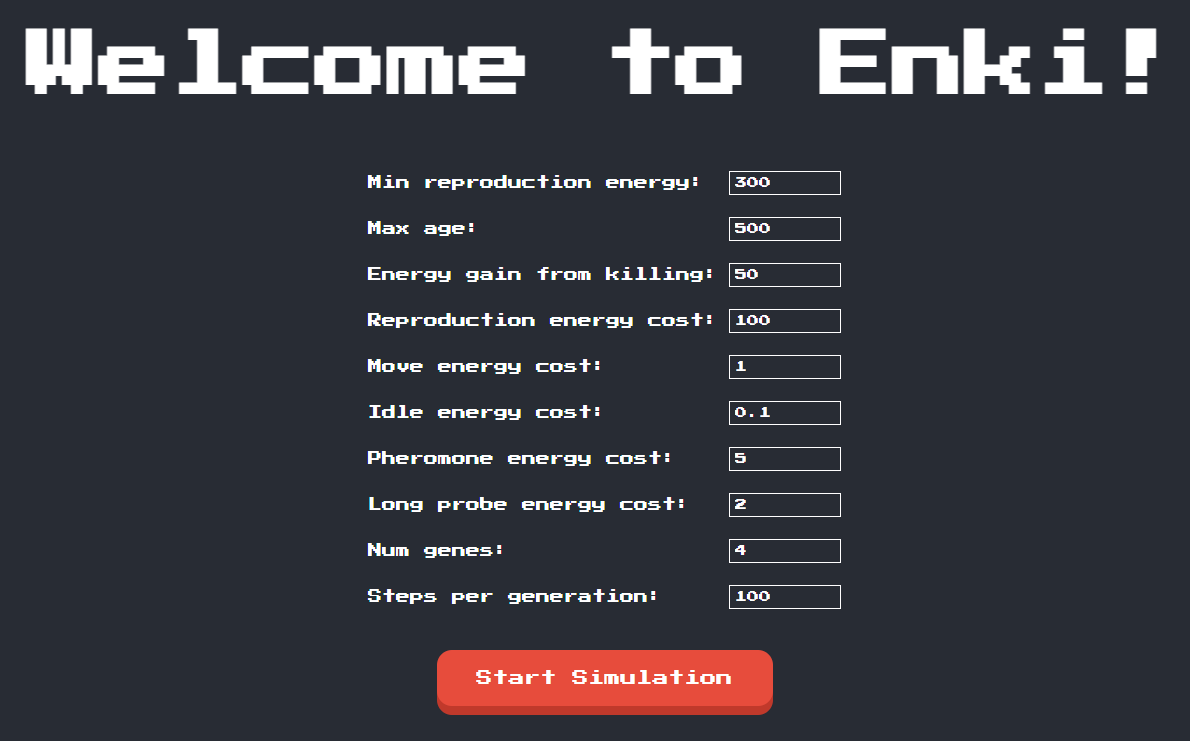

There are quite a few parameters you can change to see how the creatures’ behavior changes with them. It’s fun to play around.

Once you click on “Start Simulation” a few things happen:

The World

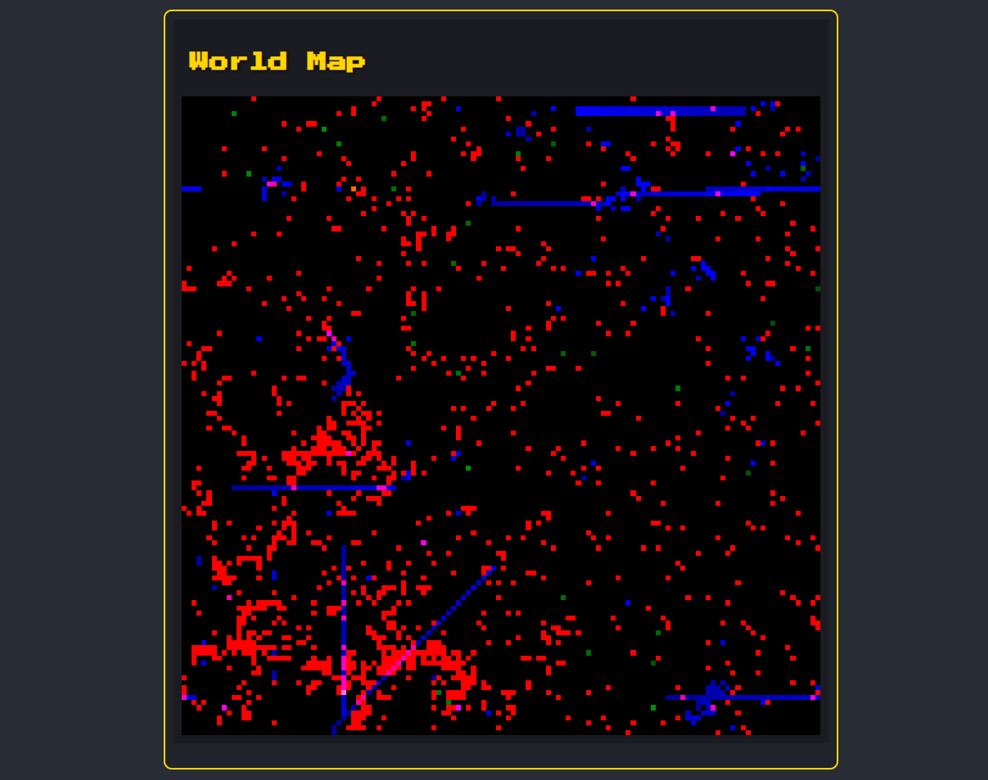

The world is a 128x128 grid where each creature is a red pixel, the pheromone emitted by them is blue and food is green. I think the creatures in the simulation below were a bit hungry.

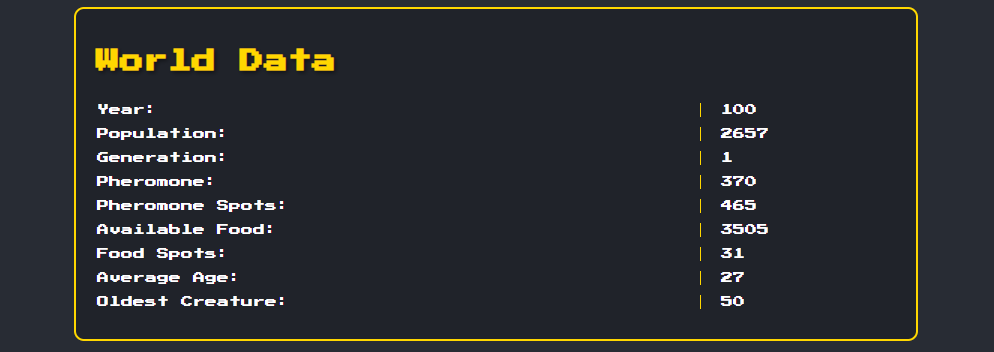

The simulation lets each creature act once every year. This is the timestep of our simulation. Some stats about the world are shown in the interface, and these will make more sense once you understand how the creatures work.
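To make the timestep concrete, here’s a minimal sketch of a “one year = one action per creature” loop. The `Creature` class, its `act` method, and the random-step behavior are placeholders I made up for illustration; they are not Enki’s actual code, where the action comes from the creature’s brain.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

GRID_SIZE = 128  # the world is a 128x128 grid


@dataclass
class Creature:
    x: int
    y: int

    def act(self, rng: random.Random) -> None:
        # Placeholder action (assumption): one random step, kept inside the grid.
        dx, dy = rng.choice([(0, 1), (0, -1), (1, 0), (-1, 0)])
        self.x = min(max(self.x + dx, 0), GRID_SIZE - 1)
        self.y = min(max(self.y + dy, 0), GRID_SIZE - 1)


def run_simulation(creatures: list[Creature], years: int, seed: int = 0) -> int:
    """Advance the world year by year; every creature acts once per year."""
    rng = random.Random(seed)
    actions = 0
    for _year in range(years):
        for creature in creatures:
            creature.act(rng)
            actions += 1
    return actions


population = [Creature(x=64, y=64) for _ in range(1000)]
total_actions = run_simulation(population, years=3)
```

With 1000 creatures and 3 years, `total_actions` counts one action per creature per year.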

The Creatures

The Enkians. Enkites. Enklings. Ugh, please help me find a good name for them. They have a brain to understand the world around them and a DNA that governs how their brain will work. Let’s look at the brain first.

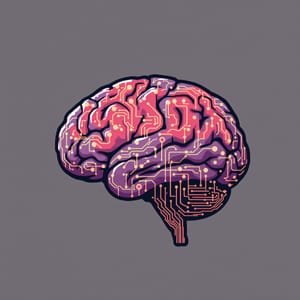

Neurons

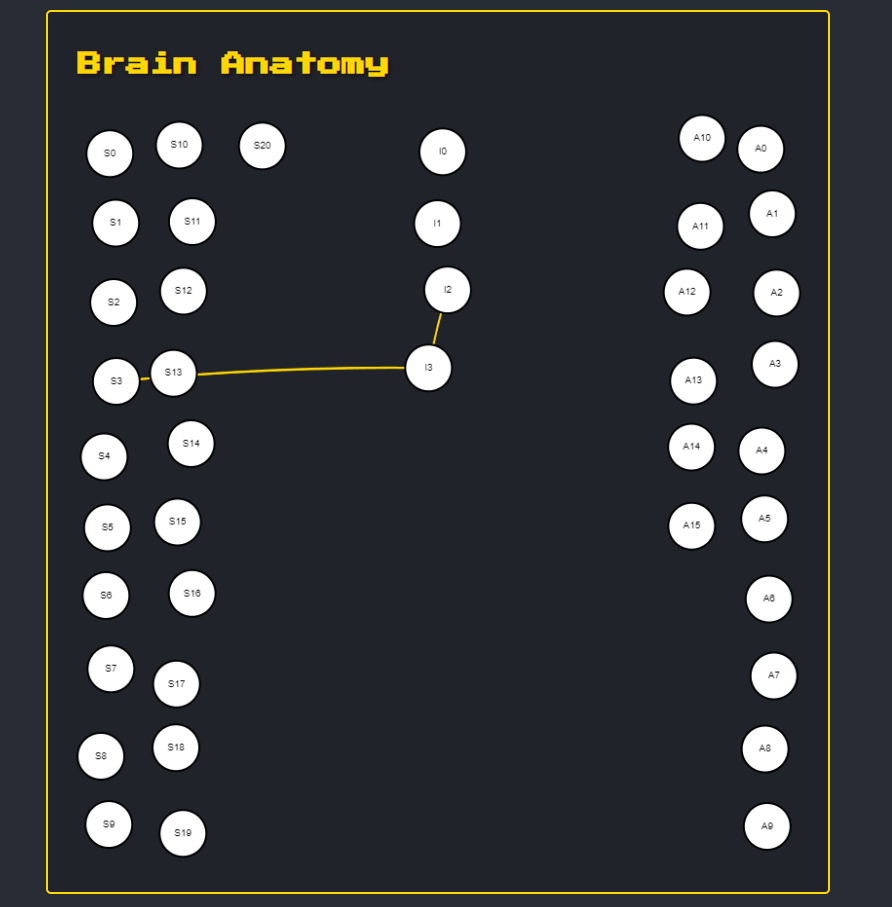

Each creature has a unique brain. This is a visualization of the “median brain” during a simulation, to show what it looks like.

They have 21 neurons that process sensory inputs and 16 neurons that are responsible for action outputs (11 actually, some are redundant). They also have 4 internal neurons that can be used for more complex actions if needed but I haven’t assigned them any use yet.

This brain works as a simple feedforward neural network.

This is mimicking Miller’s logic, and there will be a more detailed version of the anatomy in the repo (once I write the documentation properly), but here’s the gist of it:

Sensory Neurons: Process location and environment information, social information (population), and oscillator information. The oscillator is a multifaceted thing in the simulation, i.e.: if 2 creatures oscillate similarly, they have a higher chance to reproduce. In other words, two creatures can only become Mama and Papa if they vibe together.

Action Neurons: Can move in different directions (spatial), set the oscillator, probe ahead, emit pheromones, or set responsiveness. The latter is not really well implemented yet because Cursor AI thought this meant “reset the brain” and I only realized it late. But responsiveness should govern how reactive the creature is. Miller’s code also has a Kill neuron, but I’ve left that out for now because the creatures just ended up killing each other most of the time.

Connections

I had some bugs with this last night, so I’m not sure it’s working as it should yet. But here’s the gist of it. When two neurons are connected, one is always the source (sender) and the other the sink (receiver). The source neuron can excite the sink neuron (yellow) or inhibit it (white), which is defined by the weight of the connection.

The weight of the connection is a randomly generated 16-bit integer, which is then converted into a floating-point number to be used in the neural network’s calculations.

Whether or not two neurons are connected is defined by the genome, so let’s take a look at that.

Genome

This is still a work in progress in the code as I’m rebuilding Miller’s logic.

Each gene contains a bunch of information:

- Source type (sensory or internal) stored in 1 bit

- Source neuron ID stored in 7 bits

- Sink type (internal or action) stored in 1 bit

- Sink neuron ID stored in 7 bits

- Weight (the 16-bit integer)

So we have these 32-bit genes that can be nicely represented by 8 hexadecimal digits, which means that if our creature has 4 genes, it could look something like this:

9286420B 2385A97F 23B08EF0 A21BBBD3

How it works

Let’s take a simple case. We have a creature with all of these neurons and one gene: 9286420B. Let’s see what this gene will do.

First, let’s convert the gene 9286420B to binary:

10010010100001100100001000001011

This gene means the following:

- Source type: 1 (sensory neuron)

- Neuron ID: 18

- Sink type: 1 (action neuron)

- Neuron ID: 6

- Weight: 16907, converted to floating-point: 0.1475

The #18 sensory neuron is RANDOM and the #6 action neuron is SET_OSCILLATOR_PERIOD.
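If you want to check the decoding yourself, here’s a small sketch that unpacks a gene with bit shifts and masks, following the field layout listed above. The names `Gene`, `decode_gene`, and `decode_genome` are mine, not from Enki or biosim4. I also assume a type bit of 0 means “internal”, and I leave the weight as the raw integer because the exact integer-to-float scaling isn’t spelled out in this post.

```python
from __future__ import annotations

from typing import NamedTuple


class Gene(NamedTuple):
    source_type: int  # 1 = sensory, 0 = internal (assumed meaning of 0)
    source_id: int    # 7-bit source neuron ID
    sink_type: int    # 1 = action, 0 = internal (assumed meaning of 0)
    sink_id: int      # 7-bit sink neuron ID
    weight: int       # raw 16-bit integer, not converted to float here


def decode_gene(hex_gene: str) -> Gene:
    """Split a 32-bit gene (8 hex digits) into its five fields."""
    value = int(hex_gene, 16)
    return Gene(
        source_type=(value >> 31) & 0x1,  # top bit
        source_id=(value >> 24) & 0x7F,   # next 7 bits
        sink_type=(value >> 23) & 0x1,    # next bit
        sink_id=(value >> 16) & 0x7F,     # next 7 bits
        weight=value & 0xFFFF,            # low 16 bits
    )


def decode_genome(genome: str) -> list[Gene]:
    """Decode a whitespace-separated string of hex genes."""
    return [decode_gene(g) for g in genome.split()]


gene = decode_gene("9286420B")
bits = format(int("9286420B", 16), "032b")
genome = decode_genome("9286420B 2385A97F 23B08EF0 A21BBBD3")
```

Here `gene` comes out as source type 1, source ID 18, sink type 1, sink ID 6, and weight 16907, matching the list above.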

Simply put, at the beginning of each year, this neuron generates a random number. This number is then multiplied by the weight set in the gene, which will give us the sensory neuron’s output.

Let’s say this random number is 0.5. This means that our Oscillator value is 0.5 × 0.1475 = 0.07375.

This value gets added to the SET_OSCILLATOR_PERIOD action neuron’s input.

Firing a neuron

The action neuron’s inputs are summed and passed through a hyperbolic tangent function, which results in a number between -1 and +1. That number is then scaled and clipped to get the final value, which in this case is 1.

Our creature, thanks to this gene, just set its Oscillator to a period of 1.
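Here’s a rough sketch of the sum, tanh, scale, and clip steps for the example above (random number 0.5, weight 0.1475). The function name and the `scale`, `low`, and `high` values are assumptions I picked so the example lands on a period of 1. The post doesn’t state Enki’s real scaling or clipping bounds, so treat these numbers as placeholders.

```python
import math


def fire_action_neuron(inputs, scale=10.0, low=1.0, high=10.0):
    """Sum the inputs, squash with tanh, then scale and clip.

    scale, low, and high are illustrative assumptions, not Enki's values.
    """
    total = sum(inputs)                 # sum everything arriving at the neuron
    squashed = math.tanh(total)         # now between -1 and +1
    scaled = squashed * scale           # stretch into the target range
    return min(max(scaled, low), high)  # clip to [low, high]


weighted_input = 0.5 * 0.1475  # random number times connection weight
period = fire_action_neuron([weighted_input])
```

Under these assumed bounds, the small input gets squashed to roughly 0.07, scaled to roughly 0.7, and then clipped up to the minimum period of 1.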

Why is this relevant?

Because the Oscillator governs reproduction. If there’s another creature within a distance of 10 tiles with an Oscillator period that’s greater than 0.5, they will reproduce and bring a child to the world of Enki.

In other words, our Creature is now ovulating.
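The reproduction rule described above could be sketched like this. The `Creature` fields and `find_partner` are names I invented for the example, and I’m assuming straight-line (Euclidean) distance on the grid, since the post doesn’t say how distance is measured.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

MAX_MATING_DISTANCE = 10  # tiles, from the rule above
MIN_PARTNER_PERIOD = 0.5  # partner's Oscillator period must exceed this


@dataclass
class Creature:
    x: int
    y: int
    oscillator_period: float


def find_partner(creature: Creature, others: list[Creature]) -> Creature | None:
    """Return the first creature close enough and oscillating enough, if any."""
    for other in others:
        if other is creature:
            continue  # a creature cannot pair with itself
        # Euclidean distance is an assumption; the metric is not specified.
        distance = math.hypot(other.x - creature.x, other.y - creature.y)
        if distance <= MAX_MATING_DISTANCE and other.oscillator_period > MIN_PARTNER_PERIOD:
            return other
    return None


me = Creature(x=10, y=10, oscillator_period=1.0)
too_far = Creature(x=50, y=50, oscillator_period=1.0)
too_quiet = Creature(x=11, y=10, oscillator_period=0.2)
good_match = Creature(x=13, y=14, oscillator_period=0.9)
partner = find_partner(me, [me, too_far, too_quiet, good_match])
```

In this toy setup only `good_match` qualifies: it is 5 tiles away and its period is above 0.5.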

How I built it

At first, I built a monolithic script with Claude 3.5 Opus and ran it in PyCharm locally. Then when the script became too big for Claude I went over to GPT-4o, but even then it was a monster.

I was running the first versions locally on my Macbook and frankly I’m surprised it didn’t explode.

The first version had a bunch of the logic programmed into it through a series of arbitrary decisions, which was later partly replaced with TensorFlow.

That’s when my Macbook gave up.

I realized that this was a pretty stupid way to build Enki, so I changed my approach. I moved over to my PC, fired up Cursor AI, and looked up the work of others. That’s when I rewatched Miller’s video and pulled up the biosim4 code. I refactored my monster script into components with Cursor AI and implemented the current build. Sometimes I would give the relevant files from biosim4 to Cursor AI and it would rebuild them in Python to fit Enki’s logic.

Do you want to check this? Here’s the repo link.

What’s next

Now that my creatures can live, reproduce, and die, I can move on to the next level: running different tests for emergent behavior. That will require a more complex world with more elements, such as predators and different resources, so I can observe how the creatures react.

As you can see, just because you model the world and build a symbolic system to process the world around you, agency won’t happen immediately. But I’m curious to see what kind of behaviors I’ll see when I make Enki just a bit more complicated.

Now back to Alfred, maybe.