Yesterday OpenAI announced GPT-5 while a few of us were on a live Q&A session on Zoom, so we started following the events live. I immediately got API access, so I started testing it. Here's my take.

"What have we done?"

GPT-5 is impressive in a lot of ways but it also disappoints in a lot of ways. But the shock of doing something that we thought was unthinkable did not arrive. This is nowhere near the Manhattan Project, but I understand how many will feel like that. Let me feed your context window a bit.

The Context

I spent a few years in the blockchain industry and I've seen how people would go crazy and think that the most recent revolution will sweep away everything we knew to be working and we will need to reimagine E V E R Y T H I N G.

When that happens, capital becomes cheap and you always have at least one player who starts blitzscaling.

Reid Hoffman, who popularized the term, makes a very bold prediction: that ChatGPT may become the first AI that most of the 8 billion people on our planet use. That's the overarching goal of OpenAI, not creating AGI or bettering humanity. Capturing market share and creating a monopoly.

If you've been following Silicon Valley it should come as no surprise that the golden child of the Valley is executing the ultimate Silicon Valley plan. Come on.

So what's happening exactly? Let me give you a few facts:

Jony Ive joined OpenAI

ChatGPT went from 4o, 4.1, 4.1-mini, o3, o4-mini and o4-mini-high to just GPT-5

OpenAI just raised $8.3 billion and has 5 million paid users

ChatGPT is on track to hit 700 million weekly active users

Now this is the context bit. Let's start thinking about it.

Reasoning about the Good

The bad-but-good numbers

5 million paid users out of 700 million total means ~0.71% conversion rate. If ChatGPT is treated as a freemium, consumer SaaS product, that's not a very good number. Even at scale you'd want to see something around 2-3% at least but the ideal is 5-8%.
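The arithmetic is easy to check. Here's a minimal sketch using only the two figures quoted above; the function name is made up for illustration, and dividing paid users by weekly active users is the same rough approximation this post makes, not an official metric.

```python
def conversion_rate(paid_users: int, total_users: int) -> float:
    """Return paid users as a percentage of total users."""
    if total_users <= 0:
        raise ValueError("total_users must be positive")
    return 100 * paid_users / total_users


# Figures quoted in this post, not independently verified here.
paid = 5_000_000
weekly_active = 700_000_000

rate = conversion_rate(paid, weekly_active)
free_share = 100 - rate

print(f"paid conversion: {rate:.2f}%")   # paid conversion: 0.71%
print(f"free users: {free_share:.2f}%")  # free users: 99.29%
```

The flip side of a 0.71% conversion rate is that roughly 99.29% of weekly users are on the free tier.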

Of course, since we established that they're blitzscaling, they don't care about monetization. They're following in the footsteps of Zuck, Spiegel and many others: if you have reached product-market fit, shove as much money into capturing market share as possible until you create a monopoly. Then charge whatever the fuck you want. If you don't believe me, read Peter Thiel's Zero to One, which explains that all startups should aim to create a monopoly.

Okay so that means that OpenAI is willingly burning cash to make people come to their table instead of Gemini, Claude or Grok. Here's where things get interesting.

Perception is reality

The majority of humans using AI chatbots are using the free version of ChatGPT. If you're reading this, chances are that you are a paid subscriber to at least one of these, so your perception of what AI can do is mainly driven by o3 versus Claude Opus 4.1 or Grok 4 Heavy. You have been using Deep Research for a few things, maybe even vibe coding with Lovable or Claude Code.

It's easy to think that's how everyone sees it. But it's not. For 695 million weekly active users, the core perception of ChatGPT's capabilities was whatever GPT-4o could do. The model that was launched over a year ago. The model that I haven't used in ages. That's what almost a billion people think of when they say "ChatGPT".

Until yesterday.

Since then the entire world has been using something that's roughly on par with juggling between 4.1, o3 and o4-mini, sometimes Gemini 2.5 Pro and maybe Claude Opus 4.1. It beats some models by a few percentage points and underperforms against others.

But that's not the point. The point is that 99.29% of ChatGPT users (695 million out of 700 million) experienced a time jump from May 13th 2024 to August 7th 2025. They skipped the whole shebang of models competing with each other and the reasoning debate. They just see that going from GPT-4o to GPT-5 is a big jump.

All the PR around how scary this is and how much of a breakthrough the new GPT-5 is...you weren't the audience.

No, the audience was Masayoshi Son, CEO of SoftBank, who invested over $33 billion into OpenAI, and Erzsike, my mom's 73-year-old neighbor who talks to ChatGPT because she's lonely.

Because that old lady doesn't understand jack shit about reasoning models or hallucinations. She doesn't know anything about RAG and she doesn't really care about MCP servers. For her, "Chat" just became a hell of a lot smarter. Not because it is, but because it feels like it.

1000 PhDs in your pocket

Altman loves to make the claim of "PhD level intelligence" which makes absolutely zero sense. Just ask anyone who actually has a PhD in anything. You can see the aspiration to become the next Steve Jobs everywhere from literally buying Jony Ive to echoing the famous iPod sentence (remember a thousand songs in your pocket?).

But the most important decision Jobs ever made was replacing Phillips screws with Pentalobe ones on Macs. Almost everyone has a Phillips screwdriver set at home and almost nobody has a Pentalobe set. That's still true today.

By locking Apple into its own ecosystem, wrapping it into a black box, Jobs created the myth of "just works" and the market loved it.

Altman figured that his users will probably prefer convenience over performance so he is desperately trying to become the next Apple by forcing all users to have a unified user experience. That simplicity allows for faster growth, which leads us back to blitzscaling.

If you're a power user and create a simple model selector router for yourself that decides when to use GPT-4.1, when to use o3 and when to use o4-mini, you'll have basically recreated most of GPT-5's capabilities. But that doesn't mean Erzsike won't be totally mesmerized.
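To make that concrete, here's a minimal sketch of what such a router could look like. Everything in it is an assumption for illustration: the keyword list, the length threshold and the routing rules are invented, and this is certainly not how GPT-5 decides internally. The model names are just the ones mentioned above, and no API call is made.

```python
from dataclasses import dataclass

# Hypothetical hints: a crude stand-in for a real task classifier.
REASONING_HINTS = ("prove", "step by step", "debug", "derive", "analyze")


@dataclass(frozen=True)
class Route:
    model: str
    reason: str


def pick_model(prompt: str, long_threshold: int = 2000) -> Route:
    """Pick a model name from simple, made-up heuristics on the prompt."""
    text = prompt.lower()
    needs_reasoning = any(hint in text for hint in REASONING_HINTS)

    if needs_reasoning and len(text) > long_threshold:
        return Route("o3", "long prompt that needs multi-step reasoning")
    if needs_reasoning:
        return Route("o4-mini", "short reasoning task, cheaper reasoning model")
    return Route("gpt-4.1", "default for general chat and writing")


if __name__ == "__main__":
    for prompt in ("Write a haiku about autumn",
                   "Prove that sqrt(2) is irrational"):
        route = pick_model(prompt)
        print(f"{route.model}: {route.reason}")
```

In practice you'd swap the keyword check for a small classifier model, but the point stands: the routing layer is thin, and the heavy lifting is done by models that already existed.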

So yeah, GPT-5 is really fucking impressive, because it pulled off what not many can. It repackaged something they already had and the world will gobble it up like there's no tomorrow. But it's nothing revolutionary, it's just a Silicon Valley tech giant doing Silicon Valley tech giant things.

Reasoning about the Bad

Hallucination still exists

One of the things I was excited about is their claim that hallucinations are basically being wiped out. Then I started writing documentation with GPT-5 and when I asked it to change a paragraph, it wiped the entire document from the Canvas.

I haven't seen that happen in ages, mainly because I haven't used GPT-4o. That model used to do this a lot. I tried it a few more times and it mostly failed. I need to deliberately click on "Think longer" to increase performance, but even that seems insufficient now. I'll probably just go ahead and access the actual models via AlfredOS now (oh, by the way, AlfredOS is now open source).

Vibe Coding above all

It also doesn't come as a surprise that after Lovable's historic $200 million fundraise the big guys would move further towards vibe coding. What OpenAI did was not to try to make GPT-5 beat Claude Opus 4.1 at coding, because it can't. Launch Claude Code, use its subAgents or any of the orchestration frameworks (like Claude Flow, Superclaude, BMAD, etc.) and you get infinitely better performance.

No, but GPT-5 can one-shot a front end that's relatively good. That's great because it gives you the wow factor of demos, and who cares about production anyway?

Reasoning about the Ugly

Free-Form Function Calling

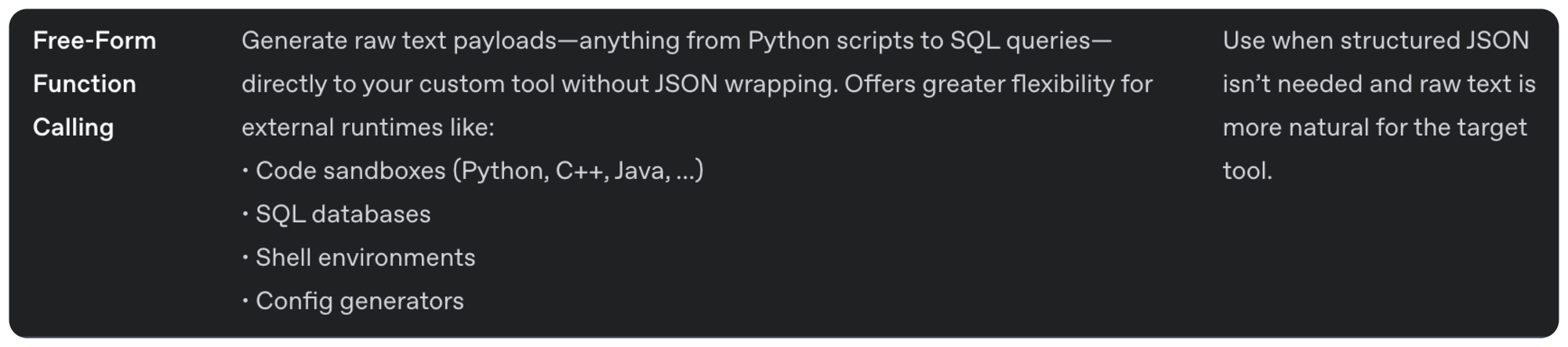

This is an interesting move that relates to my thinking of GPT-5 being OpenAI's Pentalobe moment. Hidden somewhere in the cookbook you'll find what this means.

It also seems to be laying the groundwork for a framework that competes with MCP. Function calling used to mean you had to define which endpoint you wanted to call and what JSON payload it expects, and basically wire each API endpoint to your agent by hand. MCP solved that via a universal interface where you don't need to configure every endpoint for the agent one by one. If you own the entire software, it doesn't add much value. But if you are in discovery and don't necessarily know what you need, MCPs are really useful.
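For anyone who hasn't wired one up, here's a minimal sketch of the classic, structured kind of function calling described above. The tool itself (`get_weather`, its fields and its canned return value) is hypothetical, and the dispatcher is my own illustration rather than any SDK's API. The definition follows the common JSON Schema style of tool description, and nothing here talks to a model or a network.

```python
import json


# Hypothetical tool: name, fields and return value are illustrative only.
def get_weather(city: str, unit: str = "celsius") -> dict:
    return {"city": city, "unit": unit, "temp": 21}


# Every tool needs a hand-written schema like this one.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    },
}

REGISTRY = {"get_weather": (get_weather, WEATHER_TOOL)}


def dispatch(name: str, arguments_json: str) -> dict:
    """Check a model-produced JSON payload against the schema, then call the tool."""
    func, tool = REGISTRY[name]
    args = json.loads(arguments_json)
    schema = tool["function"]["parameters"]
    missing = [key for key in schema["required"] if key not in args]
    unknown = [key for key in args if key not in schema["properties"]]
    if missing or unknown:
        raise ValueError(f"bad arguments: missing={missing}, unknown={unknown}")
    return func(**args)


result = dispatch("get_weather", '{"city": "Budapest"}')
print(result)  # {'city': 'Budapest', 'unit': 'celsius', 'temp': 21}
```

That per-tool schema and registry is exactly the boilerplate that a universal interface, or a free-form text call, would let you skip.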

The OpenAI Agents SDK supports MCP, but now it seems we will have something relatively similar to subAgents, where you can just run free-form raw text tool calls and (although this is only my assumption) a nano model will transform that into a valid payload. If this works, then the whole shenanigan of MCP may become obsolete because two agents can just solve it at runtime. (Or at least that's the bet I think they're making.)

I put it into the ugly bit because if my assumptions are correct then yes, this is indeed a Pentalobe moment for OpenAI, where they say yeah we support MCPs but use our free-form function calling and you won't even need that.

The $1.5m bonus

Zuck is really making life challenging for Altman now by treating PhDs like Premier League players. To be frank, I'd rather see a computer science researcher being treated as a celebrity all-star genius than someone who can kick a leather ball. But I despise football and the fact that it's treated as a religion in most parts of the world, and I know I'm in the minority.

But the obvious move towards commercial goals means abandoning research goals. So how do you prevent a mass exodus when most of the OG team has left and Zuck is poaching the rest?

Give everyone money! Vested over 2 years, so if you leave now you don't get anything. It makes sense. Talent won't leave OpenAI, which will signal further trust in the company and feed the myth of the next mysterious thing that will make all the TikTok and LinkedIn influencers scream:

This changes everything!

The Verdict

GPT-5 is not AGI, but since there's no consensus on the definition, to hundreds of millions of people it will feel like one. AI development doesn't seem to be slowing down, but Artificial General Intelligence seems to be more elusive than we thought.

I don't think it actually matters if we achieve AGI within the next few years or not. Companies still have no idea how to use today's tech (or even last year's tech) so whatever job impact AI will have we're yet to see.

What I think this new GPT-5 announcement shows is something more profound, and to prove my point I've done a very deep simulation which I'll present in my next post next week.