One of my clients runs a construction firm in California and has an ambitious goal:

Create an always-on AI foreman for their work.

I’ll call it Joe. They have a good old SaaS app to manage the business. You know, updating quotes, keeping track of schedules, action items, processing supervisor visit memos, notifying team members. We decided to do something different:

Create a voice agent that connects to all our API endpoints via Model Context Protocol.

Below is a one-minute demo of the voice agent I built in a day, without coding. Read on for the full timeline of what it takes to build something like this.

This post is the living proof of why you should stop learning how to code.

Things I did:

- Prompt Cursor, ChatGPT, Claude, Claude Code, Lovable

- Copy/paste prompts

- Browse for inspiration on 21st.dev

- Copy/paste URLs into Firecrawl

Things I didn’t do:

- Read documentation

- Write code

- Read code

That’s it. I built a whole app in a day. Again. I better get used to this.

Phase 1: Model Context Protocol

[09:30 AM] Okay, I need to create an MCP server for our API. I asked for the API documentation from the dev team and I received an OpenAPI URL. I downloaded the swagger.json with the help of ChatGPT.

[09:33 AM] I went to modelcontextprotocol.io to get the guide on how to create an MCP server. I didn’t read it, but I did get the URL.

[09:34 AM] I put the MCP documentation URL into firecrawl.dev and crawled the documentation contents into a bunch of Markdown files that I could download.

[09:37 AM] I created a new workspace in Cursor. I created a docs/ folder where I pasted the MCP documentation Markdown files and the swagger.json.

[09:38 AM] I created a new file called instructions.txt. It said:

I want to create an MCP server for my API. Here’s everything you need to know about MCP: ‘@docs’, and here’s everything you need to know about my API: ‘@swagger.json’. Plan the process and create a tutorial for me in a tutorial.txt file.

[09:44 AM] I got the tutorial. I didn’t open it. But I did send a new prompt to o3 inside Cursor: “Read ‘@tutorial.txt’ and implement it”
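If you want to peek under the hood anyway: the core job of an MCP server for an existing API is to describe each endpoint as a "tool" with a name, a description and a JSON Schema for its inputs. The block below is not the code o3 generated. It is a minimal sketch under a few assumptions: the spec uses `paths` and `parameters` the way OpenAPI documents normally do, request bodies are ignored for brevity, and the sample endpoints (`/quotes/{quoteId}`, `/memos`) and the `route` wrapper are made up for illustration.

```python
import re

HTTP_METHODS = {"get", "post", "put", "patch", "delete"}

# Hypothetical two-endpoint spec, standing in for the real swagger.json.
SAMPLE_SPEC = {
    "paths": {
        "/quotes/{quoteId}": {
            "get": {
                "operationId": "getQuote",
                "summary": "Fetch one quote",
                "parameters": [
                    {"name": "quoteId", "in": "path", "required": True,
                     "schema": {"type": "string"}}
                ],
            }
        },
        "/memos": {"post": {"summary": "File a supervisor visit memo"}},
    }
}


def openapi_to_tools(spec: dict) -> list:
    """Turn each OpenAPI operation into an MCP-style tool descriptor."""
    tools = []
    for path, item in spec.get("paths", {}).items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            # Prefer operationId; otherwise derive a name from method + path.
            name = op.get("operationId") or re.sub(
                r"\W+", "_", f"{method} {path}"
            ).strip("_")
            properties, required = {}, []
            for param in op.get("parameters", []):
                # OpenAPI 3 nests the type under "schema"; Swagger 2 puts it inline.
                properties[param["name"]] = param.get("schema") or {
                    "type": param.get("type", "string")
                }
                if param.get("required"):
                    required.append(param["name"])
            tools.append({
                # The three fields an MCP client sees when it lists tools.
                "tool": {
                    "name": name,
                    "description": op.get("summary", ""),
                    "inputSchema": {
                        "type": "object",
                        "properties": properties,
                        "required": required,
                    },
                },
                # Not part of MCP: tells the server which endpoint to hit.
                "route": {"method": method.upper(), "path": path},
            })
    return tools


if __name__ == "__main__":
    for entry in openapi_to_tools(SAMPLE_SPEC):
        print(entry["tool"]["name"], "->", entry["route"])
```

A real server would still need to forward each tool call to the matching endpoint and handle authentication, which this sketch leaves out.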

[10:12 AM] First version of the implementation done. Now I need to test it so I asked o3: “How do I test this?”

[10:41 AM] o3 suggested I deploy it to fly.io, a platform I’ve never used before. It wrote the changes in the code to make it happen and gave me a bunch of terminal commands to follow. I installed Fly, deployed my project and less than 90 minutes into the project my MCP server was live.
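For anyone wondering what "testing" a live MCP server boils down to: MCP messages are JSON-RPC 2.0, and a common first check is asking the server to list its tools. Here is a rough sketch of that check, not the commands o3 gave me. It only builds the request and reads a response; how the message travels to the server (the transport, plus the `initialize` handshake a real session starts with) is left out, and the helper names are hypothetical.

```python
import itertools

# Each JSON-RPC request needs its own id so replies can be matched up.
_ids = itertools.count(1)


def rpc_request(method: str, params: dict = None) -> dict:
    """Build a JSON-RPC 2.0 request, the envelope MCP messages use."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def tool_names(response: dict) -> list:
    """Pull tool names out of a tools/list response, raising on RPC errors."""
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown error"))
    return [tool["name"] for tool in response["result"]["tools"]]


if __name__ == "__main__":
    request = rpc_request("tools/list")
    print("would send:", request)

    # Hand-written reply in the shape a server returns for tools/list.
    fake_reply = {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"tools": [{"name": "getQuote"}, {"name": "post_memos"}]},
    }
    print("server exposes:", tool_names(fake_reply))
```

If the list that comes back matches the endpoints in your API documentation, the server is at least wired up correctly.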

[10:57 AM] Alright, let’s get a coffee and figure out what we do next.

Phase 2: Voice Agent UI

[11:13 AM] I also need a nice UI. Since I can talk faster than I can type, I opened ChatGPT and hit the microphone icon, which would record my voice message, then transcribe it immediately. I started rambling about the project and what I want the user to experience. The main logic was this:

The user wants to feel like they’re talking to their foreman. The less talking to Joe differs from talking to a human, the more they’ll like it. So it should feel like you’re calling, leaving a voicemail for or texting Joe.

Then I followed up with a simple prompt:

I’m going to send this to an AI software developer app to build my design into reality. Write the prompt I should send the app.

[11:35 AM] I opened Lovable and I pasted my new, crispy prompt and hit enter.

[11:46 AM] Yeah I don’t like this. Let’s find some inspiration first. I opened 21st.dev to find components I liked. That’s when I found background gradients and a gooey text morphing animation. The good thing is that I can just click on “Copy prompt” and I get the exact thing I need to paste into Lovable.

[11:53 AM] I opened a new project in Lovable. Same original prompt but now I also added the two prompts from 21st.dev there.

[12:04 PM] Okay, after a few tweaks I can say that I’m less than 3 hours into this project and I already have an MCP server and a proper UI for Joe. I connected my Lovable project to GitHub to get the code, then asked o3 to clone the code from GitHub into my workspace. It wrote the terminal command and done. Now let’s build the app.

Phase 3: The Voice Agent

[12:37 PM] I’m pretty sure there is at least a template or demo version of what I want. Let’s take a look. I opened ChatGPT and started a search.

[01:12 PM] Ok, I found it. OpenAI does have a demo repository. I’ll just need to somehow retrofit it to my needs. I opened Cursor and asked o3 to clone this repository into my workspace. It wrote the terminal command, ran it and voila.

[01:20 PM] Alright, let’s tally. I have the following:

- instructions.txt

- tutorial.txt

- docs/ with the full MCP documentation

- live MCP server on fly.io

- a new realtime agent demo app

- a voice agent demo UI

Hm, I might need some extra documentation from the OpenAI API too. So I opened firecrawl.dev again, crawled the OpenAI API reference into a Markdown file and saved it inside my Cursor workspace as openai.md.

[01:33 PM] Cool, now let’s start turning this into something real. I opened o3 and got to work.

Take the ‘@realtime_demo_app’ and give it a completely new UI. I seeded the new UI code for you in the ‘@voice_agent’ folder. Create this new version of the app in a joe_final folder.

Now here’s where things got a bit clunky. o3 in Cursor got stuck a few times. The UI I built was a Vite app and the OpenAI demo app was a Next.js TypeScript app. Well, I’ve never built either of those myself and frankly I probably couldn’t explain the difference between the two even if I wanted to.

I spent the next hour or so going down a rabbit hole of endless debugging. I knew that building something from scratch is easier than refactoring two existing things. o3 failed at it spectacularly.

[02:42 PM] I ended up creating an overly simplified UI for the demo app that’s similar to my final UI but ugly. That was doable. I started testing every feature and tweaking it for an hour.

[03:57 PM] Okay now I had a super simple, working app that did what I wanted but was ugly. Next step: let’s plug the old and the new UI together.

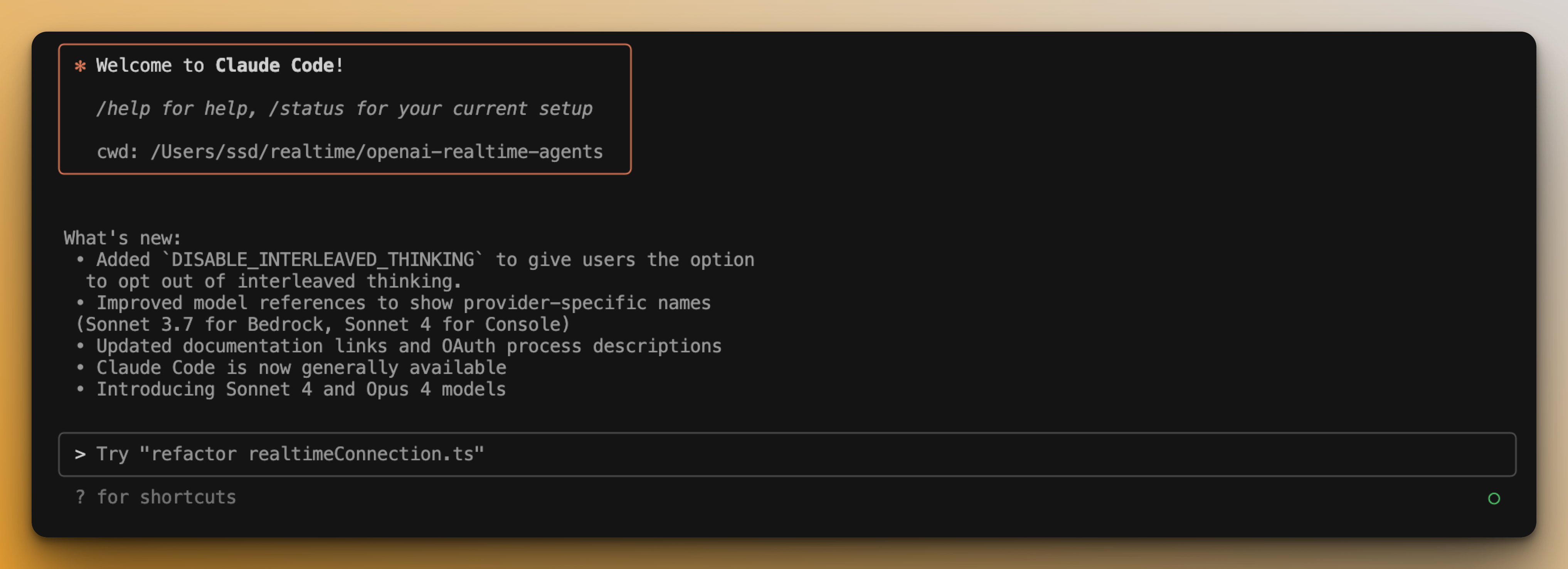

[04:11 PM] o3 seemed to drop the ball on this so I decided to find a new friend: Claude Code. It’s really easy to install: you just paste one line into the terminal and it takes care of everything for you.

[04:53 PM] DONE! The demo is ready. I have an app that I can call, leave a voicemail for and chat with via text. All I need to do is plug my MCP server into it (or any other MCP server) and I have a custom voice agent that can do whatever I want, like a VA.
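"Plugging in" an MCP server mostly means translating in two directions: when the voice model decides to call a function, that call is forwarded to the server as a `tools/call` request, and the server's answer is flattened into text the agent can say out loud. The sketch below shows only that translation step. It assumes the model hands over a function name plus its arguments as a JSON string; the function names, the quote id and the apology wording are all hypothetical, and this is not the code in my app.

```python
import json


def to_mcp_call(function_name: str, arguments_json: str, request_id: int) -> dict:
    """Wrap a model's function call as an MCP tools/call request."""
    arguments = json.loads(arguments_json) if arguments_json else {}
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": function_name, "arguments": arguments},
    }


def to_spoken_text(mcp_response: dict) -> str:
    """Flatten a tools/call response into text the agent can read back."""
    if "error" in mcp_response:
        # Protocol-level failure, e.g. an unknown tool name.
        message = mcp_response["error"].get("message", "unknown error")
        return "Sorry, that request failed: " + message
    result = mcp_response["result"]
    # Tool results arrive as a list of content parts; keep only the text ones.
    text = " ".join(
        part["text"]
        for part in result.get("content", [])
        if part.get("type") == "text"
    )
    if result.get("isError"):
        # The tool itself ran but reported a problem.
        return "Sorry, the tool reported a problem: " + text
    return text


if __name__ == "__main__":
    call = to_mcp_call("getQuote", '{"quoteId": "Q-17"}', request_id=1)
    print("forward to MCP server:", json.dumps(call))

    # Hand-written reply in the shape a server returns for tools/call.
    reply = {
        "jsonrpc": "2.0",
        "id": 1,
        "result": {"content": [{"type": "text", "text": "Quote Q-17 is approved."}]},
    }
    print("Joe says:", to_spoken_text(reply))
```

Because the bridge only depends on tool names and JSON arguments, swapping in a different MCP server should not require touching the voice side.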

Now I’m off to surgery and then I’ll wire the app together with the MCP server and debug the remaining features (the gooey morph animation has disappeared for example). Should take me about 3 hours lol.

Why this matters to you

You are a builder. All of us are builders. I know it, you know it.

Give a kid a handful of LEGOs and they’ll have fun for hours.

But you learned to accept the gatekeeping. Technology became too complicated for us regular folk to embrace it.

Not anymore. You already have the tools. I walked through all of them today.

What you need is a process. To know what to grab and when.

You will still run into rabbit holes just like I did, but they will be less frequent and less frustrating. There’s a builder in you that wants to be free, to build, to create new things.

You can liberate that inner builder by becoming an AI-First Operator.

Join my AI-First Operator Bootcamp this summer and I’ll turn you into a builder in 12 weeks who can create any app or any automation in an afternoon.

Here’s what you’ll get:

- Every week you’ll get a 30-minute lesson from me that gets you from non-technical to “knows enough to be dangerous” in ONE area.

- Every week we’ll have a live workshop where I’ll build an n8n workflow or vibe code an app live, explaining to you what’s happening so you can follow along and practice.

- You’ll get access to AlfredOS, my new platform that replaces a $2k+ SaaS bill with a $50/mo server cost.

- You’ll get three 1:1 calls with me to help you get on the right track (VIP Pass only)

But what if this is too advanced for me?

It’s not. All those Python Udemy courses you started and abandoned were too advanced because they wanted to turn you into an engineer. This bootcamp is designed for stay-at-home moms, marketing consultants, 75-year-old retirees, hairdressers, accountants, florists. If you still feel like this is too much, ping me within 2 weeks of the kickoff and I’ll give you your money back.

But what if I don’t have the time?

Yeah, I know we’re all busy. This is why I designed the program to be digestible in 15 minutes a day. If you miss a lesson, no problem: all sessions are recorded, and you’ll keep access to every video and every material as long as my site is up. If you’re confused or still need help, just ping me and I’ll help you get back on track personally.

But what if it’s not what I need?

You will become an AI-First Operator sooner or later. Not because it’s amazing but because it’s inevitable. So the question is not whether you need to become one, the question is why not now?