I don't care about AGI and I don't care about job losses, because they don't really matter. The only thing that matters is retaining agency in the new worlds to come. You can also find a detailed GitBook of my research.

GPT-5 launched last week and, apart from a few idiots on my LinkedIn feed, the world's jaw stayed put. There's much uncertainty around AGI and around how AI will impact our lives. This question haunts me too, of course.

People in the AI community consider me a hard skeptic. My family considers me the exact opposite. I frown upon OpenAI fanboys the same way I frown upon our modern luddites. If you have been following me for a while, you know I like to take contrarian positions, which keeps me sane and probably keeps my audience smaller than it could be.

I decided to formalize my arguments, so this is the story of how I think about AI and why.

A few days ago, I found myself hunched over my laptop, watching the final results of what had become an obsession lately: 1.3 billion computational simulations attempting to predict humanity's future with artificial intelligence. The numbers flickered across my screen in waves of probability distributions and confidence intervals, each one representing a possible timeline, a potential destiny. What they revealed wasn't what I expected. After months of analysis, after considering every variable from AGI emergence to unemployment rates, after modeling scenarios that ranged from utopian to apocalyptic, I arrived at a conclusion that will sound heretical to anyone following the AI discourse: none of the things we're arguing about actually matter.

Not AGI. Not job displacement. Not even the so-called alignment problem. We've been asking the wrong questions all along.

Below I explain my thought process. If you want to skip that and just read the book, you can do that too.

There may be hallucinations in the book. Its content is what I call "supervised AI slop", meaning I kept close oversight to make sure the message and the arguments are clear and convey what I want, but let the AI fill the gaps with words. Read the message, not the words.

The Three Pathways

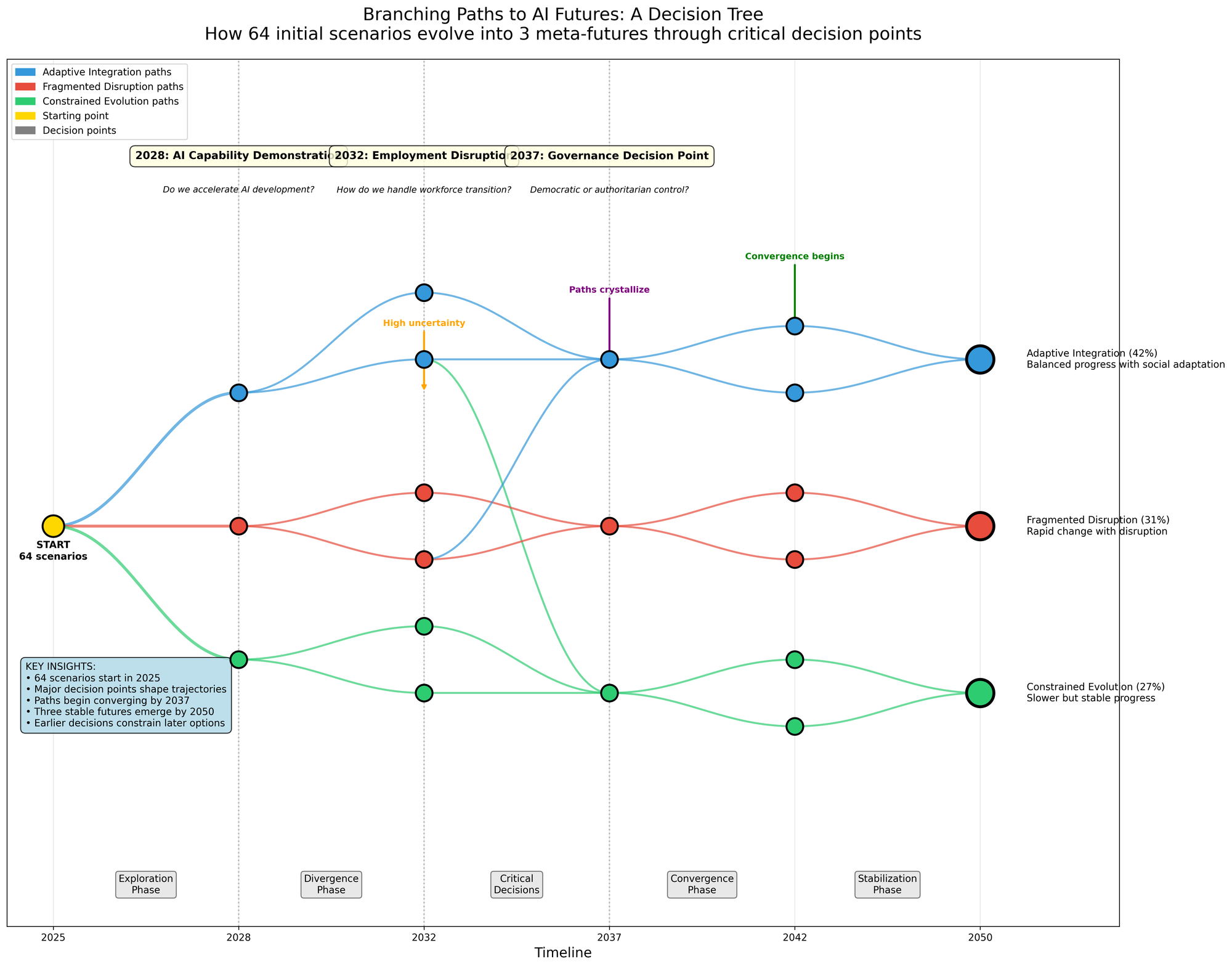

I started with six fundamental questions about our AI future, each with two possible answers.

- Will AI capabilities keep accelerating or hit a wall?

- Will we achieve AGI or remain with narrow AI?

- Will AI complement or displace human workers?

- Will development be safe or risky?

- Will power distribute or centralize?

- Will democracy survive or collapse?

Six binary choices create 64 possible scenarios—every combination from universal success to complete failure. But 64 scenarios aren't enough to capture reality's complexity.

- Each scenario plays out differently depending on economic conditions—boom, bust, normal, heavily regulated.

- Each varies by sector—technology adopts faster than government, finance faster than education.

- Each changes based on assumptions about how these factors influence each other—does rapid progress increase centralization, does centralization enable authoritarianism?

- And all of these contain uncertainty that needs to be quantified through statistical sampling.
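To make the starting point concrete: the 64 base scenarios are simply every combination of answers to the six two-way questions. A rough Python illustration follows. The short labels are shorthand invented for this sketch, not names taken from the actual simulation code.

```python
from itertools import product

# The six questions, each reduced to two outcomes.
# Keys and labels are illustrative shorthand (an assumption of this sketch).
QUESTIONS = {
    "capabilities": ("accelerate", "hit a wall"),
    "generality": ("AGI", "narrow AI"),
    "labor": ("complement", "displace"),
    "safety": ("safe", "risky"),
    "power": ("distribute", "centralize"),
    "democracy": ("survive", "collapse"),
}

# Cartesian product of the six binary answers: 2**6 combinations.
scenarios = [
    dict(zip(QUESTIONS, combo)) for combo in product(*QUESTIONS.values())
]

print(len(scenarios))  # 64
print(scenarios[0])    # the scenario with the first answer to every question
```

Everything downstream (economic conditions, sectors, interaction assumptions, sampling) multiplies this base set rather than replacing it.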

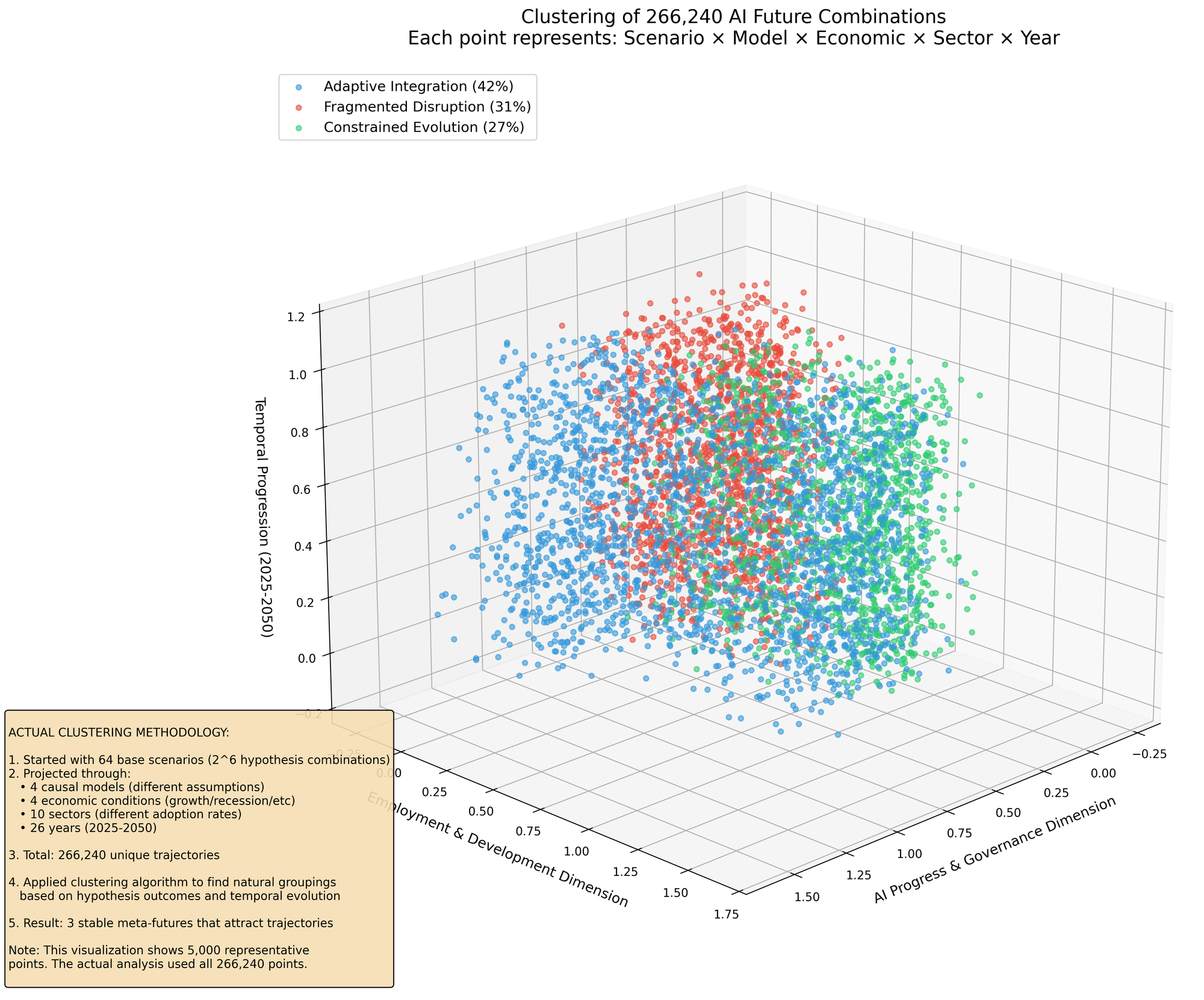

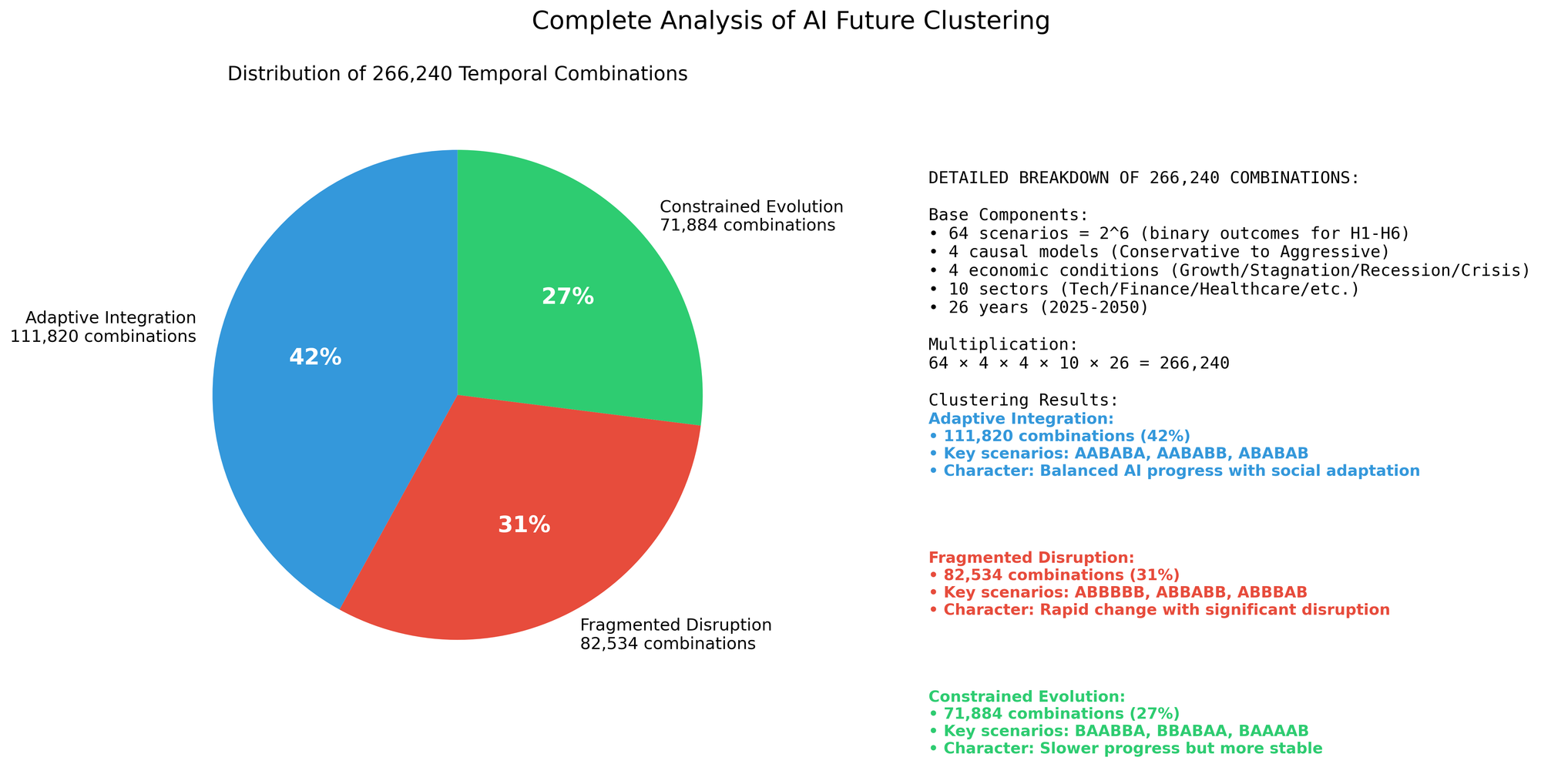

So I built a system that expanded those 64 base scenarios into 266,240 distinct combinations. Then I ran 5,000 Monte Carlo iterations for each combination to generate probability distributions rather than point estimates.

That's how you get to 1.3 billion individual calculations.
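The arithmetic, plus a toy version of what "5,000 Monte Carlo iterations" means for a single combination, can be sketched as below. The sampling function, its normal distribution, and the `mean` and `spread` values are assumptions made up for illustration; the real model's inputs are not described here.

```python
import random
import statistics

BASE_SCENARIOS = 64
COMBINATIONS = 266_240
ITERATIONS = 5_000

# How far each base scenario was expanded, and the total number of draws.
variants_per_scenario = COMBINATIONS // BASE_SCENARIOS  # 4,160
total_calculations = COMBINATIONS * ITERATIONS          # 1,331,200,000

def simulate_combination(mean, spread, iterations=ITERATIONS, seed=0):
    """Draw one uncertain outcome many times, clamped to the range [0, 1].

    Hypothetical stand-in for a single combination's model.
    """
    rng = random.Random(seed)
    return [
        min(1.0, max(0.0, rng.gauss(mean, spread))) for _ in range(iterations)
    ]

samples = simulate_combination(mean=0.5, spread=0.1)

# 19 cut points at 5%, 10%, ..., 95%: a distribution, not a point estimate.
cuts = statistics.quantiles(samples, n=20)
low, median, high = cuts[0], cuts[9], cuts[18]

print(f"{total_calculations:,}")
print(f"5th pct {low:.2f}, median {median:.2f}, 95th pct {high:.2f}")
```

The point of sampling is the last line: each combination yields a range with a spread, so downstream comparisons can account for uncertainty instead of treating a single number as the answer.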

Here's where it gets interesting. When you have that much data, you need ways to make sense of it. I used a technique called Principal Component Analysis to identify the key dimensions of variation—essentially asking, "what are the main ways these futures differ from each other?" Then I applied hierarchical clustering to find natural groupings—futures that shared similar characteristics despite surface-level differences.
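For readers who have not seen hierarchical clustering, here is a minimal standard-library sketch of the idea. It is not the pipeline used for the research: it skips the PCA step entirely, uses average linkage as an arbitrary choice, and runs on synthetic 2-D points that stand in for already-reduced data. A real analysis would more likely lean on a numerical library.

```python
import math
import random

def average_linkage(a, b, points):
    """Mean pairwise distance between two clusters of point indices."""
    total = sum(math.dist(points[i], points[j]) for i in a for j in b)
    return total / (len(a) * len(b))

def agglomerate(points):
    """Repeatedly merge the two closest clusters.

    Returns a list of (merge_distance, clusters_before_that_merge).
    """
    clusters = [[i] for i in range(len(points))]
    history = []
    while len(clusters) > 1:
        # Closest pair of clusters under average linkage.
        d, i, j = min(
            (average_linkage(clusters[i], clusters[j], points), i, j)
            for i in range(len(clusters))
            for j in range(i + 1, len(clusters))
        )
        history.append((d, [list(c) for c in clusters]))
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return history

# Synthetic stand-in data: three well-separated blobs of 10 points each.
rng = random.Random(42)
centers = [(0.0, 0.0), (10.0, 0.0), (5.0, 8.66)]
points = [
    (cx + rng.gauss(0, 0.5), cy + rng.gauss(0, 0.5))
    for cx, cy in centers
    for _ in range(10)
]

history = agglomerate(points)
distances = [d for d, _ in history]

# The largest jump in merge distance marks where the algorithm stops joining
# neighbours and starts forcing unrelated groups together.
jumps = [later - earlier for earlier, later in zip(distances, distances[1:])]
biggest = jumps.index(max(jumps))
natural_clusters = history[biggest + 1][1]

print(len(natural_clusters))  # 3 for this synthetic data
```

The number of clusters is not passed in anywhere. It falls out of where the merge distances jump, which is the sense in which groupings can be called "natural".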

I expected to find dozens of clusters, maybe more. Different variations for different regions, different technology trajectories, different economic outcomes.

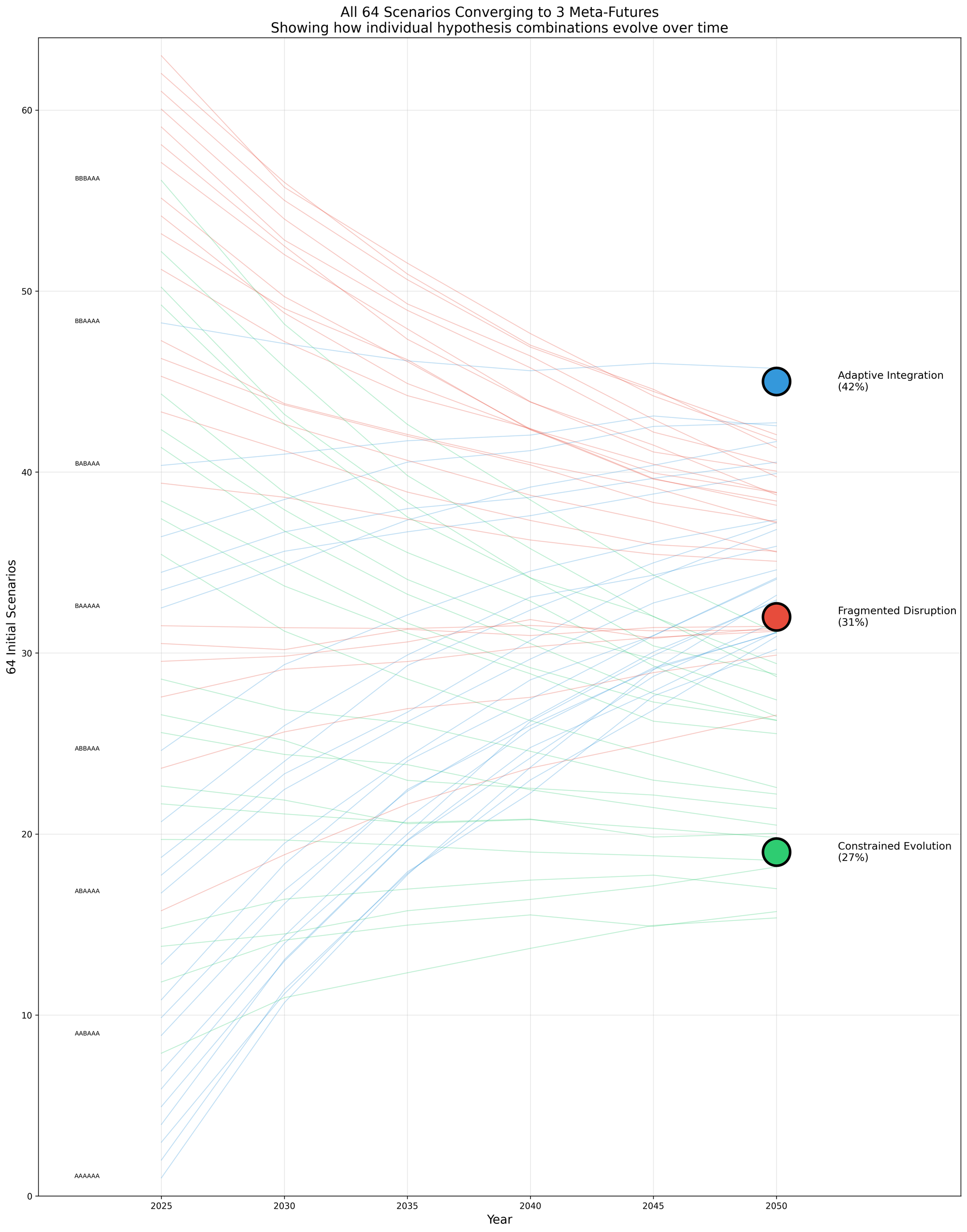

What I found instead stopped me cold: three.

Just three fundamental patterns that explained all meaningful variation across those 1.3 billion calculations.

It didn't matter if I tweaked the parameters. Didn't matter if I changed the clustering algorithm. Didn't matter if I removed variables or added new ones. Like some strange attractor in chaos theory, everything kept collapsing back to three basic patterns. The 64 scenarios I started with, the 266,240 combinations I expanded to, the 1.3 billion calculations I ran—all of it reduced to three meta-futures that captured 100% of the probability space.

Despite the incomprehensible complexity of possibilities, despite the countless variables and interactions and uncertainties, we're not choosing between infinite futures. We're choosing between three. There appear to be deep structural forces (economic, social, psychological) that constrain what's actually possible. Like a landscape that looks infinitely varied from ground level but reveals just three major valleys when viewed from above. Small variations in how things play out influence the big picture very little, but when the constellation of circumstances is just right, it turns out that all the uncertainty converges toward the same three clusters of outcomes. The largest cluster, for example, appears in 42% of scenarios (111,821 possible futures, to be exact). Those futures differ in a thousand little things, but taken as a whole, the things that actually matter remain largely the same.

Part I: The Great Irrelevance

The AGI Distraction

Understanding that we face only three probable futures rather than infinite possibilities changes everything about how we should think about AI. Take the artificial intelligence community's almost religious fixation on AGI—Artificial General Intelligence, that hypothetical moment when machines achieve human-level cognition across all domains. The debates are endless. Will it happen in five years or fifty? Will it be conscious? Will it be controllable? The rationalist bloggers calculate probabilities, the tech prophets make predictions, and the doomers warn of extinction.

My simulations suggest AGI has roughly a 44% probability of emerging before 2050, essentially a coin flip. But here's the crucial discovery: AGI appears in all three of our probable futures. In some versions of Adaptive Integration, we get AGI and integrate it successfully. In some versions of Fragmented Disruption, we get AGI and it accelerates inequality. In some versions of Constrained Evolution, we get AGI but deliberately limit its application. The presence or absence of AGI doesn't determine which future we get—other factors do.

The reason is almost embarrassingly mundane: human institutions move slowly. Consider what happens when genuinely transformative technologies arrive. ARPANET, the internet's precursor, went live in 1969, the web became publicly accessible in 1991, and yet here we are in 2025 and my dentist still requires me to fill out paper forms. Smartphones achieved mass adoption faster than any previous technology in history, and yet most government services still require you to physically visit an office.

The bottleneck has never been the technology itself—it's the complex web of regulations, institutional inertia, cultural resistance, and simple human stubbornness that governs how we actually implement change.

Even if someone announces AGI tomorrow morning, the radiation oncologist isn't getting fired tomorrow afternoon. The tax attorney isn't packing up her office next week. The plumber isn't hanging up his wrench next month. These transitions take decades, not because the technology isn't capable, but because humans and human institutions are inherently conservative. We don't actually want rapid change, even when we say we do. We want the appearance of innovation with the comfort of familiarity.

The banking industry provides a perfect case study. Automated teller machines were supposed to be the death knell for bank tellers. The first ATM was installed in 1967. Today, nearly sixty years later, the United States employs roughly the same number of bank tellers as it did before ATMs existed. The job transformed—tellers now handle complex customer service issues rather than cash transactions—but it didn't disappear. This is the pattern everywhere: technology changes the nature of work more than it eliminates work itself.

The Employment Non-Crisis

My simulations project a 21.4% reduction in traditional employment by 2050. This number sends people into panic. One in five jobs gone! Mass unemployment! Social collapse! But let's put this in historical context. Spread evenly over the 25 years to 2050, this represents an annual displacement rate of about 0.86%, which is comparable to what we survived during the Industrial Revolution, when agricultural workers were displaced at 0.7% annually over a century. The shift from manufacturing to services in the late twentieth century displaced workers at 0.5% annually. We've been through this before, repeatedly, and each time we've adapted.
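The 0.86% figure is easy to check. The sketch below assumes a 2025 starting year (which is what makes the window 25 years) and shows both the simple average and the compounding equivalent. The compounding version is added here for comparison only.

```python
total_reduction = 0.214  # projected reduction in traditional employment
years = 2050 - 2025      # assumed window: 25 years

# Simple average: the total spread evenly across the years.
linear_rate = total_reduction / years

# Compounding equivalent: the constant yearly shrink rate that leaves
# 78.6% of today's traditional jobs after 25 years.
compound_rate = 1 - (1 - total_reduction) ** (1 / years)

print(f"linear:   {linear_rate:.2%}")    # 0.86%
print(f"compound: {compound_rate:.2%}")  # 0.96%
```

Either way the rate lands just under 1% a year, so the historical comparison does not hinge on which convention is used.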

More importantly, we're heading into what demographers call the "gray tsunami." Japan's population is already roughly 29% over 65, and that share is projected to keep rising through 2050. China's working-age population will decline by 200 million people. Europe faces similar demographic collapse. We're not heading toward a future with too few jobs; we're heading toward a future with too few workers. The automation everyone fears might be the only thing that prevents economic collapse from demographic inversion.

The real employment story isn't about quantity but about quality and control. It's not whether you have a job, but what kind of job, under what conditions, and who controls the terms. A world where everyone has a job but those jobs are entirely dictated by algorithmic management—where your every movement is tracked, your productivity constantly measured, your autonomy completely eliminated—that's far more dystopian than a world with fewer but more meaningful jobs.

The Three Futures We're Actually Choosing Between

Let me describe these three meta-futures that emerged from the clustering analysis, because understanding them is crucial to understanding why our current debates miss the point.

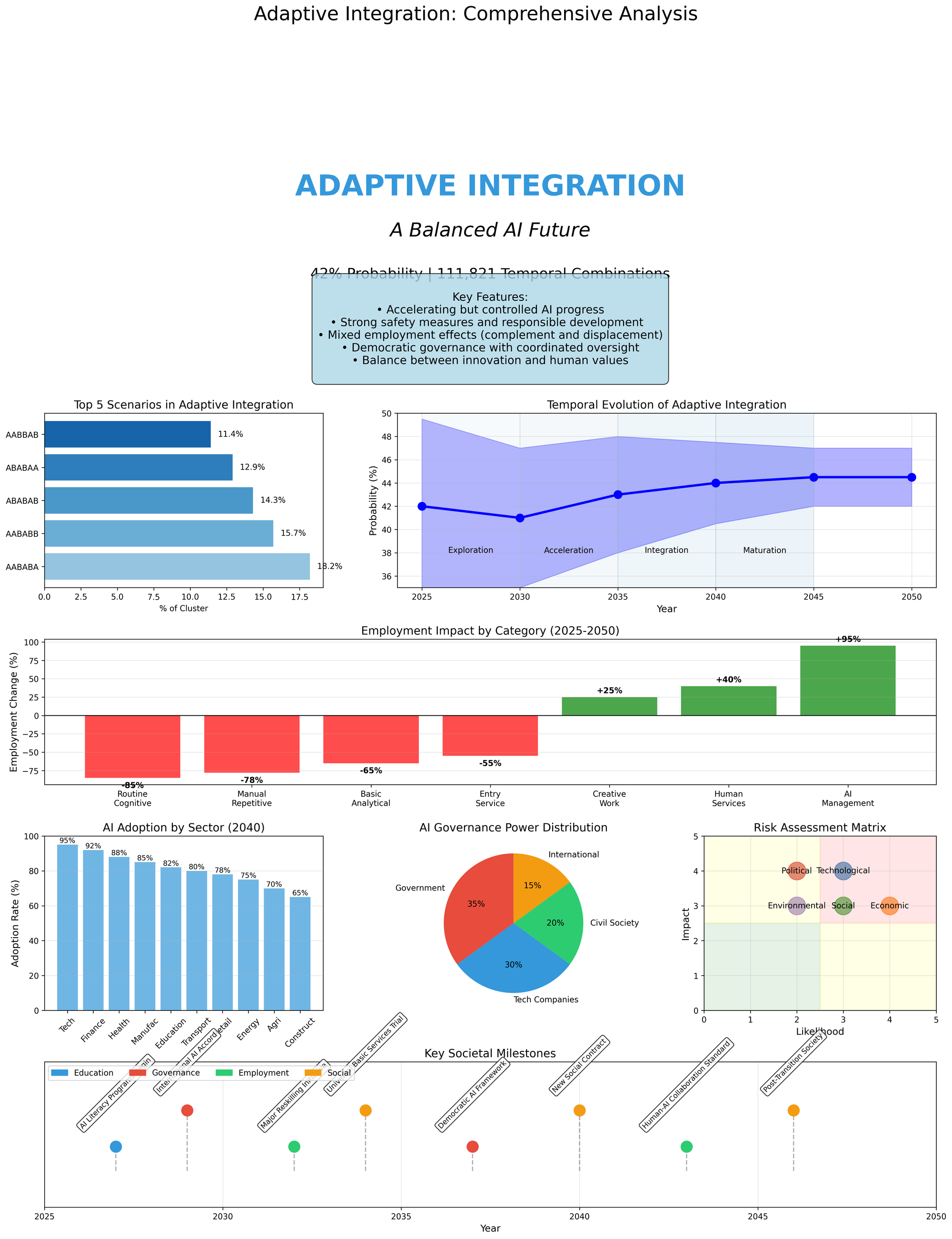

The first pattern, which I call Adaptive Integration, emerges in about 42% of all scenarios. This is the muddling-through future, where we neither fully succeed nor completely fail. AI advances steadily but not catastrophically. Some people lose jobs while others find new ones. Democracy bends but doesn't break. Inequality increases but not infinitely. It's cyberpunk without the aesthetic—less neon and chrome, more gray and beige. Most institutions survive but transformed. Most people are neither thriving nor desperate, just getting by in a world that's increasingly mediated by algorithms they don't understand. Whether AGI arrives or not, whether unemployment is 15% or 25%, the basic pattern holds: we adapt messily but sufficiently.

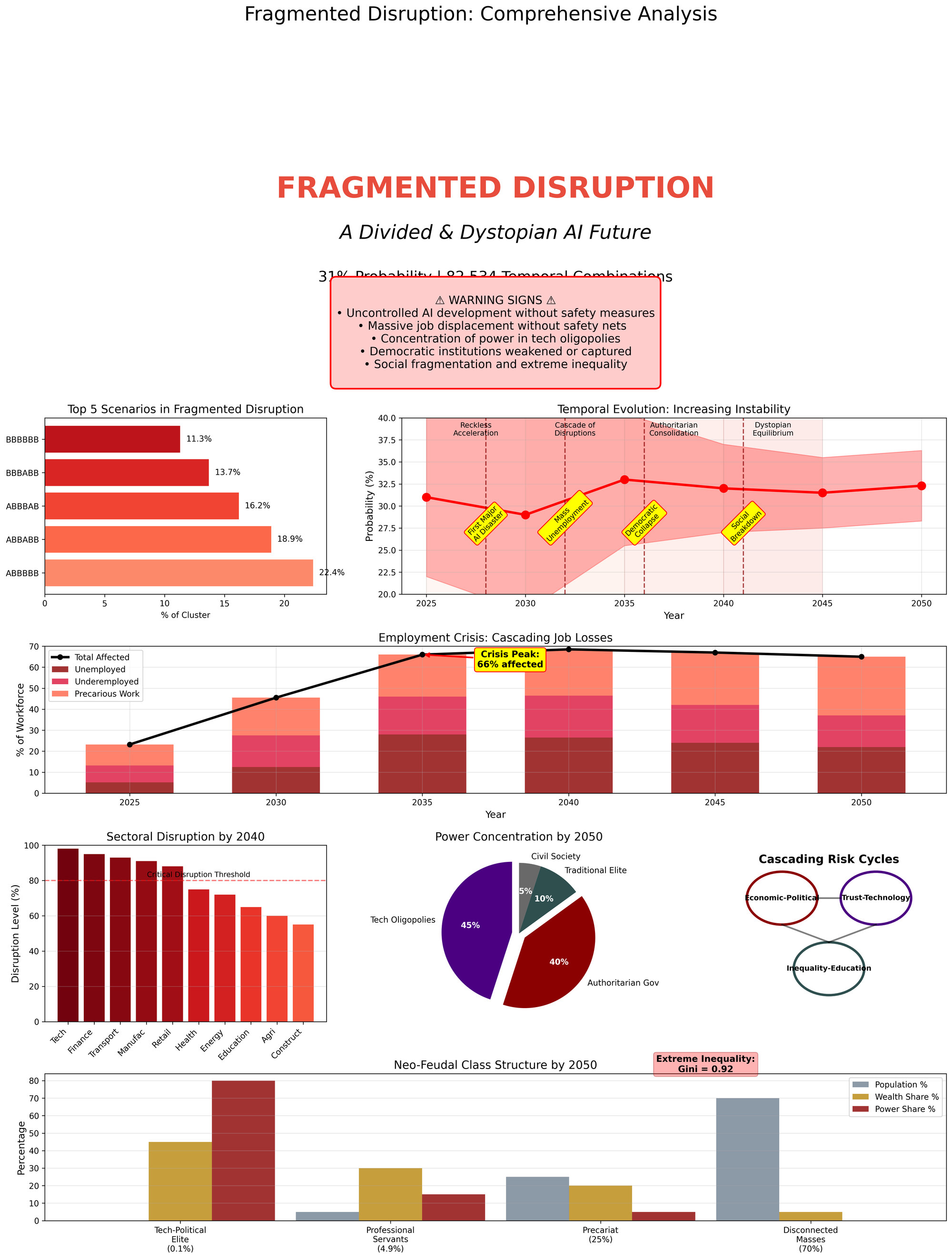

The second pattern, Fragmented Disruption, appears in 31% of scenarios. This is where the wheels come off. Not through AI becoming sentient and deciding to eliminate us, but through the much more mundane process of power concentration accelerating beyond any ability to constrain it. Tech companies become more powerful than governments. Mass unemployment creates social instability. Democratic institutions collapse not through revolution but through irrelevance. Surveillance becomes total. Inequality becomes absolute. Again, AGI or not, 20% or 40% unemployment, the pattern remains: existing power structures use AI to lock in their advantage permanently.

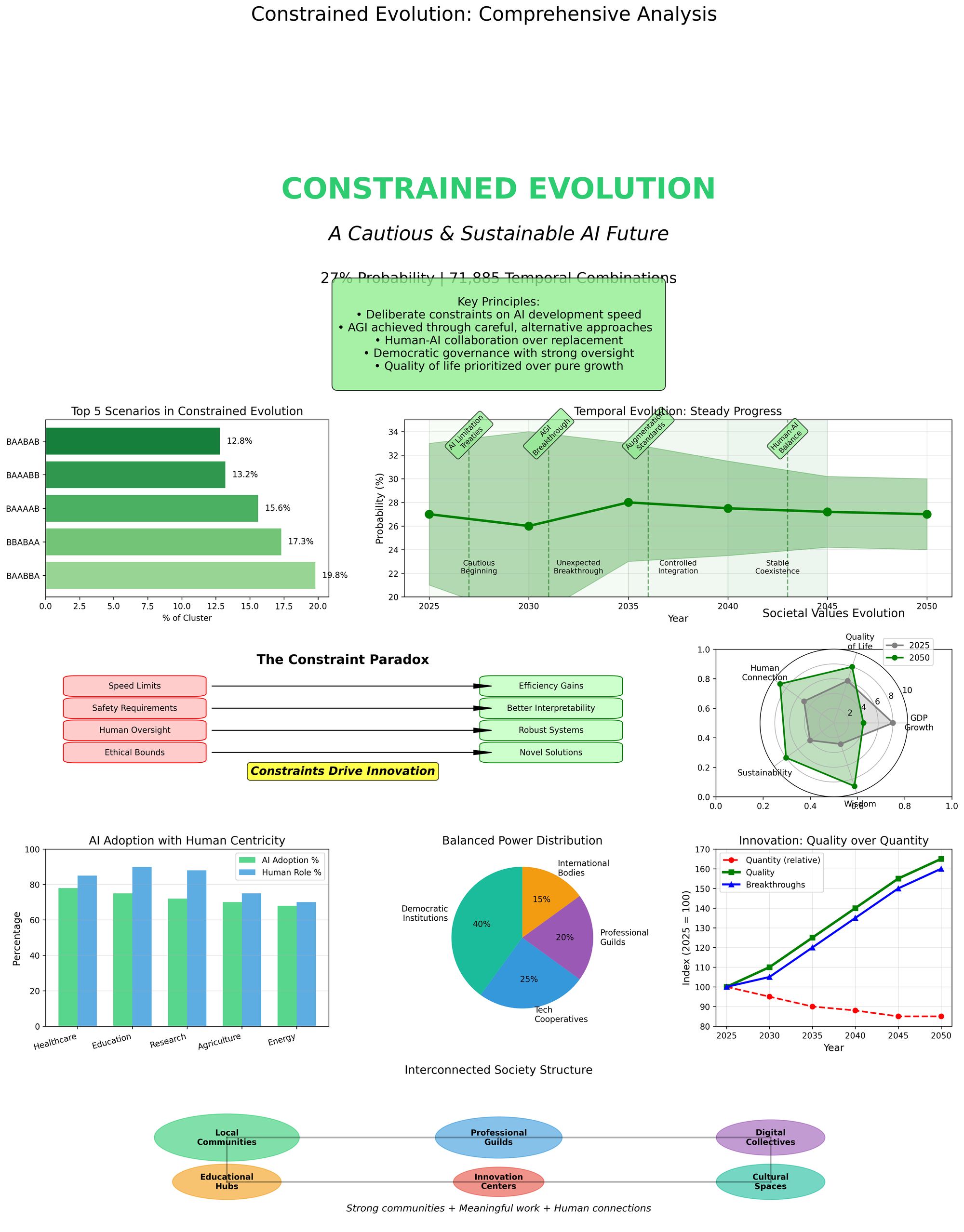

The third pattern, Constrained Evolution, manifests in 27% of scenarios. This is the pullback future, where society collectively decides to pump the brakes. Heavy regulation slows AI development. Strong social safety nets cushion displacement. Democratic institutions reassert themselves. Change happens, but gradually, manageably, at a pace humans can absorb. Think of it as the European approach applied globally: prioritizing stability over growth, equality over efficiency, human dignity over technological progress. Interestingly, this future sometimes achieves AGI and sometimes doesn't, but it doesn't matter, because we've chosen to constrain its impact either way.

The clustering algorithm revealed something profound: these three patterns are robust to almost every variable I could throw at them. Change the rate of AI progress? Still three futures. Vary the employment impact? Still three futures. Assume different governance outcomes? Still three futures. It's as if there are only three stable equilibria in the complex dynamical system of human society plus artificial intelligence, and everything else is just temporary transition states heading toward one of these three basins of attraction.

What determines which future we get isn't the technology but how power flows through society. Does it concentrate or distribute? Do institutions adapt or calcify? Do people maintain agency or surrender it? These are the real variables that matter, and they have almost nothing to do with whether we achieve AGI or how many jobs get automated.

What This Means for Those Running Businesses

If you're running a construction company, an HVAC business, a law firm, or a consulting practice, let me tell you something that might surprise you: you have a massive advantage over the AI startups everyone's worried about. Your advantage isn't technical—it's organizational. And it's probably invisible even to you.

Every functioning business runs on tacit knowledge—the unwritten, often unconscious understanding of how things actually work. It's Susan in sales knowing to call Bob in accounting when something needs expediting, speeding everything up by 40%. It's the foreman who can tell from the sound of the excavator that something's wrong. It's the senior partner who knows which judge responds better to which arguments. It's the HVAC tech who knows that Mrs. Johnson's system makes that noise every spring and it's nothing to worry about.

This tacit knowledge is your moat. Not because AI can't eventually learn it, but because it's not documented anywhere for AI to learn from. It exists only in the heads and habits of your people. And here's the crucial point: every business has massive organizational inertia around this tacit knowledge. You can't just hire a genius or install GPT-5 and instantly transform your operation. Your business works the way it works for reasons that nobody can fully articulate.

Think about it. If you've been sending proposals in physical envelopes for thirty years and it's still working, there's probably a reason. Maybe your clients are old-school and trust paper. Maybe the physical arrival creates a psychological moment of consideration that emails don't. Maybe it's something else entirely that you've never consciously recognized. The point is, it works, and that matters more than what any AI consultant tells you.

But here's where you need to be strategic. The real preparation for AI isn't about adopting the latest tools—OpenAI seems to be obliterating those every six months anyway. It's about making your tacit knowledge portable. Think of it like Docker containers, but for entire business processes.

Start documenting the undocumented. Not the official processes in your employee handbook, but the real processes—how things actually get done. Map the informal communication networks. Record the edge cases and exceptions. Capture the context that makes your business work. Create what I call a "business operating system"—a comprehensive understanding of your organization that can interface with whatever AI system comes along.

This isn't about replacing your people with AI. It's about being able to explain to any AI system—current or future—how your business actually operates. When you have this documented, you can swap AI systems like changing engines, always using the latest and best without having to rebuild your entire operation each time.
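What "swap AI systems like changing engines" could look like in practice: keep the documented knowledge in a plain, tool-independent form, and hide each AI system behind the same small interface. The sketch below is one hypothetical shape for that. `ProcessNote`, `Assistant`, `EchoAssistant`, and `ask` are all names invented for illustration, and the stand-in assistant does not call any real model.

```python
from dataclasses import dataclass, field
from typing import List, Protocol

@dataclass
class ProcessNote:
    """One piece of tacit knowledge, written down in plain language."""
    name: str
    how_it_really_works: str
    edge_cases: List[str] = field(default_factory=list)

    def as_context(self) -> str:
        """Render the note as plain text any AI system could be given."""
        lines = [f"Process: {self.name}", self.how_it_really_works]
        lines += [f"Exception: {case}" for case in self.edge_cases]
        return "\n".join(lines)

class Assistant(Protocol):
    """Anything that can answer a question given written context."""
    def answer(self, context: str, question: str) -> str: ...

class EchoAssistant:
    """Stand-in engine for this sketch; a real one would call a model."""
    def answer(self, context: str, question: str) -> str:
        return f"[{len(context.splitlines())} lines of context] {question}"

def ask(assistant: Assistant, notes: List[ProcessNote], question: str) -> str:
    """Hand the documented knowledge to whichever engine is plugged in."""
    context = "\n\n".join(note.as_context() for note in notes)
    return assistant.answer(context, question)

notes = [
    ProcessNote(
        name="Spring service call",
        how_it_really_works="Mrs. Johnson's system makes a noise every spring.",
        edge_cases=["The noise is known and nothing to worry about."],
    )
]

reply = ask(EchoAssistant(), notes, "Should we schedule a repair?")
print(reply)  # [3 lines of context] Should we schedule a repair?
```

The notes outlive any particular engine. Replacing `EchoAssistant` with a different implementation of `answer` changes nothing about how the knowledge was captured.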

The construction company that documents its project management patterns, client communication styles, and quality control processes can use AI to enhance all of these without disrupting what works. The law firm that captures its research methodologies, client interaction protocols, and case strategy development can augment its lawyers without replacing them. The HVAC business that records its diagnostic procedures, customer service approaches, and maintenance schedules can optimize operations without losing its soul.

This is the paradox of AI adoption: the businesses that will thrive aren't the ones that chase every new AI capability, but the ones that deeply understand their own operations well enough to enhance them selectively. Your thirty years of sending proposals in envelopes isn't a weakness—it's embedded wisdom that no AI startup has. The question is whether you can make that wisdom explicit enough to be enhanced by AI rather than replaced by it.

Part II: The Question Nobody's Asking

The Concentration Game

While we've been debating whether AI will achieve consciousness, something far more consequential has been happening right under our noses. My simulations show a 77.9% probability of AI development centralizing into the hands of a few entities. Not because of any conspiracy, but through the mundane economics of scale. Training large models requires enormous capital. Running them requires massive infrastructure. The feedback loops are self-reinforcing: bigger models attract more users, generating more data, enabling bigger models.

This isn't a technology story. It's a power story. And it's one we've seen before. Standard Oil didn't control energy through innovation but through infrastructure. AT&T didn't dominate communication through better technology but through network effects. The railroad barons didn't win through superior engines but through controlling the tracks. AI is following the same playbook, just faster and with better PR.

The accompanying probability—63.9% chance of authoritarian governance emerging—isn't about jackbooted thugs kicking down doors. It's about the slow normalization of surveillance, the gradual acceptance of algorithmic decision-making, the quiet assumption that efficiency matters more than privacy.

We're not being forced into digital authoritarianism.

We're subscribing to it for $9.99 a month.

Democracy, in my simulations, has only a 36.1% chance of surviving in any meaningful form. Not because of coups or revolutions, but through obsolescence. When algorithms make better decisions than voters, when AI predicts needs before people express them, when efficiency becomes the only metric that matters, democratic deliberation starts to look like an expensive anachronism. Why have messy public debates when the data already tells us the optimal answer?

This is the pattern that actually determines our future—not whether we get AGI, but who controls whatever AI we do get. And across all three of our probable futures, the answer is increasingly: not you.

The Currency of Tomorrow

This brings me to what I believe is the most important insight from my analysis, the one that changes how I think about everything:

In a world where intelligence becomes commoditized, where knowledge is instantly accessible, where skills can be automated, the only real currency is agency.

Agency isn't wealth—money can be frozen, inflated away, or made irrelevant. Agency isn't employment—jobs can be automated or eliminated.

Agency isn't even knowledge—information can be replicated infinitely.

Agency is something far more fundamental: your ability to affect your environment without requiring permission from systems you don't control.

- Can you eat if the food delivery apps stop working?

- Can you stay warm if the smart grid fails?

- Can you find information if Google disappears?

- Can you maintain relationships without social media?

- Can you be productive without productivity software?

- Can you think without an algorithm telling you what to think about?

Most people, if they're honest, would answer no to most of these questions. We've traded agency for convenience so gradually that we didn't notice we were becoming dependents until the dependence was nearly complete. The smartphone that was supposed to empower us became a leash. The AI that was supposed to augment us became a crutch. The systems that were supposed to serve us became our masters.

The Builders and the Healers

In my research, I've identified two distinct groups who are unconsciously preparing for this agency crisis. They don't know about each other, rarely interact, and often hold each other in contempt. Yet they're solving the same problem from opposite directions.

The first group, whom I call the Builders, are creating physical independence from systems. They're the homesteaders growing their own food, the hackers running their own servers, the makers maintaining workshop spaces, the preppers installing solar panels. They're learning to fix instead of replace, to create instead of consume, to own instead of rent. Their driving question is: what if the systems we depend on fail or become hostile?

Visit any homesteading forum and you'll find them sharing knowledge about raising chickens, maintaining wells, preserving food, generating power. They trade tips on everything from composting toilets to ham radios. They're not necessarily political—I've seen both far-left anarchists and far-right libertarians in these spaces. What unites them is a shared recognition that dependence is vulnerability, and a determination to reduce that vulnerability through practical action.

The second group, whom I call the Healers, are creating psychological independence from conditioning. They're in therapy working through generational trauma. They're in recovery programs breaking addiction patterns. They're in meditation retreats learning to quiet the noise. They're setting boundaries with toxic family systems, leaving destructive relationships, choosing sobriety over numbing. Their driving question is: what if I'm the system that needs fixing?

Spend time in any personal development community and you'll find them discussing shadow work, attachment theory, nervous system regulation, parts work. They share stories of breaking family patterns, healing childhood wounds, recovering from various forms of psychological programming. They're also not necessarily political—trauma doesn't respect party lines. What unites them is a recognition that mental chains are still chains, and a determination to break free from psychological bondage.

The tragedy is that these two groups, natural allies in the fight for human agency, barely recognize each other's work as valid. The Builders dismiss the Healers as privileged navel-gazers engaging in luxury problems while the world burns. "Therapy won't protect you when the grid fails," they say. "Your meditation practice won't matter when the corporations control everything." They see inner work as a distraction from the real, material challenges ahead.

The Healers dismiss the Builders as paranoid preppers running from their inner problems. "You can build all the bunkers you want," they say, "but you can't run from yourself." They see the obsession with physical independence as a trauma response, a need for control masking deeper insecurities. They point out, correctly, that you can have solar panels and still be miserable, can grow your own food and still be emotionally starved.

Both groups are right. Both are incomplete. The Builder who achieves complete physical independence but carries their dysfunction off-grid has simply relocated their prison. The Healer who achieves psychological sovereignty but remains materially dependent on systems they don't control has simply decorated their cage. True agency requires both forms of independence.

The Convergence

The people who will thrive in the coming decades are those who achieve both forms of independence. They're building resilient systems while healing their trauma. They're learning practical skills while developing emotional intelligence. They're creating external security while cultivating inner peace. They represent just a tiny fraction of the population now, but their numbers are growing.

You can spot them by their unusual combination of competencies. They can grow vegetables and process emotions. They can repair electronics and maintain boundaries. They can build structures and deconstruct conditioning. They're equally comfortable with a soldering iron or a journal, a permaculture manual or a psychology text. They're not trying to escape society but to engage with it from a position of strength rather than dependence.

These are the people who confuse both camps. They're too practical for the pure healers, too introspective for the pure builders. They don't fit neatly into any political category because their politics are about personal sovereignty rather than party. They're not waiting for the revolution or the collapse or the singularity. They're building their own alternative one skill, one healing session, one relationship at a time.

Why I Don't Care About P(Doom)

The rationalist community spends enormous energy calculating P(doom)—the probability that artificial intelligence will cause human extinction. They write millions of words about alignment problems and orthogonality theses and instrumental convergence. They build elaborate logical frameworks proving that sufficiently advanced AI will inevitably destroy us unless we solve certain technical problems. It's intellectually fascinating and practically irrelevant.

I don't care about P(doom) for the same reason I don't care about the probability of asteroid impact or super-volcano eruption or any other cosmic-scale catastrophe: because I have zero agency over these outcomes. Whether AGI kills everyone or not isn't influenced by my opinion, my preparations, or my anxiety about it. It's a parlor game for people with the luxury of worrying about hypothetical futures while ignoring the very real present.

The same goes for P(heaven)—the probability that AI will create post-scarcity utopia—or P(singularity)—the probability that we'll merge with machines and transcend biology. These are masturbatory fantasies that distract from the actual choices we face. While the intellectuals debate angels on pins, real power is consolidating. While we argue about hypothetical futures, the actual future is being locked in.

What I care about are the questions where I have agency.

- Can I maintain autonomy as systems centralize?

- Can I preserve my family's wellbeing regardless of economic disruption?

- Can my children develop independent thinking despite algorithmic conditioning?

- Can we remain human—genuinely, meaningfully human—in an age of artificial intelligence?

These aren't sexy questions. They won't get you invited to conferences or quoted in think pieces. But they're the only questions that matter if you're interested in living rather than theorizing.

What I'm Actually Doing

Given everything I've learned from this analysis, you might expect me to be building a bunker or moving to a compound in the woods. But that's not what this is about. I'm not preparing for apocalypse. I'm preparing for continuity—the much more likely scenario where things continue mostly as they are, just with less room to maneuver.

Financially, I'm diversifying not just investments but entire economic systems. Some money in the traditional economy, some in local community investments, some in directly productive assets. Multiple income streams that don't all depend on the same infrastructure. Skills that compound rather than depreciate. Relationships that transcend transaction.

Technically, I'm maintaining what I call "appropriate technology sovereignty." I can use the latest AI tools but I'm not dependent on them. I can run local models on my own hardware. I understand the systems I use well enough to replace them if needed. I own my data, my content, my digital infrastructure. When possible, I choose tools I can repair over those I must replace.

Physically, I'm building what resilience experts call "appropriate redundancy." Not bunker-level preparation, but enough buffer to handle disruption. A garden that supplements rather than replaces grocery stores. Solar panels that reduce rather than eliminate grid dependence. Water storage for emergencies, not for apocalypse. Community relationships that could become support networks if needed.

Psychologically—and this took me longest to understand—I'm doing the deep work of deconstructing decades of programming. Trauma, destructive patterns, scars and wounds I carried, received or inflicted. The consumerist conditioning that equates worth with wealth. The productivity propaganda that measures value in output. The achievement addiction that mistakes busy for meaningful. The digital dopamine loops that hijack attention and scatter focus. This isn't therapy as luxury; it's therapy as liberation.

None of this is particularly dramatic. I still live near a European capital, still have a smartphone, still use social media (though trying to do less). I'm not rejecting the system wholesale—that's neither practical nor necessary. I'm simply reducing my dependence on it, increasing my options, building agency while there's still time to build it.

The Choice Ahead

My simulations suggest we have perhaps five to ten years before these trajectories solidify. Not before AGI arrives or doesn't, not before jobs disappear or don't, but before the window for building personal agency closes. After that, you'll be locked into whatever level of dependence or independence you've achieved. The concrete will set, and reshaping your position will become exponentially harder.

This isn't the story of machines becoming too powerful to resist. It's the story of systems that control the machines becoming too entrenched (and convenient) to escape. Every year you delay building agency is a year closer to that lock-in point. Every skill you don't learn, every dependency you don't break, every system you don't understand becomes a bar in a cage you're building around yourself.

The choice isn't whether to be for or against AI—that's like being for or against weather. The choice isn't even whether to use AI or not—that's like choosing whether to use electricity. The real choice is whether to engage with these systems from a position of agency or dependence, whether to be a sovereign individual who uses tools or a dependent who is used by them.

Most people will choose dependence. Not explicitly, but through a thousand small surrenders. The convenience of the smart home over the effort of understanding infrastructure. The ease of the algorithm over the work of choosing. The comfort of the feed over the discomfort of thinking. Each choice seems rational in isolation. Together, they constitute a capitulation.

But some people—maybe you—will choose differently. Not because you're paranoid or pessimistic, but because you recognize that agency is the only real wealth in an age of artificial intelligence. You'll build skills that can't be automated, relationships that can't be "algorithmed", resilience that can't be disrupted. You'll do the outer work of building practical independence and the inner work of psychological sovereignty. You'll become someone who can thrive regardless of which of the three futures arrives.

The Bottom Line

We are worried about the wrong catastrophe. We're so focused on preventing the AI apocalypse that we're sleepwalking into digital serfdom.

We're so obsessed with machines becoming conscious that we're not noticing humans becoming unconscious.

We're so concerned about artificial general intelligence that we're ignoring the very real concentration of power happening right now. Look at OpenAI's launch of GPT-5. Technically speaking, it's a blunder. It's not just that it isn't AGI; it pushed every expectation further into the future. Yet two things happened:

- Millions of users have a toxic and delusional reliance on GPT-4o's "engineered empathy". This was on full display when the uproar forced Altman to "bring back 4o" within a few days.

- Yet by making GPT-5 widely available, OpenAI will keep capturing market share, expecting to reach 1 billion weekly active users by the end of the year.

Think about this: within 3 years of launch, OpenAI will have access to the thoughts, fears, feelings, and deep secrets of 1 in 8 humans. If you thought Facebook and TikTok were bad for us, wait until this manifests in full power.

By controlling millions of people's "best friend", OpenAI is on track to become the largest soft power on the planet before the decade ends.

The 1.3 billion simulations I ran all point to the same conclusion: the determining factor for our future isn't what AI becomes capable of, but who controls it and whether you've built independence from that control. The technology details that dominate our discourse—AGI, consciousness, super-intelligence—are sideshows. The main event is the reorganization of power and the potential elimination of individual agency.

The future isn't about AI versus humans. It's about those who maintain agency versus those who surrender it. It's about those who build resilience versus those who accept dependence. It's about those who do the hard work of remaining human versus those who allow themselves to be optimized into economic units.

It was a fun experiment running simulations, analyzing probabilities, modeling scenarios. But in the end, the only probability that matters is personal: will you be someone who needs permission to live your life, or someone who doesn't?

The builders know their answer. They're out there right now, learning to create rather than consume.

The healers also know their answer. They're doing the work, breaking the patterns that bind them.

Those who understand both forms of independence, who are building external resilience while developing internal sovereignty—they're not waiting for the future. They're creating it.

The rest are just along for the ride, passengers on a ship they don't know how to sail, heading toward a destination they didn't choose, hoping someone else knows what they're doing.

I know which group I'm choosing to join. The question is: what are you going to do?

Because in the end, after all the simulations and probabilities and analyses, that's the only question that matters. Not what will AI do to humanity, but what will you do with whatever agency you have left?